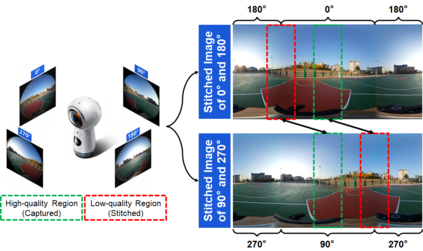

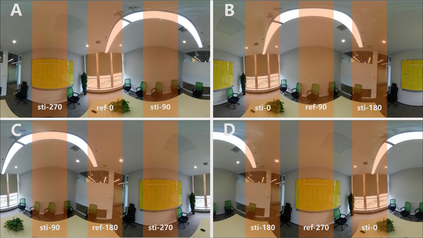

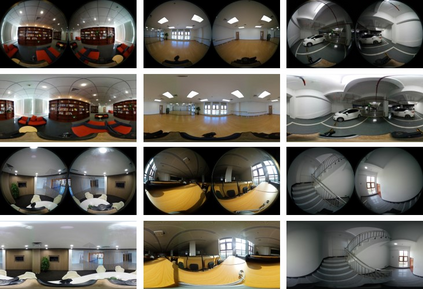

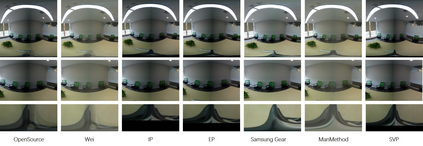

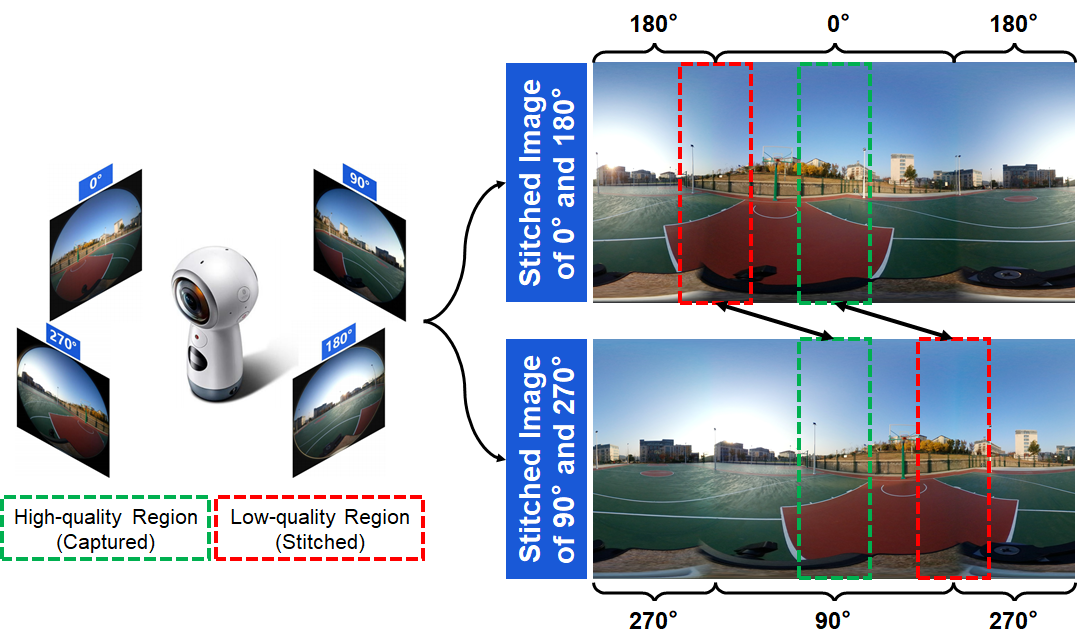

With the development of virtual reality (VR), omnidirectional images play an important role in producing immersive multimedia content. However, despite the many existing approaches to omnidirectional image stitching, the quantitative assessment of stitched image quality remains insufficiently explored. To address this problem, we establish a novel omnidirectional image dataset containing stitched images as well as dual-fisheye images captured from standard quarters of 0°, 90°, 180° and 270°. In this manner, when evaluating the quality of an image stitched from one pair of fisheye images (e.g., 0° and 180°), the other pair of fisheye images (e.g., 90° and 270°) can be used as a cross-reference that provides ground-truth observations of the stitching regions. Based on this dataset, we further benchmark seven widely used stitching models with seven evaluation metrics for image quality assessment (IQA). To the best of our knowledge, this is the first dataset that focuses on assessing the stitching quality of omnidirectional images.
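The cross-reference pairing described above can be illustrated with a minimal sketch. It is not the authors' code: the names `HEADINGS`, `cross_reference_pair` and `seam_azimuths` are hypothetical, and the seam positions rest on the assumption that each fisheye lens is centered on its capture heading and covers roughly one hemisphere, so that the two views meet 90° to either side of that heading.

```python
# Capture headings in degrees, as listed in the abstract.
HEADINGS = (0, 90, 180, 270)


def opposite(heading):
    """Heading of the back-to-back lens in a dual-fisheye pair."""
    return (heading + 180) % 360


def cross_reference_pair(stitch_pair):
    """Return the fisheye pair orthogonal to the pair being stitched.

    Hypothetical helper: for the pair (0, 180) it returns (90, 270),
    matching the example given in the abstract.
    """
    a, b = stitch_pair
    if a not in HEADINGS or b != opposite(a):
        raise ValueError("expected a back-to-back pair such as (0, 180)")
    return tuple(sorted(((a + 90) % 360, (b + 90) % 360)))


def seam_azimuths(stitch_pair):
    """Azimuths where the two views meet.

    Assumption: each lens is centered on its heading and covers about a
    hemisphere, so the seams lie 90 degrees to either side of it.
    """
    a, _ = stitch_pair
    return sorted(((a + 90) % 360, (a - 90) % 360))


if __name__ == "__main__":
    pair = (0, 180)
    print("stitched from:", pair)
    print("cross-reference pair:", cross_reference_pair(pair))
    print("seam azimuths:", seam_azimuths(pair))
```

Under these assumptions the seams of an image stitched from the 0° and 180° views fall at 90° and 270°, which are the headings of the cross-reference pair. This is why those views can observe the stitching regions directly, without any seam of their own in the way.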
