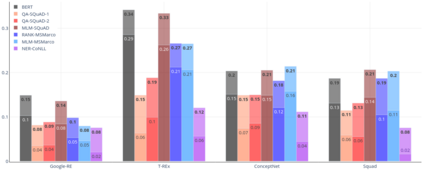

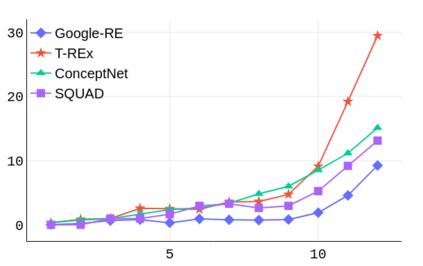

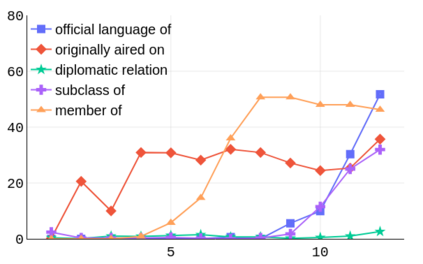

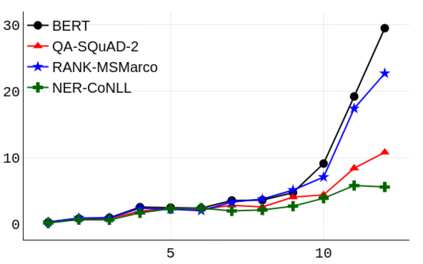

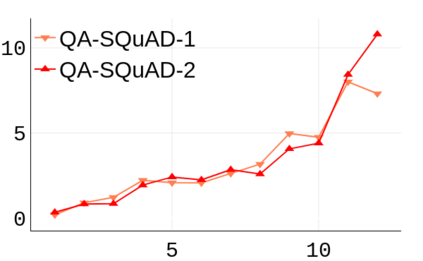

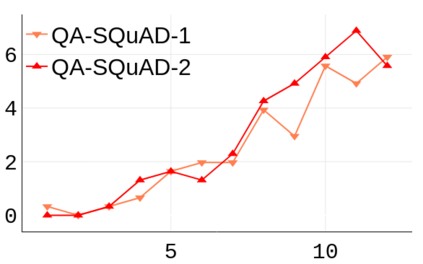

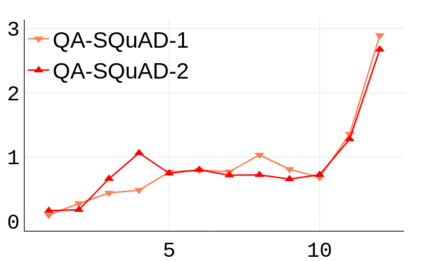

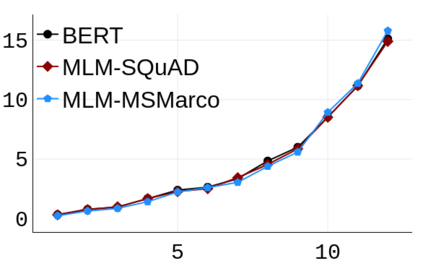

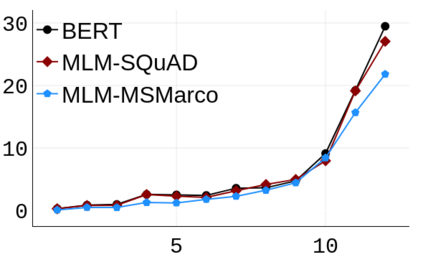

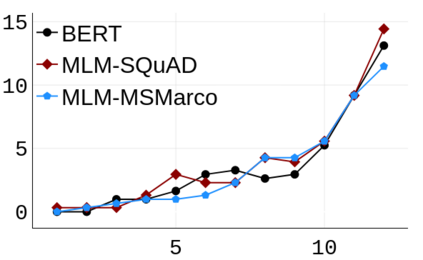

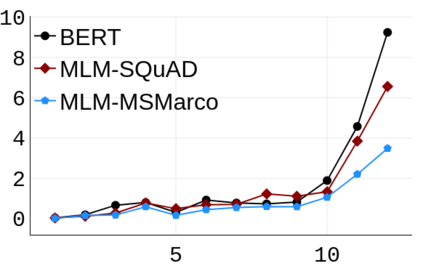

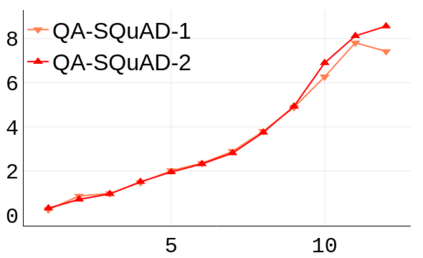

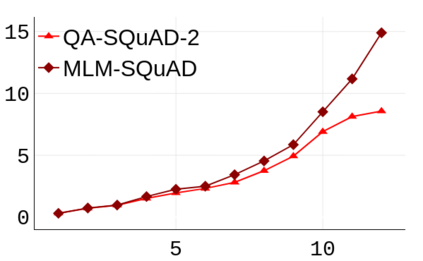

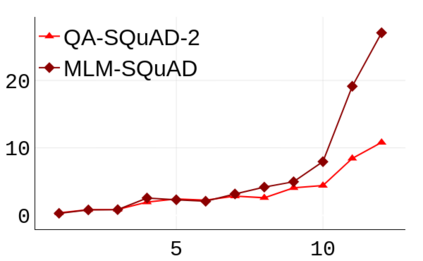

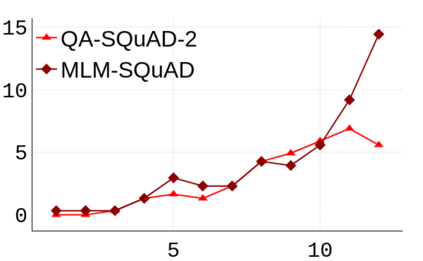

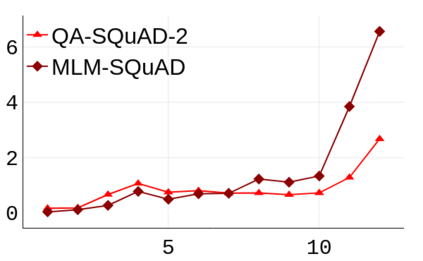

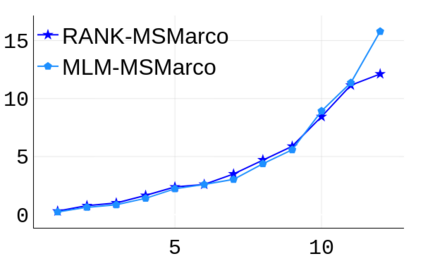

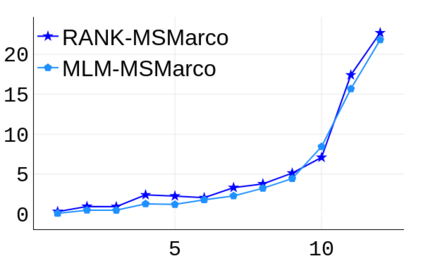

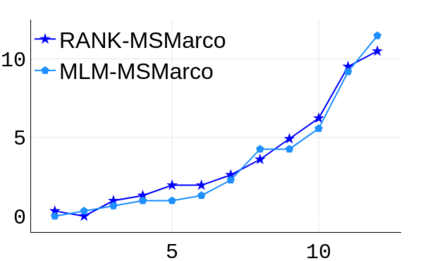

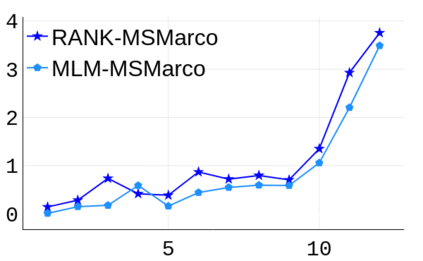

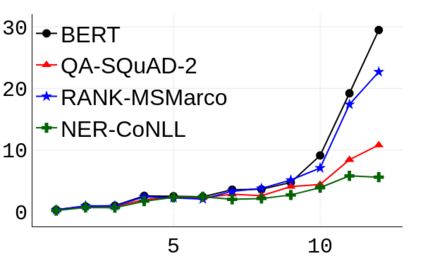

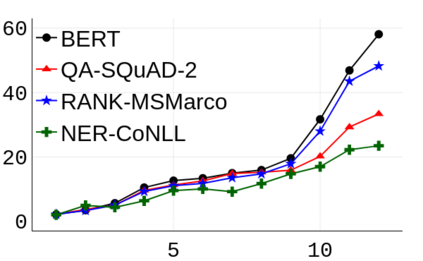

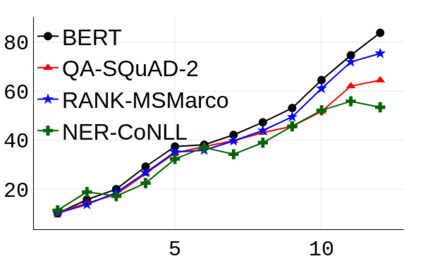

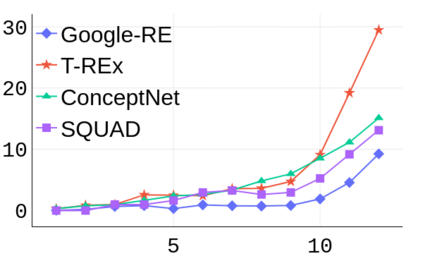

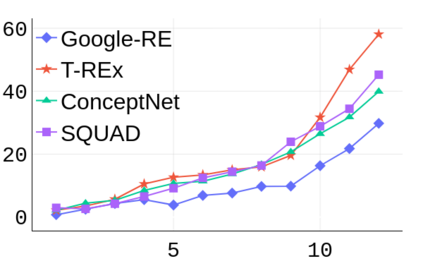

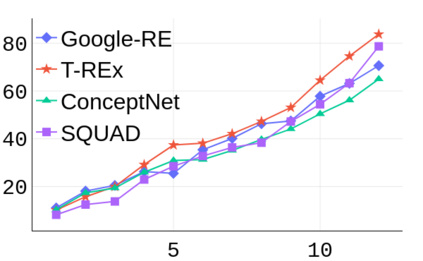

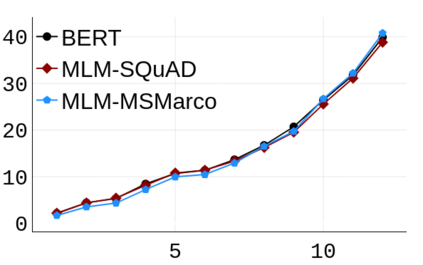

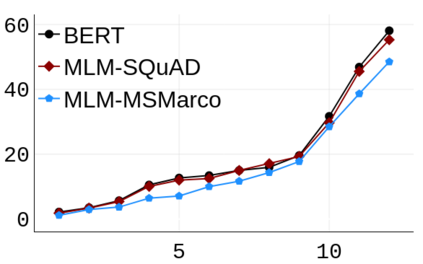

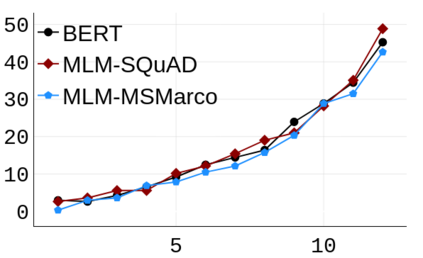

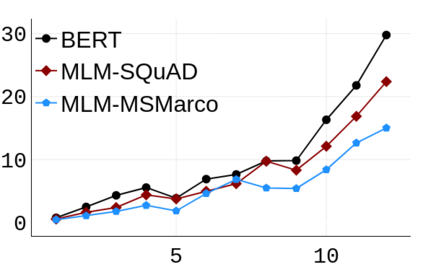

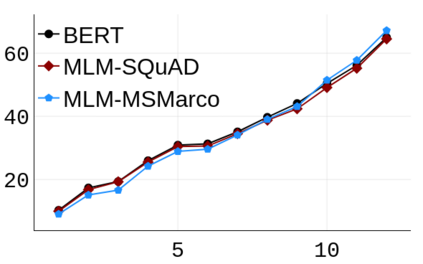

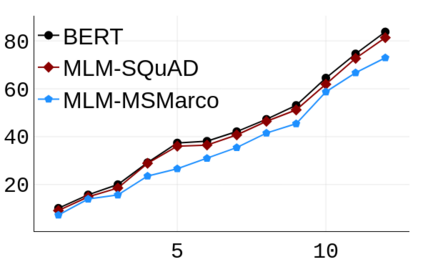

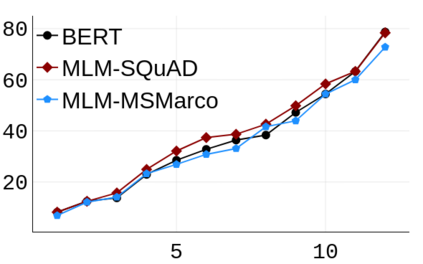

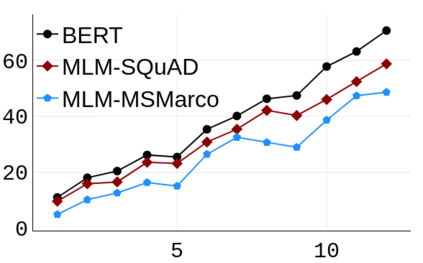

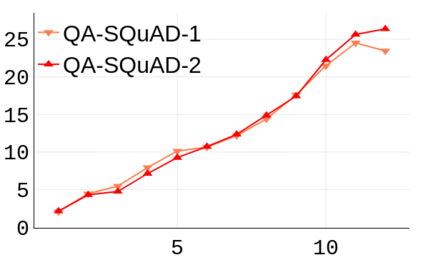

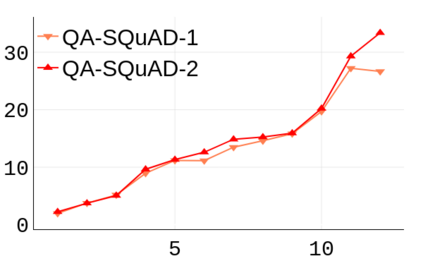

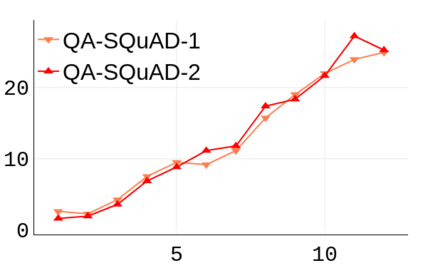

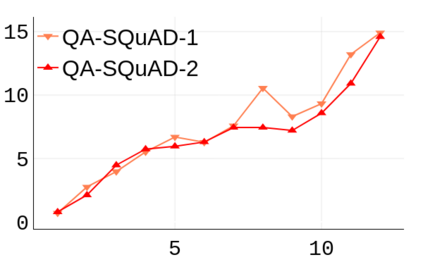

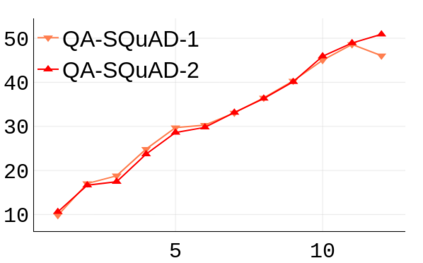

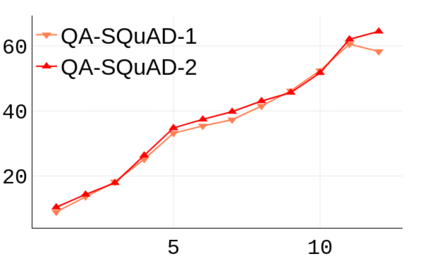

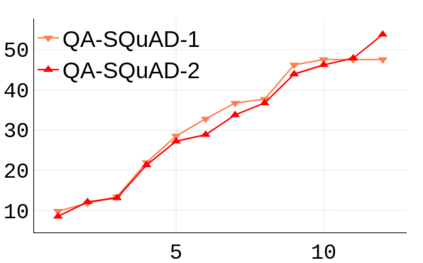

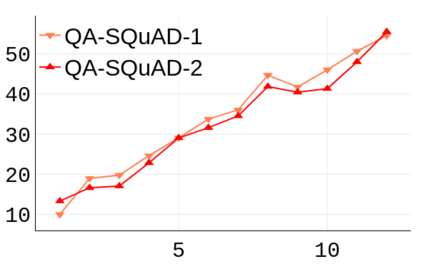

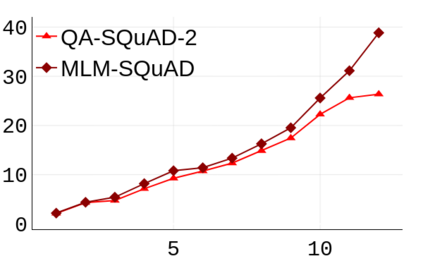

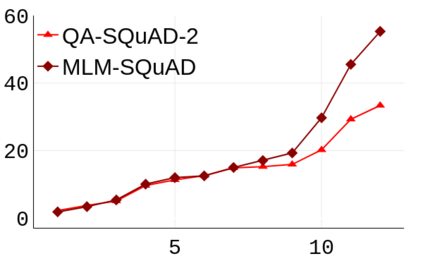

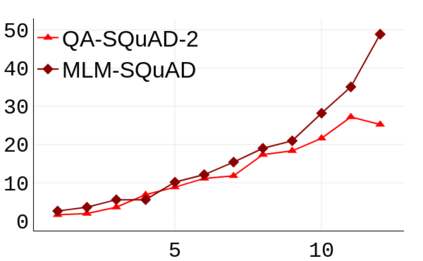

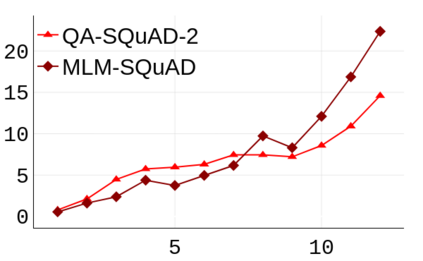

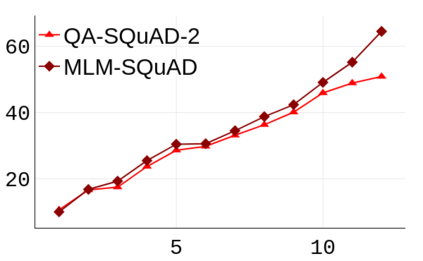

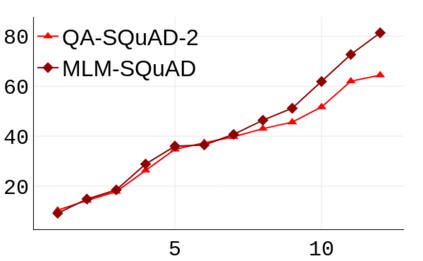

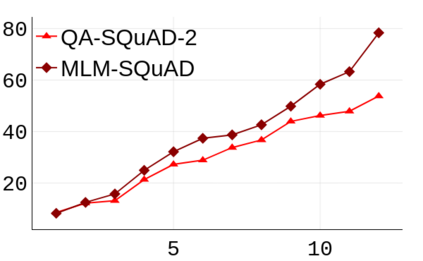

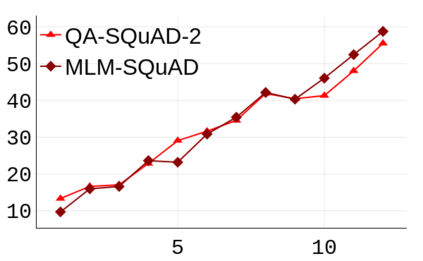

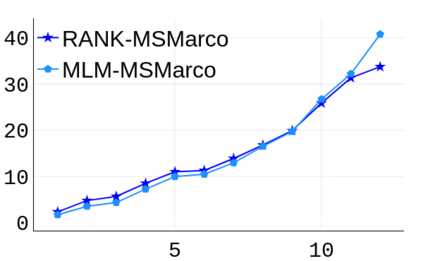

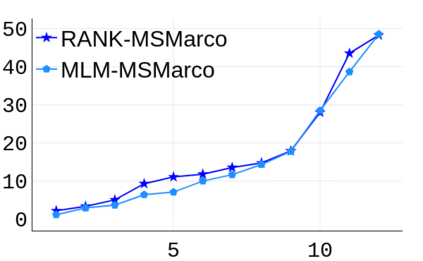

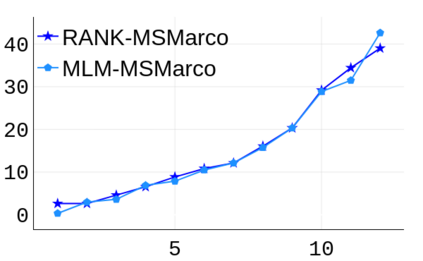

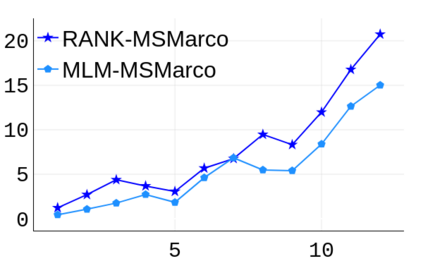

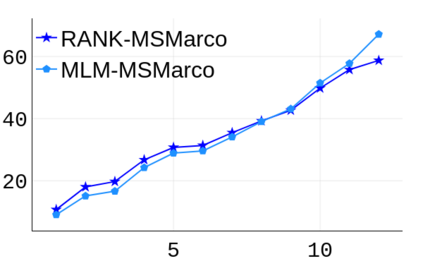

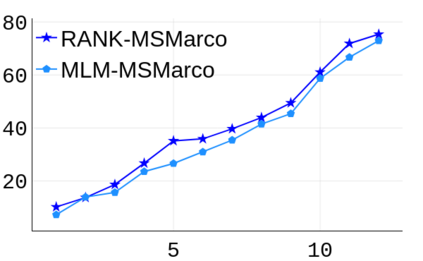

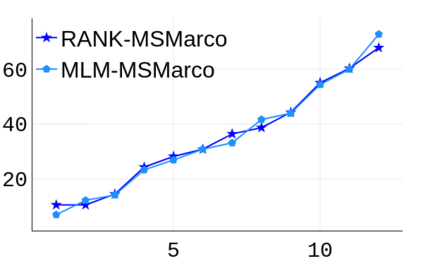

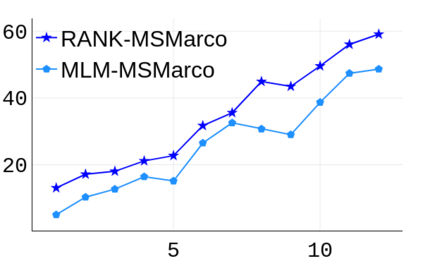

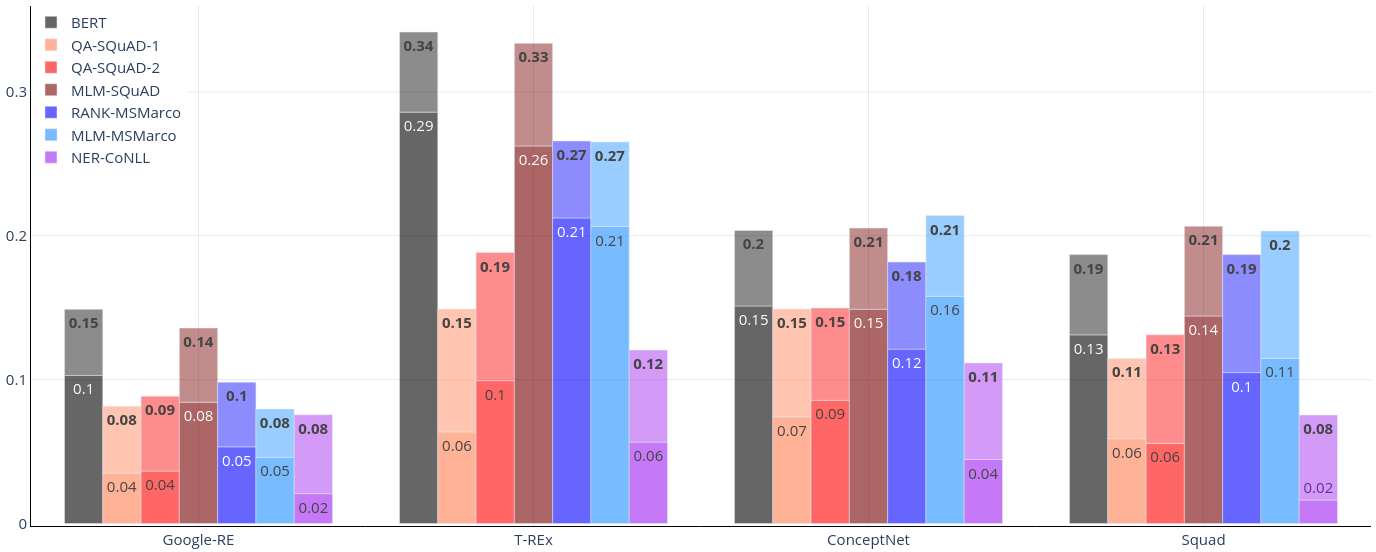

Probing complex language models has recently revealed several insights into the linguistic and semantic patterns found in their learned representations. In this paper, we probe BERT specifically to understand and measure the relational knowledge it captures. We use knowledge base completion tasks to probe every layer of pre-trained as well as fine-tuned BERT (ranking, question answering, NER). Our findings show that knowledge is not contained only in BERT's final layers: intermediate layers contribute a significant amount (17-60%) of the total knowledge found. Probing intermediate layers also reveals how different types of knowledge emerge at varying rates. When BERT is fine-tuned, relational knowledge is forgotten, and the extent of forgetting depends on the fine-tuning objective but not on the size of the dataset. We found that ranking models forget the least and retain more knowledge in their final layer. We release our code on GitHub so that the experiments can be repeated.
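
To make the idea of an intermediate-layer contribution concrete, the following is a minimal sketch under one possible reading of that figure: the share of all recovered facts that some intermediate layer predicts correctly but the final layer does not. This definition, the function name `intermediate_share`, and the toy facts are assumptions made for illustration; they are not taken from the paper or its released code.

```python
from typing import Dict, Set


def intermediate_share(correct_by_layer: Dict[int, Set[str]], final_layer: int) -> float:
    """Fraction of all recovered facts that the final layer misses.

    correct_by_layer maps a layer index to the set of fact identifiers
    that a probe on that layer completed correctly (hypothetical format).
    """
    final = correct_by_layer.get(final_layer, set())
    # "Total knowledge" is taken here as the union over all probed layers.
    total = set().union(*correct_by_layer.values())
    if not total:
        return 0.0
    only_intermediate = total - final
    return len(only_intermediate) / len(total)


# Toy data, made up for illustration (not results from the paper).
correct_by_layer = {
    10: {"Paris-capital-France", "Dante-born-Florence"},
    11: {"Paris-capital-France", "iPod-maker-Apple"},
    12: {"Paris-capital-France", "iPod-maker-Apple", "Rome-capital-Italy"},
}
share = intermediate_share(correct_by_layer, final_layer=12)
print(f"share found only in intermediate layers: {share:.2f}")
```

In this toy case, one of the four recovered facts is found only below the final layer. Computing the same quantity separately for a pre-trained and a fine-tuned model would be one way to compare how much knowledge each retains in its final layer.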
