GitHub Project Recommendation | awesome-bert: A Big List of BERT-Related Resources

awesome-bert: A Big List of BERT-Related Resources

by Jiakui

This project collects BERT-related papers and GitHub projects.

Project address:

https://github.com/Jiakui/awesome-bert

[Note] To open the links mentioned in this article, copy the URL below into your browser.

https://ai.yanxishe.com/page/blogDetail/10050?from=wx

Papers:

arXiv:1810.04805, BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, Authors: Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova

arXiv:1812.06705, Conditional BERT Contextual Augmentation, Authors: Xing Wu, Shangwen Lv, Liangjun Zang, Jizhong Han, Songlin Hu

arXiv:1812.03593, SDNet: Contextualized Attention-based Deep Network for Conversational Question Answering, Authors: Chenguang Zhu, Michael Zeng, Xuedong Huang

arXiv:1901.02860, Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context, Authors: Zihang Dai, Zhilin Yang, Yiming Yang, William W. Cohen, Jaime Carbonell, Quoc V. Le and Ruslan Salakhutdinov.

arXiv:1901.04085, Passage Re-ranking with BERT, Authors: Rodrigo Nogueira, Kyunghyun Cho

GitHub repositories:

Official project:

google-research/bert, official TensorFlow code and pre-trained models for BERT, [10053 stars]

Other implementations of BERT (beyond the official TensorFlow code):

codertimo/BERT-pytorch, Google AI 2018 BERT pytorch implementation

huggingface/pytorch-pretrained-BERT, A PyTorch implementation of Google AI's BERT model with script to load Google's pre-trained models , [2422 stars]

Separius/BERT-keras, Keras implementation of BERT with pre-trained weights, [325 stars]

soskek/bert-chainer, Chainer implementation of "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding"

innodatalabs/tbert, PyTorch port of BERT ML model

guotong1988/BERT-tensorflow, BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

dreamgonfly/BERT-pytorch, PyTorch implementation of BERT in "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding"

CyberZHG/keras-bert, Implementation of BERT that could load official pre-trained models for feature extraction and prediction

MaZhiyuanBUAA/bert-tf1.4.0, bert-tf1.4.0

dhlee347/pytorchic-bert, Pytorch Implementation of Google BERT, [106 stars]

kpot/keras-transformer, Keras library for building (Universal) Transformers, facilitating BERT and GPT models, [17 stars]

miroozyx/BERT_with_keras, A Keras version of Google's BERT model, [5 stars]

conda-forge/pytorch-pretrained-bert-feedstock, A conda-smithy repository for pytorch-pretrained-bert, [0 stars]

Other BERT resources:

brightmart/bert_language_understanding, Pre-training of Deep Bidirectional Transformers for Language Understanding: pre-train TextCNN, [503 stars]

Y1ran/NLP-BERT--ChineseVersion, Google's NLP model BERT: paper analysis and Python code, [83 stars]

yangbisheng2009/cn-bert, Applications of BERT in Chinese NLP, [7 stars]

JayYip/bert-multiple-gpu, A multiple GPU support version of BERT, [16 stars]

HighCWu/keras-bert-tpu, Implementation of BERT that could load official pre-trained models for feature extraction and prediction on TPU, [6 stars]

Willyoung2017/Bert_Attempt, PyTorch Pretrained Bert, [0 stars]

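Pydataman/bert_examples, some examples of BERT: run_classifier.py uses Google's BERT for the Quora Insincere Questions Classification binary-classification competition; run_ner.py is a named-entity recognizer built with BERT on the first-season data of the Ruijin Hospital AI Competition.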

guotong1988/BERT-chinese, BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (Chinese)

zhongyunuestc/bert_multitask, multi-task learning

Microsoft/AzureML-BERT, End-to-end walk through for fine-tuning BERT using Azure Machine Learning, [14 stars]

bigboNed3/bert_serving, export bert model for serving, [10 stars]

yoheikikuta/bert-japanese, BERT with SentencePiece for Japanese text, [92 stars]

whqwill/seq2seq-keyphrase-bert, add BERT to encoder part for https://github.com/memray/seq2seq-keyphrase-pytorch, [19 stars]

algteam/bert-examples, bert-demo, [9 stars]

cedrickchee/awesome-bert-nlp, A curated list of NLP resources focused on BERT, attention mechanism, Transformer networks, and transfer learning, [9 stars]

cnfive/cnbert, Chinese comments explaining what the BERT code does, [5 stars]

brightmart/bert_customized, bert with customized features, [20 stars]

yuanxiaosc/BERT_Paper_Chinese_Translation, Chinese translation of the paper "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding", [5 stars]

JayYip/bert-multitask-learning, BERT for Multitask Learning, [29 stars]

BERT for QA tasks:

benywon/ChineseBert, A Chinese BERT model specifically for question answering, [6 stars]

vliu15/BERT, Tensorflow implementation of BERT for QA

matthew-z/R-net, R-net in PyTorch, with BERT and ELMo, [77 stars]

nyu-dl/dl4marco-bert, Passage Re-ranking with BERT, [92 stars]

xzp27/BERT-for-Chinese-Question-Answering, [7 stars]

chiayewken/bert-qa, BERT for question answering starting with HotpotQA, [2 stars]
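For extractive QA, the BERT paper predicts a start and an end position in the passage: a candidate span from token i to token j (with j ≥ i) is scored as the sum of its start score and end score, and the highest-scoring span is the answer. The minimal sketch below shows only that span-selection step, assuming the per-token start/end logits have already been produced by a fine-tuned model. The function name `best_span`, the `max_answer_len` cap, and the logit values are illustrative assumptions, not code from any repository listed here.

```python
from typing import List, Tuple


def best_span(start_logits: List[float], end_logits: List[float],
              max_answer_len: int = 30) -> Tuple[int, int, float]:
    """Return (start, end, score) of the best span with start <= end.

    Span score = start_logits[i] + end_logits[j]. max_answer_len is a
    hypothetical cap on span length (in tokens), chosen for illustration.
    """
    if len(start_logits) != len(end_logits) or not start_logits:
        raise ValueError("logit lists must be non-empty and of equal length")
    best = (0, 0, float("-inf"))
    for i, s in enumerate(start_logits):
        # Only end positions j with i <= j < i + max_answer_len are valid.
        for j in range(i, min(i + max_answer_len, len(end_logits))):
            score = s + end_logits[j]
            if score > best[2]:
                best = (i, j, score)
    return best


# Toy example: made-up logits for a 6-token passage.
start = [0.1, 2.0, 0.3, -1.0, 0.5, 0.0]
end = [0.0, 0.2, 1.5, 3.0, -0.5, 0.1]
print(best_span(start, end))  # (1, 3, 5.0)
```

Real fine-tuning scripts add further bookkeeping (excluding question tokens, handling "no answer", mapping sub-tokens back to the original text), which this sketch deliberately leaves out.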

BERT for classification tasks:

zhpmatrix/Kaggle-Quora-Insincere-Questions-Classification, New Kaggle competition (baseline): a BERT fine-tuning solution plus a Transformer Encoder solution based on tensor2tensor

maksna/bert-fine-tuning-for-chinese-multiclass-classification, Fine-tunes Google's pre-trained BERT model for Chinese multiclass classification

NLPScott/bert-Chinese-classification-task, Hands-on Chinese text classification with BERT, [51 stars]

Socialbird-AILab/BERT-Classification-Tutorial, [151 stars]

fooSynaptic/BERT_classifer_trial, BERT trial for Chinese corpus classification

xiaopingzhong/bert-finetune-for-classfier, Fine-tuning the BERT model while building your own dataset for classification

brightmart/sentiment_analysis_fine_grain, Multi-label Classification with BERT; Fine Grained Sentiment Analysis from AI challenger, [170 stars]

pengming617/bert_classification, Text classification using BERT's pre-trained Chinese model, [6 stars]

xieyufei1993/Bert-Pytorch-Chinese-TextClassification, Pytorch Bert Finetune in Chinese Text Classification, [7 stars]

liyibo/text-classification-demos, Neural models for Text Classification in Tensorflow, such as cnn, dpcnn, fasttext, bert ..., [6 stars]

circlePi/BERT_Chinese_Text_Class_By_pytorch, A PyTorch implementation of Chinese text classification based on BERT_Pretrained_Model, [3 stars]

BERT for NER tasks:

JamesGu14/BERT-NER-CLI, Bert NER command line tester with step by step setup guide, [20 stars]

zhpmatrix/bert-sequence-tagging, BERT-based Chinese sequence labeling

kyzhouhzau/BERT-NER, Use Google BERT to do CoNLL-2003 NER, [160 stars]

king-menin/ner-bert, NER task solution (bert-Bi-LSTM-CRF) with google bert https://github.com/google-research.

macanv/BERT-BiLSMT-CRF-NER, TensorFlow solution of NER task using BiLSTM-CRF model with Google BERT fine-tuning, [349 stars]

FuYanzhe2/Name-Entity-Recognition, LSTM-CRF, Lattice-CRF, bert-ner, plus a running list of recent NER papers, [11 stars]

mhcao916/NER_Based_on_BERT, A Chinese NER project based on Google's BERT model

ProHiryu/bert-chinese-ner, Chinese NER using the pre-trained language model BERT, [88 stars]

sberbank-ai/ner-bert, BERT-NER (ner-bert) with Google BERT, [22 stars]

kyzhouhzau/Bert-BiLSTM-CRF, This model is based on bert-as-service. Model structure: BERT embedding, BiLSTM, CRF, [3 stars]

Hoiy/berserker, Berserker - BERt chineSE woRd toKenizER, Berserker (BERt chineSE woRd toKenizER) is a Chinese tokenizer built on top of Google's BERT model, [2 stars]
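The NER projects above treat named-entity recognition as token-level sequence labeling: the model assigns one tag per token, and entities are then read off the tag sequence. Assuming a BIO tagging scheme (common for CoNLL-2003, though individual repositories may use BIOES or other variants), the minimal sketch below shows that decoding step. The function `bio_to_spans` and the lenient handling of a stray `I-` tag are illustrative choices, not taken from any listed project; mapping WordPiece sub-tokens back to words is omitted.

```python
from typing import List, Optional, Tuple


def bio_to_spans(tags: List[str]) -> List[Tuple[str, int, int]]:
    """Convert BIO tags to (entity_type, start, end_inclusive) spans.

    Lenient decoding (an assumption): an I-X that does not continue an
    entity of type X starts a new entity instead of being dropped.
    """
    spans: List[Tuple[str, int, int]] = []
    cur_type: Optional[str] = None
    cur_start = 0
    for i, tag in enumerate(tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != cur_type):
            if cur_type is not None:  # close the entity that was open
                spans.append((cur_type, cur_start, i - 1))
            cur_type, cur_start = tag[2:], i
        elif tag.startswith("I-"):
            continue  # same type: extend the current entity
        else:  # "O" closes any open entity
            if cur_type is not None:
                spans.append((cur_type, cur_start, i - 1))
            cur_type = None
    if cur_type is not None:  # entity running to the end of the sequence
        spans.append((cur_type, cur_start, len(tags) - 1))
    return spans


tokens = ["John", "lives", "in", "New", "York", "City", "."]
tags = ["B-PER", "O", "O", "B-LOC", "I-LOC", "I-LOC", "O"]
for etype, s, e in bio_to_spans(tags):
    print(etype, " ".join(tokens[s:e + 1]))
# PER John
# LOC New York City
```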

BERT for text generation:

asyml/texar, Toolkit for Text Generation and Beyond https://texar.io. Texar is a general-purpose text generation toolkit; it also implements BERT for classification and, combined with Texar's other modules, for text generation applications, [892 stars]

BERT for knowledge graph tasks:

lvjianxin/Knowledge-extraction, Chinese knowledge extraction. Baseline: Bi-LSTM+CRF; upgraded version: BERT pre-training

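sakuranew/BERT-AttributeExtraction, Using BERT for attribute extraction in knowledge graphs, via fine-tuning and feature extraction: applies BERT-based fine-tuning and feature-extraction methods to extract attributes from Baidu Baike person entries for a knowledge graph, [10 stars]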

BERT visualization toolkit:

jessevig/bertviz, Tool for visualizing BERT's attention, [147 stars]

BERT for chatbots:

GaoQ1/rasa_nlu_gq, turn natural language into structured data (supports Chinese, with several custom models for different scenarios and tasks), [33 stars]

GaoQ1/rasa_chatbot_cn, A dialogue-system demo built on rasa-nlu and rasa-core, [60 stars]

GaoQ1/rasa-bert-finetune, BERT fine-tuning support for rasa-nlu, [5 stars]

BERT language models and embeddings:

hanxiao/bert-as-service, Mapping a variable-length sentence to a fixed-length vector using pretrained BERT model, [1941 stars]

YC-wind/embedding_study, Studying character vectors generated by Chinese pre-trained models; tests how BERT and ELMo perform on Chinese, [17 stars]

Kyubyong/bert-token-embeddings, Bert Pretrained Token Embeddings, [24 stars]

xu-song/bert_as_language_model, bert as language model, fork from https://github.com/google-research/bert, [22 stars]

yuanxiaosc/Deep_dynamic_word_representation, TensorFlow code and pre-trained models for deep dynamic word representation (DDWR). It combines the BERT model and ELMo's deep context word representation, [7 stars]
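bert-as-service, listed above, maps a variable-length sentence to a fixed-length vector. One common way to get such a vector (an assumption here; the service exposes several pooling strategies and this is not its code) is to average BERT's per-token hidden vectors while skipping padding positions. The minimal sketch below shows masked mean pooling in plain Python; the function name `masked_mean_pool` is hypothetical, and the toy 3-dimensional vectors stand in for real hidden states (768-dimensional in BERT-Base).

```python
from typing import List


def masked_mean_pool(token_vecs: List[List[float]], mask: List[int]) -> List[float]:
    """Average the token vectors whose mask entry is 1 (real tokens, not padding)."""
    if len(token_vecs) != len(mask):
        raise ValueError("token_vecs and mask must have the same length")
    kept = [v for v, m in zip(token_vecs, mask) if m == 1]
    if not kept:
        raise ValueError("mask selects no tokens")
    dim = len(kept[0])
    return [sum(v[d] for v in kept) / len(kept) for d in range(dim)]


# Two "sentences" of different lengths, both padded to 4 tokens (toy vectors).
sent_a = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
mask_a = [1, 1, 0, 0]
sent_b = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [3.0, 3.0, 3.0], [0.0, 0.0, 0.0]]
mask_b = [1, 1, 1, 0]
print(masked_mean_pool(sent_a, mask_a))  # [2.0, 1.0, 1.0]
print(masked_mean_pool(sent_b, mask_b))  # [2.0, 2.0, 2.0]
```

Both sentences end up as vectors of the same length regardless of how many real tokens they contain, which is what makes the output usable for similarity search or as classifier features.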

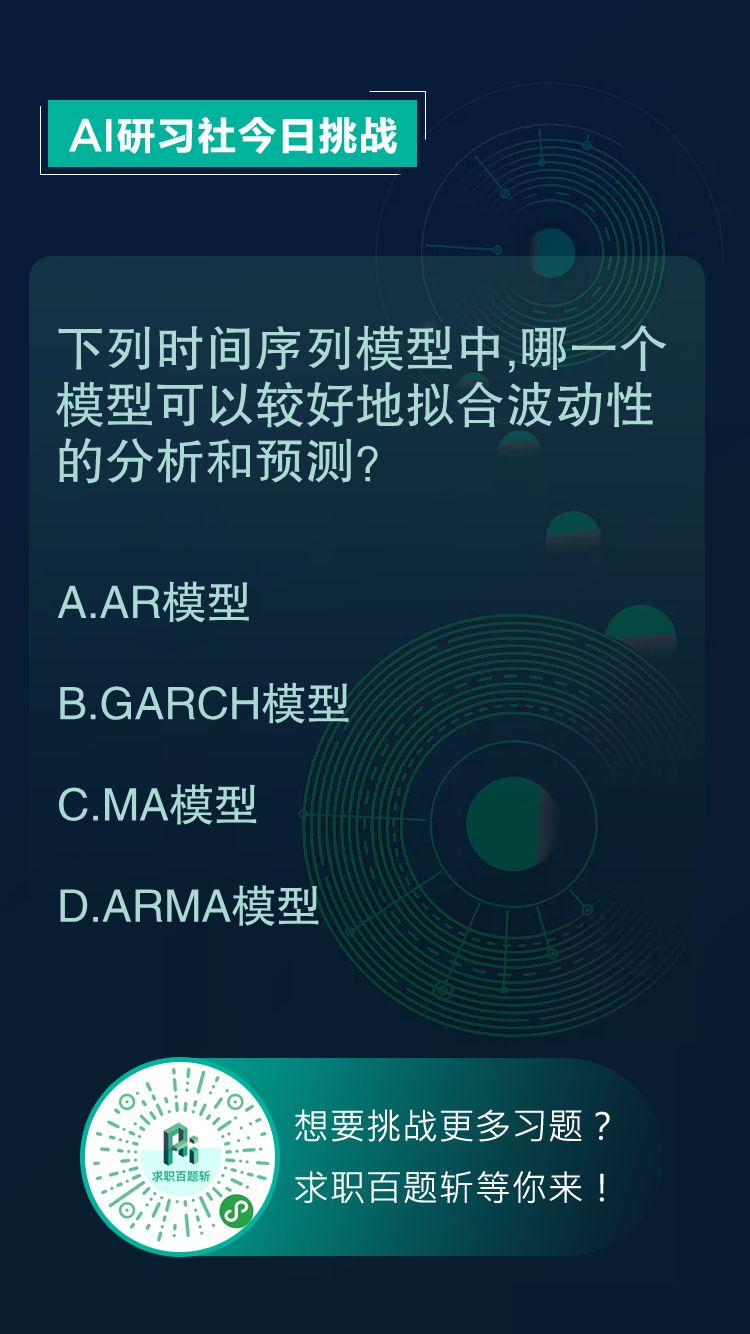

AI求职百题斩 · 每日一题

每天进步一点点,扫码参与每日一题!

点击下方“阅读原文”,参与 强化学习论文讨论小组 互动↙