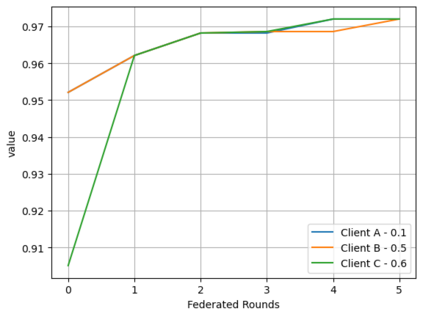

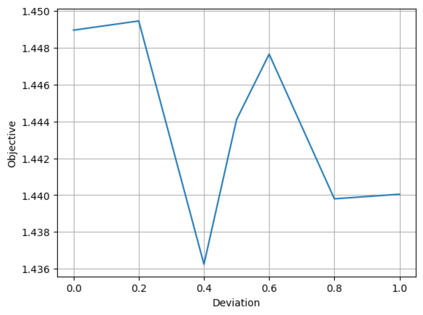

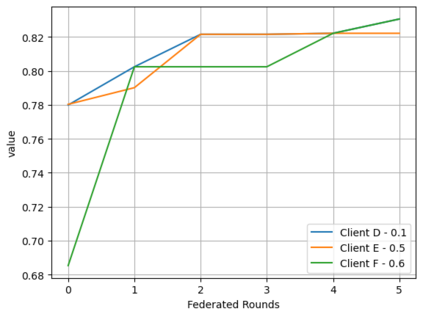

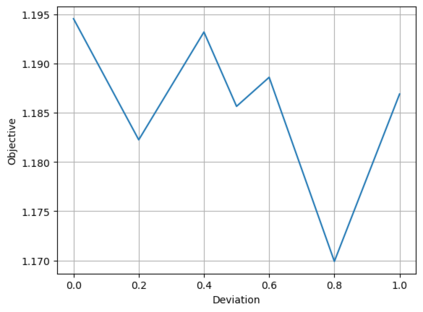

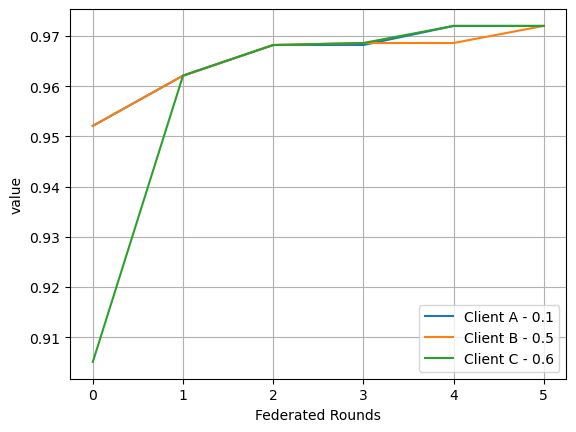

Federated learning (FL) is a paradigm that allows distributed clients to learn a shared machine learning model without sharing their sensitive training data. While largely decentralized, FL requires resources to fund a central orchestrator or to reimburse contributors of datasets in order to incentivize participation. Inspired by insights from prior-independent auction design, we propose a mechanism, FIPIA (Federated Incentive Payments via Prior-Independent Auctions), to collect monetary contributions from self-interested clients. The mechanism operates in the semi-honest trust model and works even if clients have heterogeneous levels of interest in receiving high-quality models and the server does not know those levels of interest. We run experiments on the MNIST, FashionMNIST, and CIFAR-10 datasets to evaluate both the model quality that clients obtain under FIPIA and FIPIA's incentive properties.
