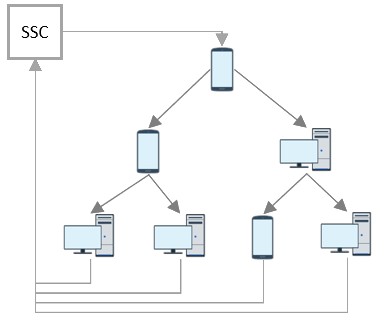

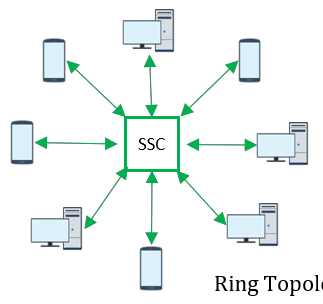

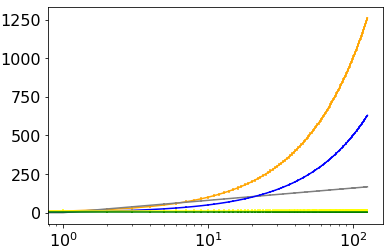

Distributed multi-agent learning enables agents to cooperatively train a model without requiring them to share their datasets. While this setting ensures some level of privacy, it has been shown that, even when data is not directly shared, the training process is vulnerable to privacy attacks, including data reconstruction and model inversion attacks. Additionally, malicious agents that train on inverted labels or random data may arbitrarily weaken the accuracy of the global model. This paper addresses these challenges and presents Privacy-preserving and Trustable Distributed Learning (PT-DL), a fully decentralized framework that relies on Differential Privacy to guarantee strong privacy protection of the agents' data and on Ethereum smart contracts to ensure trustability. The paper shows that, in a malicious trust model, PT-DL is resilient with high probability to collusion attacks of up to 50%. The experimental evaluation illustrates the benefits of the proposed model as a privacy-preserving and trustable distributed multi-agent learning system on several classification tasks.
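The abstract does not say which Differential Privacy mechanism PT-DL uses. As a rough illustration of how an agent might privatize a model update before sharing it, the sketch below applies the generic Gaussian mechanism: clip the update to a bounded L2 norm, then add Gaussian noise scaled to that bound. The function names (`clip_by_l2_norm`, `privatize_update`) and the parameters `max_norm` and `noise_multiplier` are assumptions made for this example, not names or values taken from the paper.

```python
import math
import random


def clip_by_l2_norm(vector, max_norm):
    """Scale `vector` down so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm <= max_norm or norm == 0.0:
        return list(vector)
    scale = max_norm / norm
    return [x * scale for x in vector]


def privatize_update(update, max_norm, noise_multiplier, rng):
    """Clip an update and add Gaussian noise (generic Gaussian mechanism).

    Assumption: the noise standard deviation is noise_multiplier * max_norm.
    The privacy budget this yields depends on an accounting method that is
    outside the scope of this sketch.
    """
    clipped = clip_by_l2_norm(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


# Hypothetical usage: one agent privatizes a two-dimensional update.
rng = random.Random(0)
raw_update = [3.0, 4.0]  # L2 norm is 5.0
clipped = clip_by_l2_norm(raw_update, max_norm=1.0)
noisy = privatize_update(raw_update, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds how much any single agent's data can influence the shared update, which is what lets the added noise be calibrated. A larger `noise_multiplier` gives stronger privacy at the cost of accuracy.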
