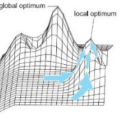

This paper introduces Stochastic Gradient Langevin Boosting (SGLB), a powerful and efficient machine learning framework that can handle a wide range of loss functions and has provable generalization guarantees. The method is based on a special form of the Langevin diffusion equation designed specifically for gradient boosting. This allows us to theoretically guarantee convergence to the global optimum even for multimodal loss functions, whereas standard gradient boosting algorithms can guarantee convergence only to a local optimum. We also show empirically that SGLB outperforms classic gradient boosting on classification tasks with the 0-1 loss function, which is known to be multimodal.
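To give a rough feel for how a Langevin diffusion can be grafted onto gradient boosting, the toy sketch below applies the familiar stochastic-gradient-Langevin recipe to boosting. Before each base learner is fitted, it adds Gaussian noise with variance 2/(βε) to the gradients, so the noise injected into a step of size ε has variance 2ε/β. At every step it also shrinks the existing ensemble by a factor (1 - γε). This is an illustrative assumption, not the paper's exact algorithm. The names `langevin_boost` and `fit_stump`, the squared loss, the decision-stump base learners, and the parameters `lr` (ε), `beta` (inverse temperature β) and `gamma` (shrinkage γ) are all hypothetical choices made for the example.

```python
import math
import random


def fit_stump(xs, targets):
    """Least-squares decision stump: choose the threshold on x that best fits targets."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right) if right else left_mean
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv


def langevin_boost(xs, ys, n_iter=200, lr=0.1, beta=1e4, gamma=1e-3, seed=0):
    """Hypothetical Langevin-style boosting for squared loss L = 0.5 * (F - y)^2.

    Returns the ensemble's predictions on the training points.
    """
    rng = random.Random(seed)
    preds = [0.0] * len(xs)
    # Noise std chosen so that lr * noise has variance 2 * lr / beta (SGLD scaling).
    noise_std = math.sqrt(2.0 / (beta * lr))
    for _ in range(n_iter):
        # Gradient of the squared loss w.r.t. each prediction, plus Gaussian noise.
        noisy_grad = [(p - y) + rng.gauss(0.0, noise_std) for p, y in zip(preds, ys)]
        # Fit the base learner to the noisy negative gradient.
        h = fit_stump(xs, [-g for g in noisy_grad])
        # Shrink the current ensemble (regularization), then step along h.
        preds = [(1.0 - gamma * lr) * p + lr * h(x) for p, x in zip(preds, xs)]
    return preds


xs = [i / 10 for i in range(20)]
ys = [0.0 if x < 1.0 else 1.0 for x in xs]
noisy = langevin_boost(xs, ys)
quiet = langevin_boost(xs, ys, beta=1e12)  # near-zero noise
print(max(abs(p - y) for p, y in zip(quiet, ys)))
```

With a very large `beta` the injected noise vanishes, and the loop reduces to ordinary gradient boosting with shrinkage. A smaller `beta` lets the iterates move between basins of the loss. For Langevin dynamics in general, the stationary distribution is proportional to exp(-β L), which concentrates around the global minima as β grows. This is the intuition behind the global-convergence guarantee the abstract refers to.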

翻译:本文介绍Stochastestic Gradient Langevin Boosting(SGLB),这是一个强大而高效的机器学习框架,可以处理各种损失功能,并具有可变的通用保证。该方法基于一种专门为梯度加速而设计的Langevin扩散方程式的特殊形式。这使我们能够在理论上保证即使是多式联运损失功能的全球趋同,而标准的梯度加速算法只能保证当地的最佳性。我们还从经验上表明,SGLB在应用于具有0-1损失功能的分类任务时,优于典型的梯度加速,而这种职能是已知的多式联运。