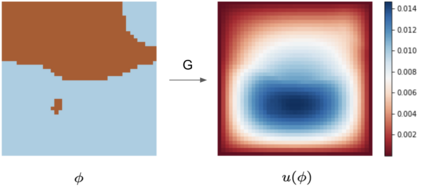

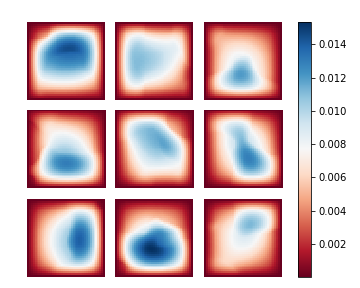

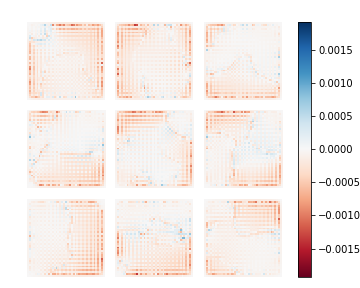

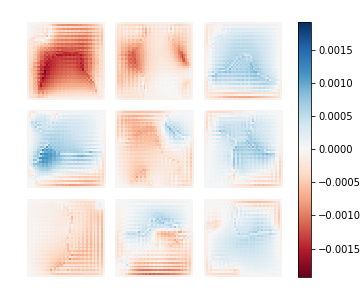

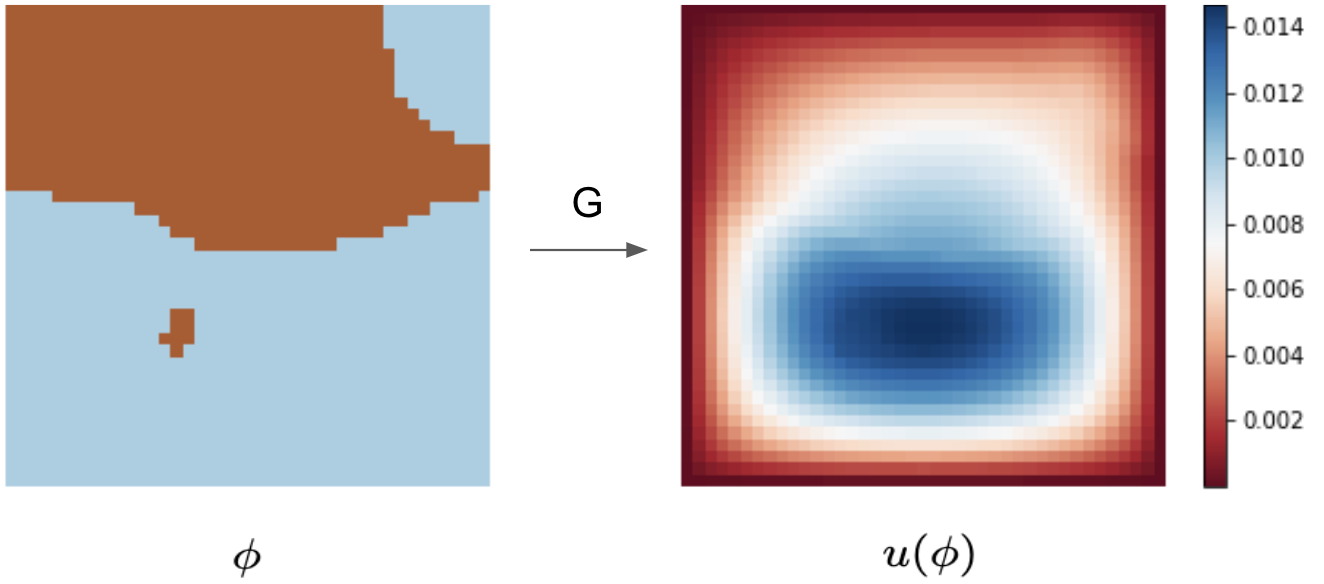

We introduce a practical method to enforce linear partial differential equation (PDE) constraints for functions defined by neural networks (NNs), up to a desired tolerance. By combining methods in differentiable physics and applications of the implicit function theorem to NN models, we develop a differentiable PDE-constrained NN layer. During training, our model learns a family of functions, each of which defines a mapping from PDE parameters to PDE solutions. At inference time, the model finds an optimal linear combination of the functions in the learned family by solving a PDE-constrained optimization problem. Our method provides continuous solutions over the domain of interest that exactly satisfy desired physical constraints. Our results show that incorporating hard constraints directly into the NN architecture achieves much lower test error than training on an unconstrained objective.
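The inference step described above, choosing the best linear combination of a fixed family of functions subject to a linear PDE, can be illustrated with a small least-squares problem. The sketch below is not the authors' implementation. It assumes a toy 1D equation u''(x) = g(x), uses a hypothetical sine basis (`basis`, `basis_second_derivative`) in place of the NN-learned family, and enforces the constraint only at collocation points through `np.linalg.lstsq`.

```python
import numpy as np

def basis(x, n_basis):
    """Hypothetical stand-in for the learned family: sin(k*pi*x), k = 1..n_basis."""
    k = np.arange(1, n_basis + 1)
    return np.sin(np.pi * np.outer(x, k))  # shape (len(x), n_basis)

def basis_second_derivative(x, n_basis):
    """Linear operator d^2/dx^2 applied to each basis function analytically."""
    k = np.arange(1, n_basis + 1)
    return -(np.pi * k) ** 2 * np.sin(np.pi * np.outer(x, k))

def solve_weights(x, g, n_basis):
    """Find weights w minimizing ||A w - g||^2, where A[j, k] = (L f_k)(x_j)."""
    A = basis_second_derivative(x, n_basis)
    w, *_ = np.linalg.lstsq(A, g, rcond=None)
    return w

# Collocation points inside the domain (assumed to be [0, 1] for this toy problem).
x = np.linspace(0.05, 0.95, 50)

# Source term chosen so that the true solution u = sin(pi x) + 0.5 sin(2 pi x)
# lies in the span of the basis.
g = -np.pi**2 * np.sin(np.pi * x) - 0.5 * (2 * np.pi) ** 2 * np.sin(2 * np.pi * x)

w = solve_weights(x, g, n_basis=4)

# Largest violation of the PDE constraint at the collocation points.
residual = np.max(np.abs(basis_second_derivative(x, 4) @ w - g))

# Continuous solution: the weighted basis can be evaluated at any point in the domain.
u_mid = basis(np.array([0.5]), 4) @ w
```

Because the solution is a weighted sum of continuous functions, it can be evaluated anywhere in the domain, not only at the collocation points. In the paper's setting the basis functions come from a trained network and the solve is differentiated through during training; neither of those is shown here.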
