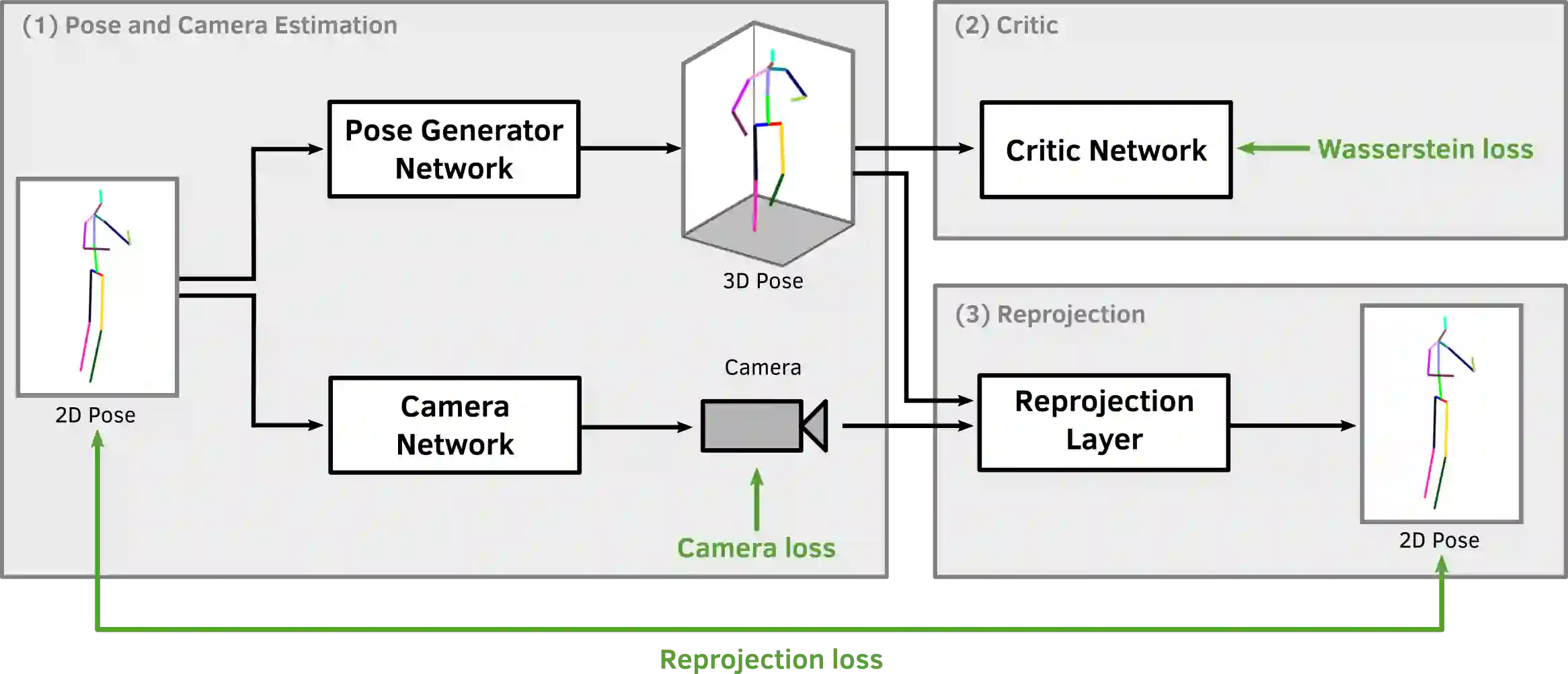

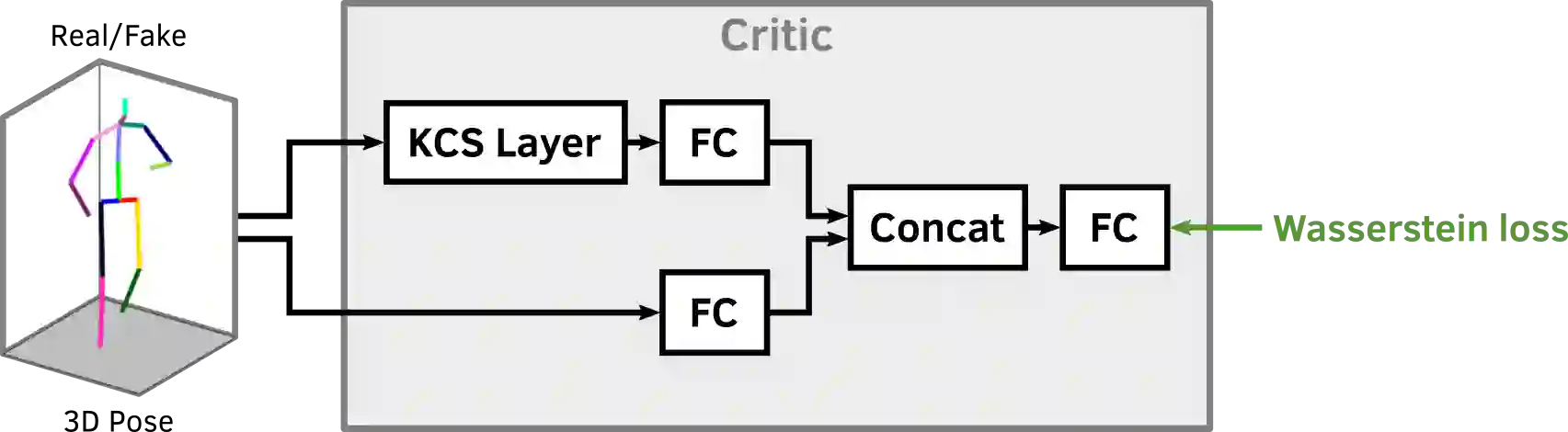

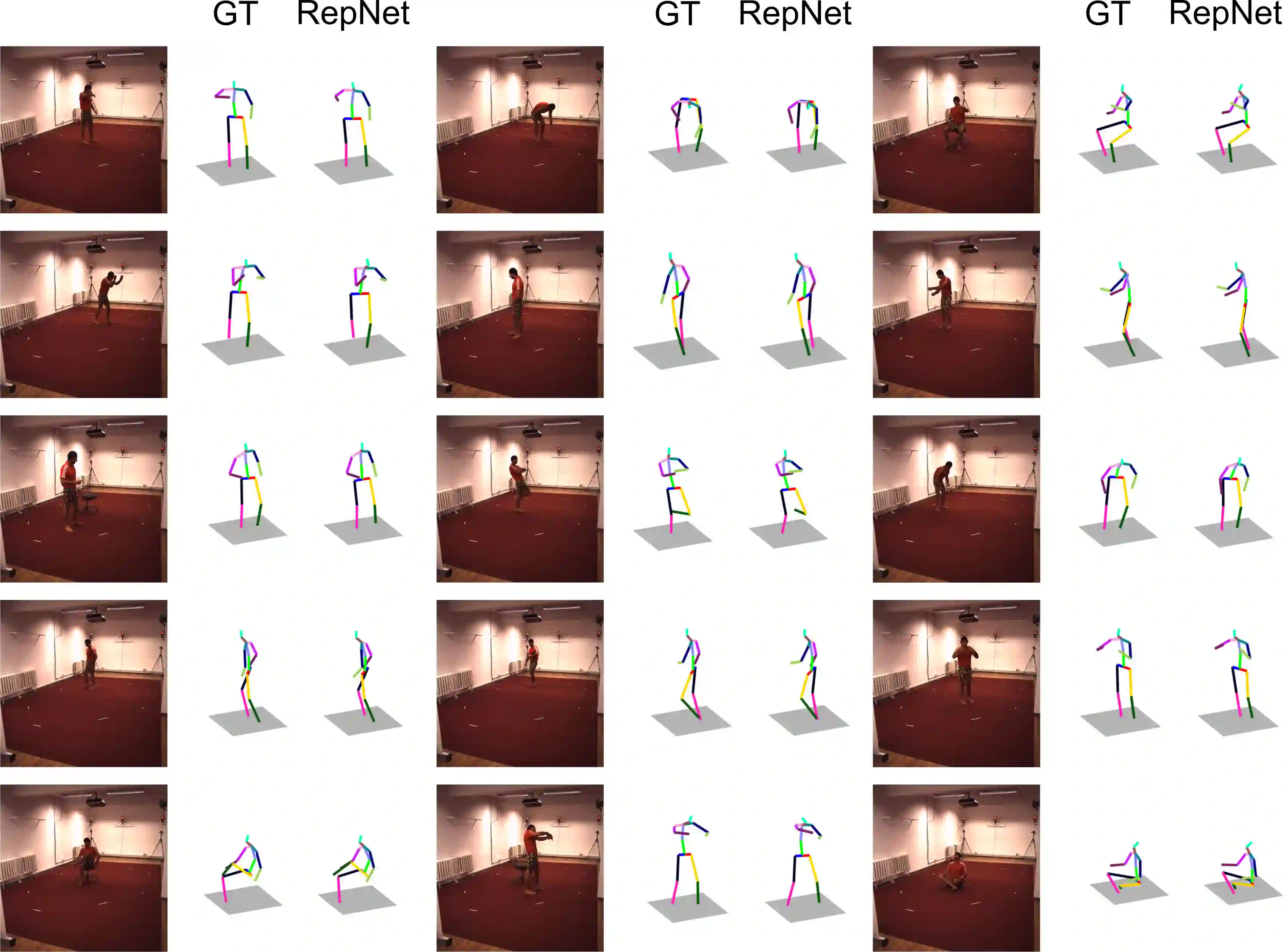

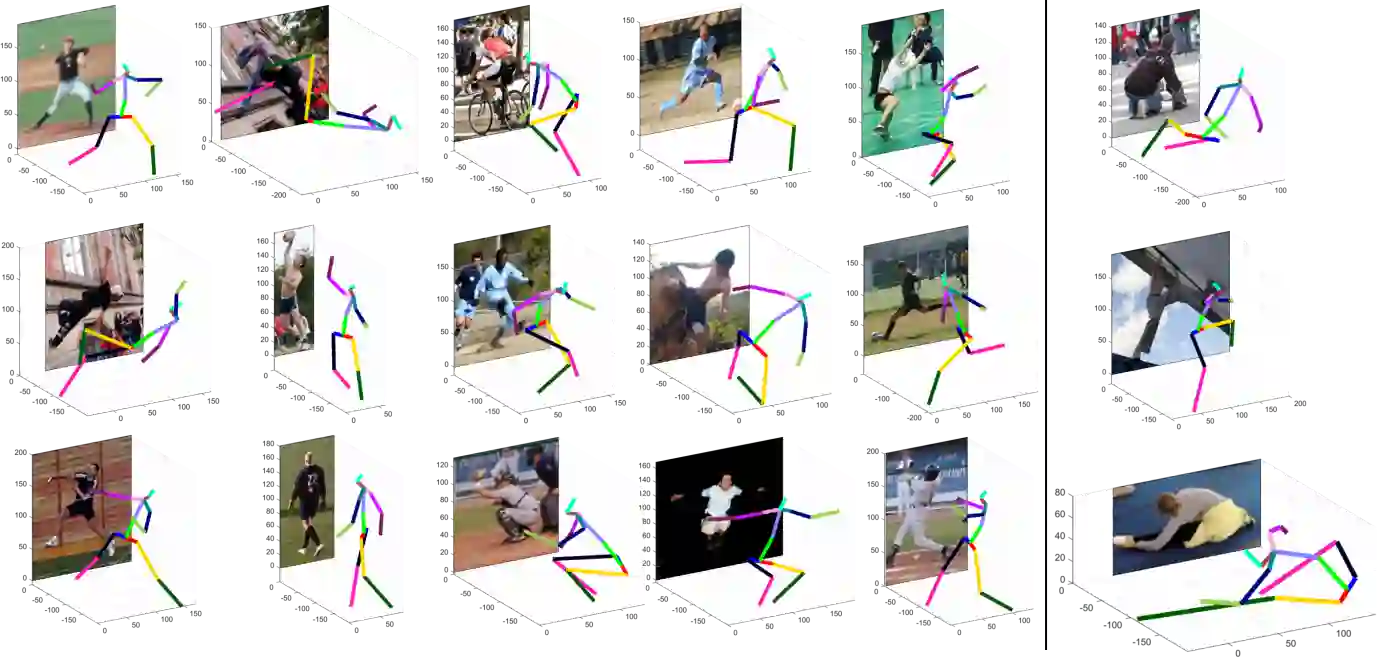

This paper addresses the problem of 3D human pose estimation from single images. For a long time, human skeletons were parameterized and fitted to the observation by minimizing a reprojection error; nowadays, researchers directly use neural networks to infer the 3D pose from the observations. However, most of these approaches ignore the fact that a reprojection constraint has to be satisfied, and they are prone to overfitting. We tackle the overfitting problem by ignoring 2D-to-3D correspondences. This efficiently avoids simple memorization of the training data and allows for weakly supervised training. One part of the proposed reprojection network (RepNet) learns a mapping from a distribution of 2D poses to a distribution of 3D poses using an adversarial training approach. Another part of the network estimates the camera. This allows for the definition of a network layer that reprojects the estimated 3D pose back to 2D, which results in a reprojection loss function. Our experiments show that RepNet generalizes well to unknown data and outperforms state-of-the-art methods when applied to unseen data. Moreover, our implementation runs in real time on a standard desktop PC.
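The reprojection step described above can be illustrated with a minimal sketch. The abstract does not specify the camera model or the norm used, so this example assumes a 2x3 weak-perspective camera matrix and a Frobenius-norm loss; the function names `reproject` and `reprojection_loss` and all numeric values are hypothetical, and the code stands in for a network layer rather than reproducing the authors' implementation.

```python
import math


def reproject(camera, pose_3d):
    """Project 3D joints to 2D with a 2x3 camera matrix (assumed weak-perspective model).

    camera:  2x3 nested list, the estimated camera (assumption, not from the source)
    pose_3d: list of (x, y, z) joint positions
    """
    return [
        tuple(sum(row[k] * joint[k] for k in range(3)) for row in camera)
        for joint in pose_3d
    ]


def reprojection_loss(pose_2d, camera, pose_3d):
    """Frobenius norm of the difference between observed and reprojected 2D joints."""
    projected = reproject(camera, pose_3d)
    squared_error = sum(
        (u - pu) ** 2 + (v - pv) ** 2
        for (u, v), (pu, pv) in zip(pose_2d, projected)
    )
    return math.sqrt(squared_error)


# Hypothetical data: an orthographic camera and a three-joint "skeleton".
camera = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0]]
pose_3d = [(0.0, 0.0, 2.0), (1.0, 2.0, 3.0), (-1.0, 0.5, 1.0)]

# The observed 2D pose is generated from the 3D pose, so the loss is zero.
pose_2d = reproject(camera, pose_3d)
print(reprojection_loss(pose_2d, camera, pose_3d))
```

In a trained network, `camera` and `pose_3d` would be outputs of the camera and pose branches, and the loss would be differentiated with respect to both. Note that depth along the viewing axis does not affect this loss, which is why the reprojection constraint alone cannot determine the 3D pose and is combined with the adversarial term.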
