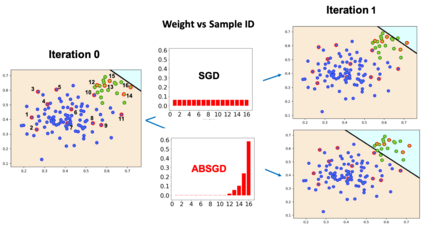

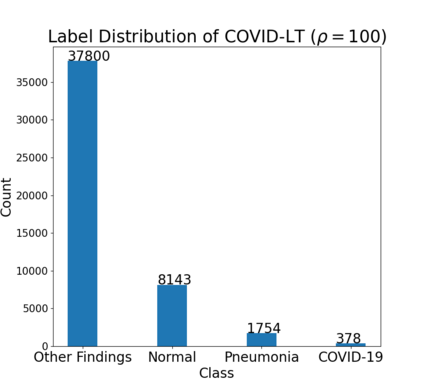

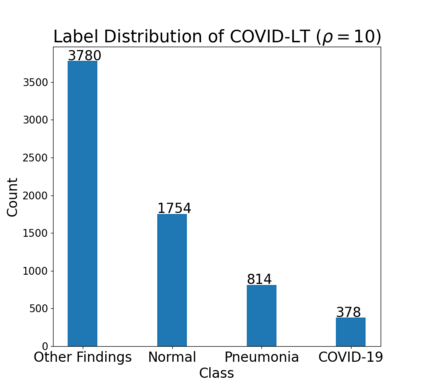

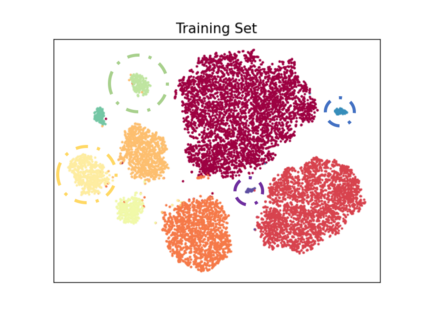

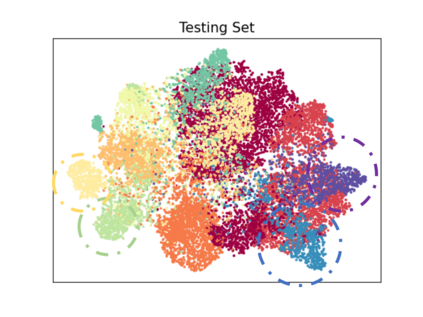

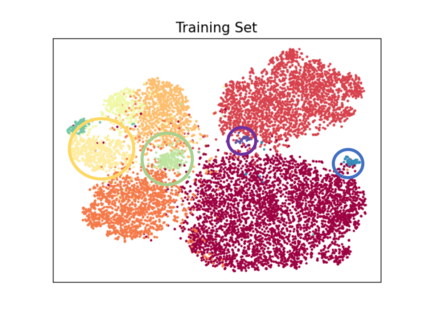

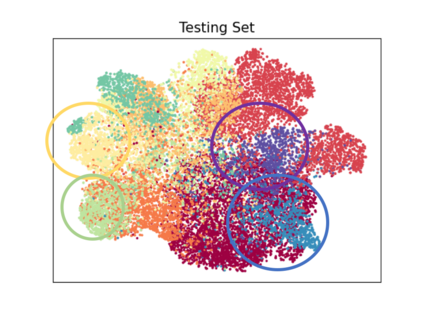

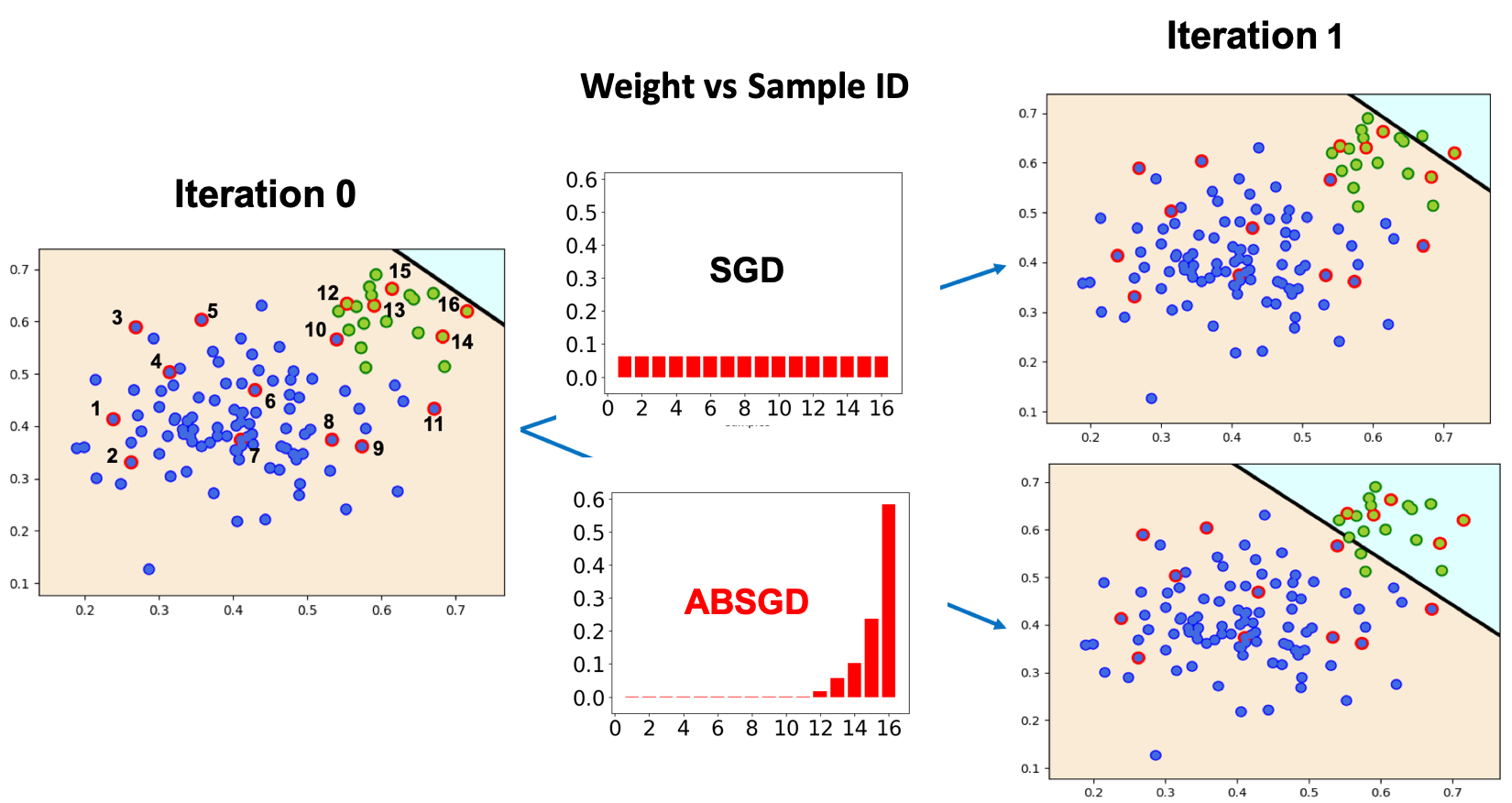

In this paper~\footnote{The original title is "Momentum SGD with Robust Weighting For Imbalanced Classification"}, we present a simple yet effective method (ABSGD) for addressing the data imbalance issue in deep learning. Our method is a simple modification to momentum SGD where we leverage an attentional mechanism to assign an individual importance weight to each gradient in the mini-batch. Unlike existing individual weighting methods that learn the individual weights by meta-learning on a separate balanced validation data, our weighting scheme is self-adaptive and is grounded in distributionally robust optimization. The weight of a sampled data is systematically proportional to exponential of a scaled loss value of the data, where the scaling factor is interpreted as the regularization parameter in the framework of information-regularized distributionally robust optimization. We employ a step damping strategy for the scaling factor to balance between the learning of feature extraction layers and the learning of the classifier layer. Compared with exiting meta-learning methods that require three backward propagations for computing mini-batch stochastic gradients at three different points at each iteration, our method is more efficient with only one backward propagation at each iteration as in standard deep learning methods. Compared with existing class-level weighting schemes, our method can be applied to online learning without any knowledge of class prior, while enjoying further performance boost in offline learning combined with existing class-level weighting schemes. Our empirical studies on several benchmark datasets also demonstrate the effectiveness of our proposed method

翻译:在本文“ footnote { ” 中, 我们最初的标题是“ 以粗略的重量加权的移动 SGD ” }, 我们提出了一个简单而有效的方法( ABSGD ), 以解决深层学习中的数据不平衡问题。 我们的方法是对动力 SGD 的简单修改, 我们利用一个关注机制来给微型批量中的每个梯度分配一个个人重要重量。 与现有的个体加权方法不同, 通过单独平衡的校验数据学习元数据来学习个体加权, 我们的加权方案是自我调整的, 并且以分布稳健的优化为基础。 抽样数据的权重与数据缩放损失值的指数成系统成比例, 将缩放系数解释为信息- 常规化分布优化的优化框架中的正规化参数 。 我们采用一个渐进的缩放战略来平衡地段提取层层层的学习和分层的学习。 与后期的元学习方法相比, 每三个不同的点需要三次反向传播来计算小相匹配的梯度梯度梯度, 。 在每三个不同的分点上,, 我们的测算方法中, 我们的比我们的现有的阶级的比, 我们的比级的比, 我们的等级的比, 我们的等级的等级的比, 我们的等级的比, 我们的等级的,, 级级级级级级级级级级级级级级的 学习 的 的 的 学习方法,,, 级级级级级的比 学习前级的比 的 级级级级级的 级的 级级级级级级级级级级级级级级级级级 学习 学习 学习 级的 级级级级 的 级级级级级级级级级级级级级级级级 学习 学习 学习 级的 级的 级的 级级级级级级级的 级级级级级级级级级级级的 级的 级的 级级级级级级级级级的 级 级 级的 级级级级级级级级级级级级级级级级级级级级 级 级的 级的 级 级 级的