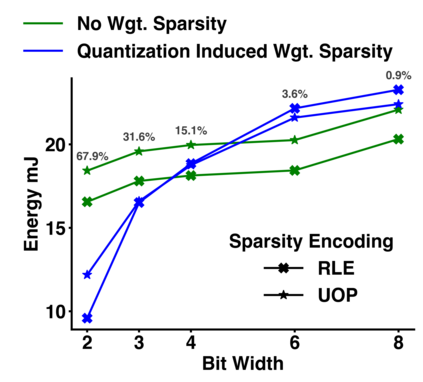

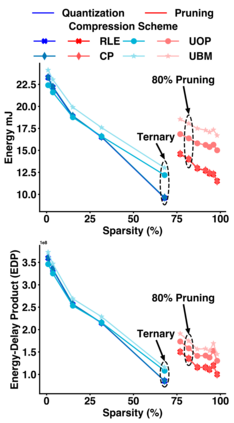

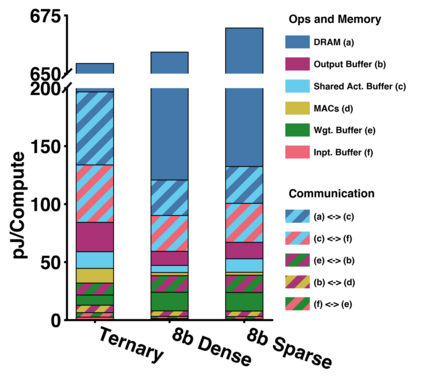

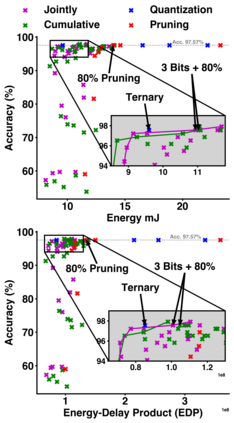

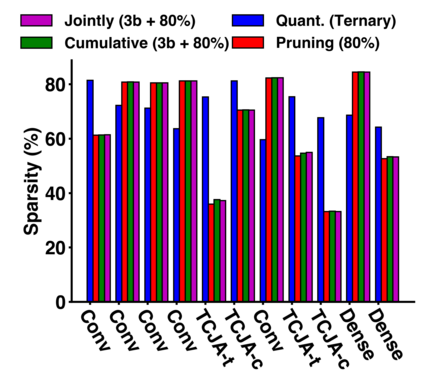

Energy efficient implementations and deployments of Spiking neural networks (SNNs) have been of great interest due to the possibility of developing artificial systems that can achieve the computational powers and energy efficiency of the biological brain. Efficient implementations of SNNs on modern digital hardware are also inspired by advances in machine learning and deep neural networks (DNNs). Two techniques widely employed in the efficient deployment of DNNs -- the quantization and pruning of parameters, can both compress the model size, reduce memory footprints, and facilitate low-latency execution. The interaction between quantization and pruning and how they might impact model performance on SNN accelerators is currently unknown. We study various combinations of pruning and quantization in isolation, cumulatively, and simultaneously (jointly) to a state-of-the-art SNN targeting gesture recognition for dynamic vision sensor cameras (DVS). We show that this state-of-the-art model is amenable to aggressive parameter quantization, not suffering from any loss in accuracy down to ternary weights. However, pruning only maintains iso-accuracy up to 80% sparsity, which results in 45% more energy than the best quantization on our architectural model. Applying both pruning and quantization can result in an accuracy loss to offer a favourable trade-off on the energy-accuracy Pareto-frontier for the given hardware configuration.

翻译:由于有可能开发能够实现生物大脑计算力和能源效率的人工系统,从而有可能开发能够实现生物大脑计算力和能源效率的人工系统,因此,高效地实施现代数字硬件系统也是机器学习和深神经网络的进步所激励的。在高效部署DNN(DVS)中广泛使用的两种技术 -- -- 量化和调整参数,既可以压缩模型大小,减少记忆足迹,也可以促进低纬度执行。四分化和修剪之间的相互作用,以及它们如何影响SNNN加速器的模型性能,目前尚不清楚。我们同时(同时)在分离、累积和(共同)的同时,将SNNNN的各种裁剪裁和四分化结合起来,以示对动态视觉传感器摄像头(DVSS)的姿态识别。我们显示,这种状态的模型既适合攻击性参数的四分化,又不会因精确度下降而导致四分权重力。然而,在模型中,精度的精确度只能维持45级的硬度结果。