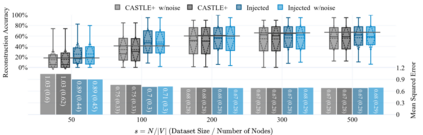

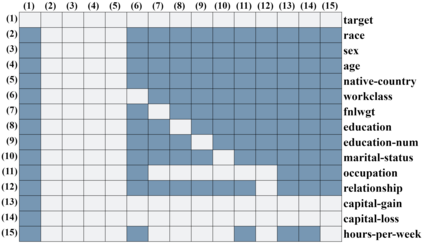

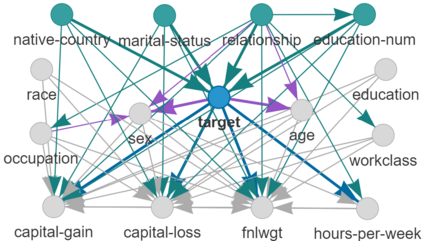

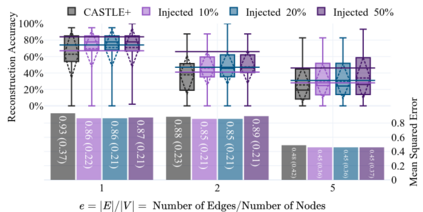

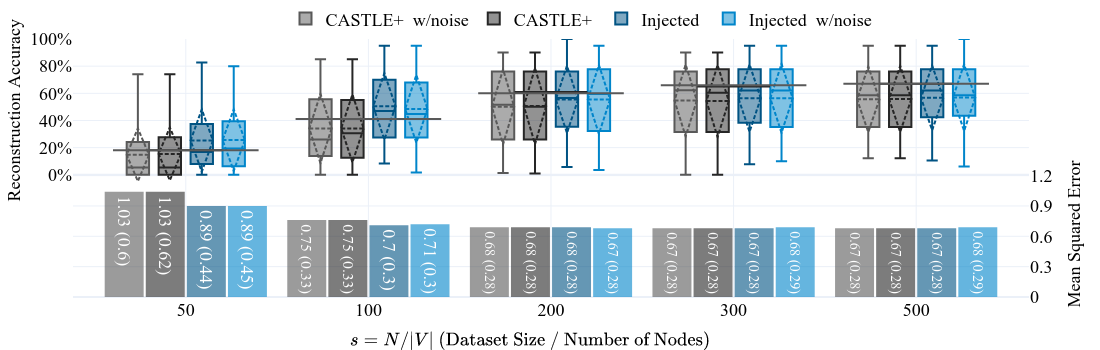

Neural networks have proven effective at solving a wide range of problems, but it is often unclear whether they learn any meaningful causal relationships. This poses a problem for the robustness of neural network models and for their use in high-stakes decisions. We propose a novel method that overcomes this issue by injecting knowledge, in the form of (possibly partial) causal graphs, into feed-forward neural networks, so that the learnt model is guaranteed to conform to the graph and hence adheres to expert knowledge. This knowledge may be given up front or during the learning process, improving the model through human-AI collaboration. We apply our method to synthetic and real (tabular) data, showing that it is robust against noise and can improve causal discovery and prediction performance in low-data regimes.
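The abstract does not say how the graph is injected. One common way to make a feed-forward network structurally conform to a causal graph is to mask its weights so that each predicted variable can only read from the inputs the graph allows. The sketch below illustrates that idea under stated assumptions and is not the authors' method. It assumes that the network predicts every variable from the others, and that partial expert knowledge is encoded as a set of forbidden edges (`forbidden`), with every other edge left for the model to learn. The names `build_mask` and `MaskedMLP` are hypothetical, and training is omitted.

```python
import math
import random


def build_mask(n_vars, forbidden):
    """Return mask[i][j] = 1 if variable i may influence variable j.

    Hypothetical encoding of partial expert knowledge: each pair (i, j) in
    `forbidden` is an edge the expert rules out; every other off-diagonal
    edge is left undecided, so the model may use it.
    """
    return [[0 if i == j or (i, j) in forbidden else 1 for j in range(n_vars)]
            for i in range(n_vars)]


class MaskedMLP:
    """One-hidden-layer net predicting each variable from its allowed inputs.

    Output j owns a private block of `hidden` units. Input i reaches block j
    only when mask[i][j] == 1, so output j cannot depend on a forbidden input
    whatever values the weights take (during or after training).
    """

    def __init__(self, mask, hidden=4, seed=0):
        rng = random.Random(seed)
        self.mask = mask
        self.n = len(mask)
        self.hidden = hidden
        # w1[j][h][i]: weight from input i to hidden unit h of output block j
        self.w1 = [[[rng.gauss(0.0, 1.0) for _ in range(self.n)]
                    for _ in range(hidden)] for _ in range(self.n)]
        # w2[j][h]: weight from hidden unit h of block j to output j
        self.w2 = [[rng.gauss(0.0, 1.0) for _ in range(hidden)]
                   for _ in range(self.n)]

    def forward(self, x):
        out = []
        for j in range(self.n):
            acc = 0.0
            for h in range(self.hidden):
                # Multiplying by the mask removes every edge the graph forbids
                pre = sum(self.w1[j][h][i] * self.mask[i][j] * x[i]
                          for i in range(self.n))
                acc += self.w2[j][h] * math.tanh(pre)
            out.append(acc)
        return out


if __name__ == "__main__":
    # Expert knowledge (hypothetical): X0 does not cause X2.
    mask = build_mask(3, forbidden={(0, 2)})
    net = MaskedMLP(mask)
    a = net.forward([1.0, 0.5, -0.3])
    b = net.forward([9.0, 0.5, -0.3])  # change only X0
    print(a[2] == b[2])  # True: the prediction for X2 ignores X0
```

Because the constraint is enforced by the architecture rather than by a penalty term, it holds exactly for any weights. Knowledge supplied during learning could, under this design, be applied by updating the mask between training steps.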

翻译:神经网络已证明在解决广泛的问题方面是有效的,但往往不清楚它们是否学会了任何有意义的因果关系:这对神经网络模型的稳健性及其用于高层决策提出了问题。我们提议了一种新的方法来解决这一问题,将知识以(可能部分)因果图的形式注入供养神经网络,从而保证所学模型符合图表,从而遵守专家知识。这种知识可以先于或学习过程,通过人类-AI合作来改进模型。我们采用的方法是合成的和真实的(表层)数据,表明它对于噪音是稳健的,可以改进低数据系统中的因果发现和预测性能。