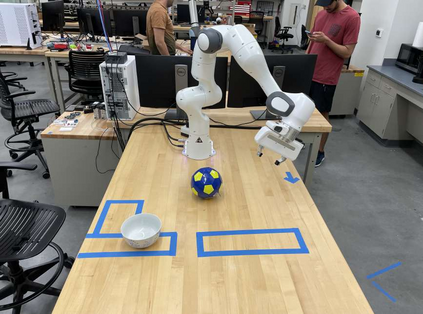

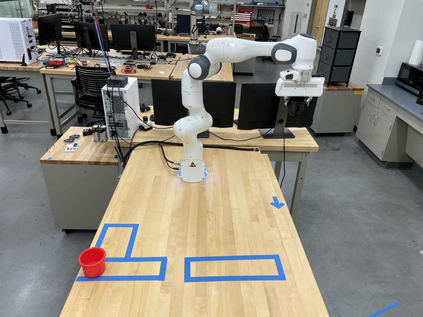

Humans can leverage physical interaction to teach robot arms. This physical interaction takes multiple forms depending on the task, the user, and what the robot has learned so far. State-of-the-art approaches focus on learning from a single modality, or combine multiple interaction types by assuming that the robot has prior information about the human's intended task. By contrast, in this paper we introduce an algorithmic formalism that unites learning from demonstrations, corrections, and preferences. Our approach makes no assumptions about the tasks the human wants to teach the robot; instead, we learn a reward model from scratch by comparing the human's inputs to nearby alternatives. We first derive a loss function that trains an ensemble of reward models to match the human's demonstrations, corrections, and preferences. The type and order of feedback is up to the human teacher: we enable the robot to collect this feedback passively or actively. We then apply constrained optimization to convert our learned reward into a desired robot trajectory. Through simulations and a user study we demonstrate that our proposed approach more accurately learns manipulation tasks from physical human interaction than existing baselines, particularly when the robot is faced with new or unexpected objectives. Videos of our user study are available at: https://youtu.be/FSUJsTYvEKU

翻译:人类可以利用物理互动来教授机器人的手臂。 这种物理互动可以根据任务、 用户和机器人迄今所学到的知识, 以多种形式进行物理互动。 这种物理互动可以根据任务、 用户和机器人迄今所学的东西, 采取多种形式。 最先进的方法侧重于从单一模式中学习, 或者通过假设机器人事先掌握了有关人类预定任务的信息而将多重互动类型结合起来。 相反, 在本文中, 我们引入了一种算法形式主义, 将从演示、 校正和偏好中学习, 将人类想要学习的演示、 校正和偏好结合起来。 我们的方法不会对人类想要教机器人的任务做出任何假设; 相反, 我们的方法通过比较人类的投入与附近的其他选择, 来从零头学到一个奖励模式。 我们首先产生一个损失函数, 来训练奖励模型的组合, 以匹配人类的演示、 校正、 和偏好。 反馈的类型和顺序由人类老师决定: 我们让机器人能够被动或积极地收集这种反馈。 然后我们应用有限的优化来将我们学到的奖励转换为理想的机械轨道。 我们通过模拟和用户研究来证明我们提议的方法比现有基线更准确地学习人类的操作任务, 而不是当机器人面对新的或意外目标时, MATYU/ TYU 。 我们的视频的用户研究是可用的。