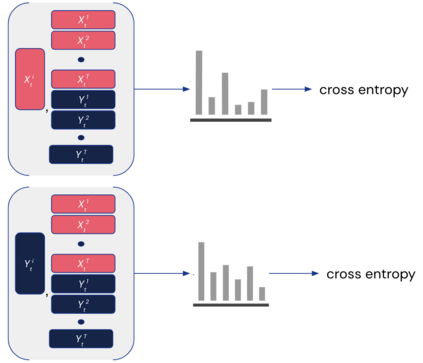

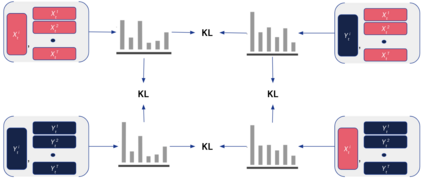

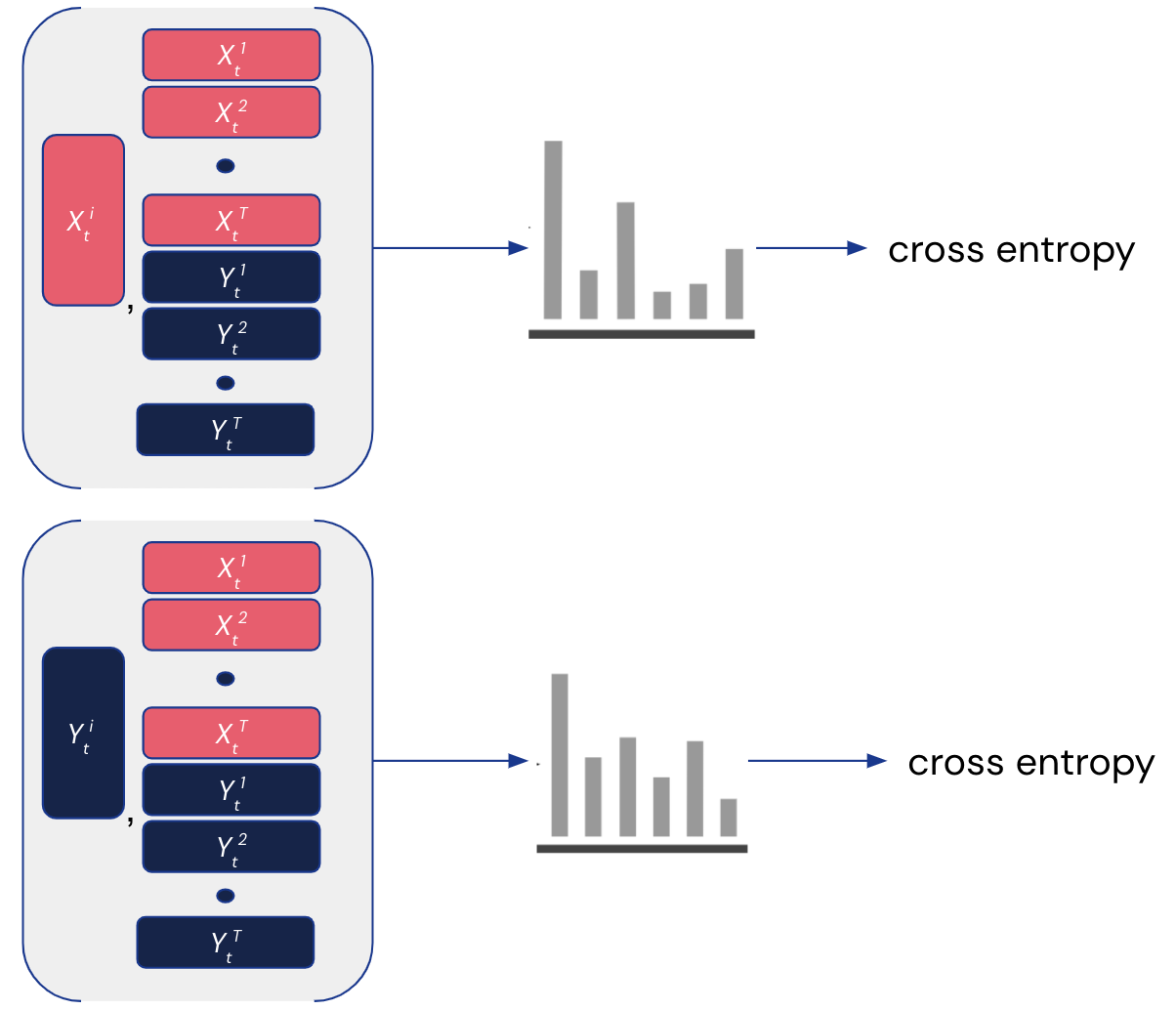

Many reinforcement learning (RL) agents require a large amount of experience to solve tasks. We propose Contrastive BERT for RL (CoBERL), an agent that combines a new contrastive loss with a hybrid LSTM-transformer architecture to improve data efficiency. CoBERL enables efficient, robust learning from pixels across a wide range of domains. We use bidirectional masked prediction in combination with a generalization of recent contrastive methods to learn better representations for transformers in RL, without the need for hand-engineered data augmentations. We find that CoBERL consistently improves performance across the full Atari suite, a set of control tasks, and a challenging 3D environment.
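To make the idea of pairing masked prediction with a contrastive objective concrete, here is a minimal sketch in plain Python. It is not CoBERL's loss: the abstract only says the loss generalizes recent contrastive methods, so the sketch substitutes a generic InfoNCE-style objective. The function name `masked_contrastive_loss`, the `temperature` value, and the choice of the other masked timesteps as negatives are all assumptions made for illustration.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(dot(v, v)) or 1.0
    return [a / norm for a in v]


def masked_contrastive_loss(predictions, targets, masked_positions, temperature=0.1):
    """Generic InfoNCE-style loss over masked timesteps (illustrative only).

    predictions: per-timestep vectors computed from the masked sequence.
    targets: per-timestep vectors computed from the unmasked inputs.
    masked_positions: indices of the timesteps that were masked.

    For each masked timestep t, targets[t] is the positive; the targets at
    the other masked timesteps serve as negatives (an assumption of this
    sketch, not something stated in the abstract).
    """
    if not masked_positions:
        return 0.0
    preds = [normalize(predictions[t]) for t in masked_positions]
    targs = [normalize(targets[t]) for t in masked_positions]
    total = 0.0
    for i, p in enumerate(preds):
        # Cosine similarity between this prediction and every candidate target.
        logits = [dot(p, q) / temperature for q in targs]
        # Log-sum-exp with max subtraction for numerical stability.
        max_logit = max(logits)
        log_norm = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        # Cross-entropy with the matching timestep as the correct class.
        total += log_norm - logits[i]
    return total / len(preds)


# Usage: predictions that match their targets score lower than swapped ones.
targets = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
swapped = [targets[1], targets[0], targets[2]]
loss_matched = masked_contrastive_loss(targets, targets, [0, 1])
loss_swapped = masked_contrastive_loss(swapped, targets, [0, 1])
```

Because the negatives come from the same sequence, a sketch like this needs no image augmentations to form contrastive pairs, which matches the spirit of the claim above. How CoBERL actually builds its positives and negatives is not specified in this text.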
