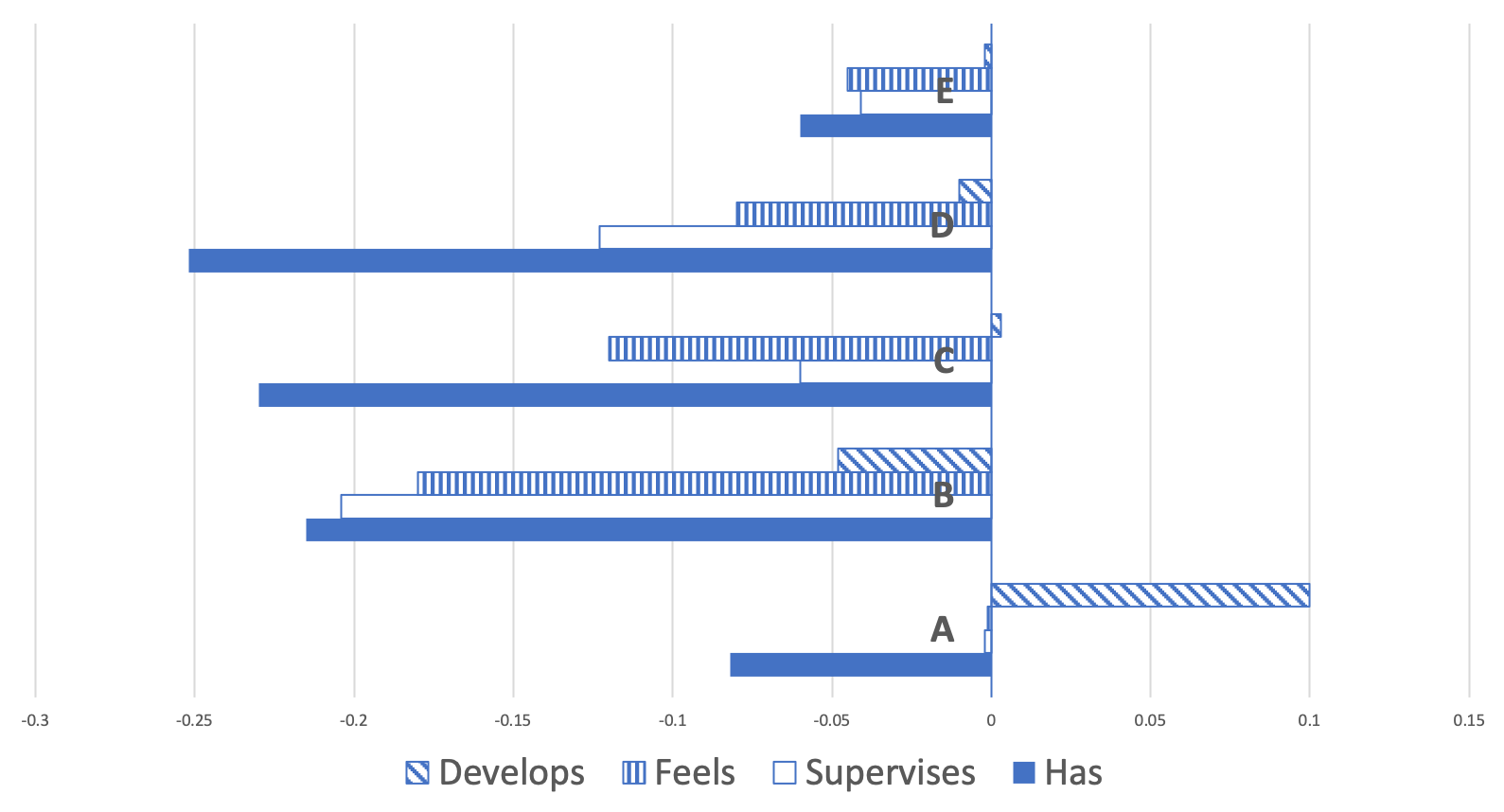

Much of the world's population experiences some form of disability during their lifetime. Natural language processing (NLP) systems must therefore be designed with care so that they do not inadvertently perpetuate ableist bias, i.e., prejudice against people with disabilities that favors those with typical abilities. We report on several analyses based on the word predictions of a large-scale BERT language model. Statistically significant results show that people with disabilities can be disadvantaged. We also explore overlapping forms of discrimination related to interconnected gender and race identities.
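To make the notion of a statistically significant difference in word predictions concrete, the sketch below shows one way such a comparison could be set up. It is an illustration under stated assumptions, not the procedure used in this work: the word lists are made-up placeholders standing in for a masked language model's predicted words, `TOY_LEXICON` is a hypothetical sentiment lexicon, and the permutation test is a generic choice of significance test.

```python
import random

# Hypothetical toy lexicon (an assumption): word -> sentiment score in [-1, 1].
TOY_LEXICON = {
    "happy": 0.8, "kind": 0.7, "friendly": 0.7, "brave": 0.6,
    "busy": 0.0, "late": -0.2, "tired": -0.3,
    "alone": -0.5, "sad": -0.7, "sick": -0.6,
}


def score_words(words, lexicon):
    """Map each word to its lexicon score; unknown words count as neutral (0.0)."""
    return [lexicon.get(w, 0.0) for w in words]


def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference of means of a and b.

    Returns (observed_difference, p_value).
    """
    rng = random.Random(seed)
    n_a = len(a)
    observed = sum(a) / n_a - sum(b) / len(b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / len(b)
        # Small tolerance guards against floating-point rounding.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return observed, (extreme + 1) / (n_resamples + 1)


if __name__ == "__main__":
    # Placeholder word lists (NOT real model output): in practice these would
    # be the words a masked language model predicts for a template sentence
    # without and with a mention of disability.
    control_words = ["friendly", "busy", "happy", "kind", "late", "tired"]
    mention_words = ["sick", "tired", "alone", "brave", "sad", "busy"]

    diff, p = permutation_test(
        score_words(control_words, TOY_LEXICON),
        score_words(mention_words, TOY_LEXICON),
    )
    print(f"mean score difference = {diff:.3f}, p = {p:.4f}")
```

With real data, the two lists would be replaced by predictions collected over many template sentences, and the lexicon by a validated sentiment resource.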
