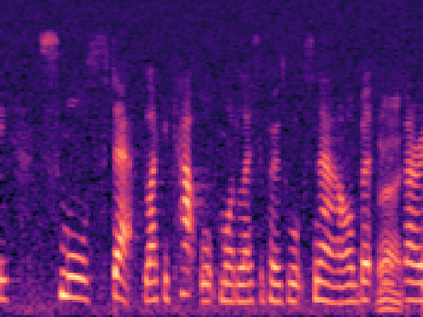

Deep learning techniques have considerably improved speech processing in recent years. Speaker representations extracted by deep learning models are used in a wide range of tasks, such as speaker recognition and speech emotion recognition. Attention mechanisms have started to play an important role in improving deep learning models for speech processing. However, although important speaker-related information can be embedded in individual frequency bins of the input spectral representations, current attention models are unable to attend to such fine-grained information items. In this paper, we propose Fine-grained Early Frequency Attention (FEFA) for speaker representation learning. FEFA is a simple and lightweight model that can be integrated into various CNN pipelines and is capable of focusing on information items as small as individual frequency bins. We evaluate the proposed model on three tasks: speaker recognition, speech emotion recognition, and spoken digit recognition. For our experiments, we use three widely used public datasets: VoxCeleb, IEMOCAP, and the Free Spoken Digit Dataset. We attach FEFA to several prominent deep learning models and evaluate its impact on their final performance. We also compare our approach with other related work in the area. Our experiments show that adding FEFA to different CNN architectures consistently improves performance by substantial margins, and that the models equipped with FEFA outperform all the other attentive models. Finally, we test our model under different levels of added noise, showing improved robustness and lower sensitivity to noise than the backbone networks.
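
To make the idea of attending to individual frequency bins concrete, here is a minimal sketch of a generic per-bin attention gate applied to a spectrogram before it enters a CNN backbone. It is an illustrative assumption, not the authors' FEFA architecture: the function names, the linear scoring map `weights`/`bias`, and the sigmoid gating are hypothetical choices. Each time frame of F bins is mapped to F scores, squashed into (0, 1), and used to rescale each bin independently.

```python
import math
from typing import List

# Spectrogram layout assumed here: rows = time frames, columns = frequency bins.
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function (avoids overflow for large |x|)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def frequency_bin_attention(spec: Matrix, weights: Matrix, bias: List[float]) -> Matrix:
    """Hypothetical per-bin attention gate (not the published FEFA design).

    For each frame x_t with F bins: scores s_t = W x_t + b (W is F x F),
    attention a_t = sigmoid(s_t), output y_t = a_t * x_t (element-wise),
    so every frequency bin receives its own weight in (0, 1).
    """
    n_bins = len(bias)
    if len(weights) != n_bins or any(len(row) != n_bins for row in weights):
        raise ValueError("weights must be an F x F matrix matching len(bias)")
    out: Matrix = []
    for frame in spec:
        if len(frame) != n_bins:
            raise ValueError("frame length must equal the number of frequency bins")
        # One score per output bin, computed from the whole frame.
        scores = [sum(w * x for w, x in zip(row, frame)) + b for row, b in zip(weights, bias)]
        # Rescale each bin by its own attention weight.
        out.append([sigmoid(s) * x for s, x in zip(scores, frame)])
    return out


if __name__ == "__main__":
    spec = [[1.0, 2.0], [3.0, 4.0]]
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    # With zero weights and bias, every bin gets weight sigmoid(0) = 0.5.
    print(frequency_bin_attention(spec, zeros, [0.0, 0.0]))
```

In a trained model, `weights` and `bias` would presumably be learned jointly with the backbone's loss, and the gated spectrogram would simply replace the raw input to the CNN, which is what makes such a module easy to attach to existing pipelines.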
