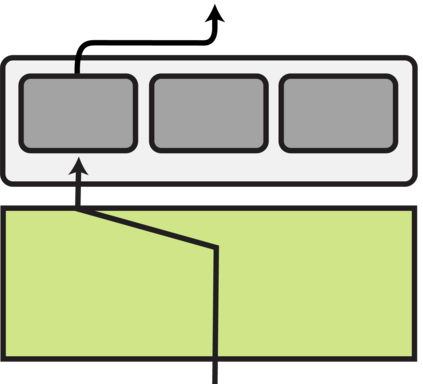

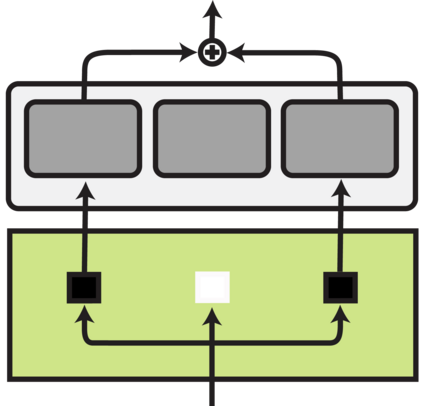

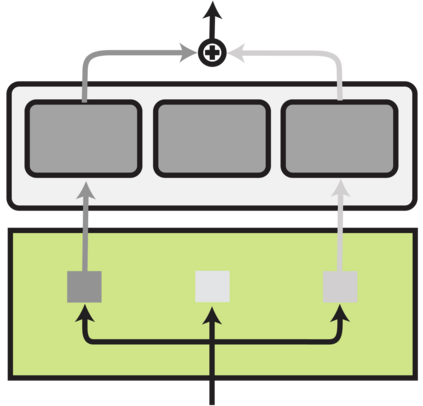

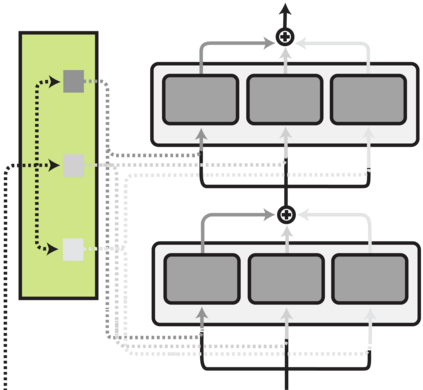

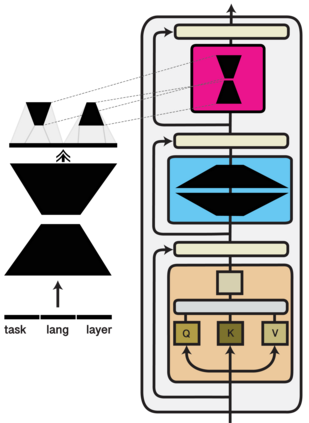

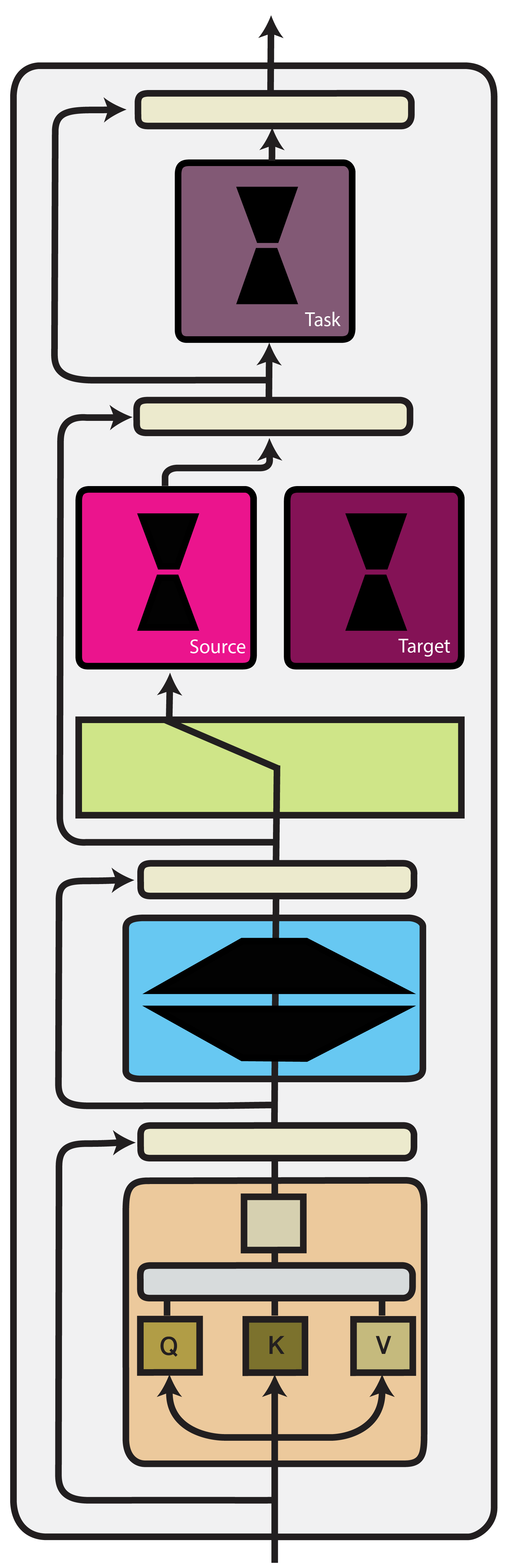

Transfer learning has recently become the dominant paradigm of machine learning. Pre-trained models fine-tuned for downstream tasks achieve better performance with fewer labelled examples. Nonetheless, it remains unclear how to develop models that specialise towards multiple tasks without incurring negative interference and that generalise systematically to non-identically distributed tasks. Modular deep learning has emerged as a promising solution to these challenges. In this framework, units of computation are often implemented as autonomous parameter-efficient modules. Information is conditionally routed to a subset of modules and subsequently aggregated. These properties enable positive transfer and systematic generalisation by separating computation from routing and by updating modules locally. We offer a survey of modular architectures, providing a unified view over several threads of research that evolved independently in the scientific literature. Moreover, we explore various additional purposes of modularity, including scaling language models, causal inference, programme induction, and planning in reinforcement learning. Finally, we report various concrete applications where modularity has been successfully deployed, such as cross-lingual and cross-modal knowledge transfer. Talks and projects related to this survey are available at https://www.modulardeeplearning.com/.
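To make the "route to a subset of modules, then aggregate" idea concrete, here is a minimal sketch in plain Python. It is one illustrative instantiation under stated assumptions, not the survey's method or any library's API: the modules are assumed to be small bottleneck adapters added residually to a frozen representation, and the router is assumed to be a learned linear scorer with top-k selection (a mixture-of-experts style choice). The names `Adapter`, `ModularLayer`, `route`, `rank` and `top_k` are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: each inner list is one row


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class Adapter:
    """Hypothetical parameter-efficient module: up(relu(down(h))).

    Only these two small matrices would be trained; the backbone stays frozen.
    """

    def __init__(self, dim: int, rank: int, rng: random.Random):
        self.down = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(rank)]
        self.up = [[rng.gauss(0, 0.1) for _ in range(rank)] for _ in range(dim)]

    def __call__(self, h: Vector) -> Vector:
        z = [max(0.0, x) for x in matvec(self.down, h)]  # rank-sized bottleneck
        return matvec(self.up, z)  # project back to dim


class ModularLayer:
    """Hypothetical layer: routing is kept separate from the modules' computation."""

    def __init__(self, dim: int, num_modules: int, rank: int, top_k: int, seed: int = 0):
        rng = random.Random(seed)
        self.modules = [Adapter(dim, rank, rng) for _ in range(num_modules)]
        # Assumed router: one linear score per module, computed from the input.
        self.router = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(num_modules)]
        self.top_k = top_k

    def route(self, h: Vector) -> Dict[int, float]:
        """Pick the top_k most probable modules and renormalise their weights."""
        probs = softmax(matvec(self.router, h))
        ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        chosen = ranked[: self.top_k]
        mass = sum(probs[i] for i in chosen)
        return {i: probs[i] / mass for i in chosen}

    def __call__(self, h: Vector) -> Tuple[Vector, Dict[int, float]]:
        weights = self.route(h)
        out = list(h)  # residual path: the frozen representation passes through
        for i, w in weights.items():  # only the selected modules are executed
            delta = self.modules[i](h)
            out = [o + w * d for o, d in zip(out, delta)]  # weighted aggregation
        return out, weights


layer = ModularLayer(dim=8, num_modules=4, rank=2, top_k=2)
h = [0.5, -1.0, 0.3, 0.0, 1.2, -0.7, 0.1, 0.9]
out, weights = layer(h)
print(len(out))  # 8
print(weights)   # the two active module indices and their routing weights
```

Because the router only decides *which* modules run and how their outputs are weighted, a module can be updated (or swapped in for a new task or language) without touching the others, which is the locality property the abstract refers to. Other routing schemes, such as fixed task-level routing or soft routing over all modules, would replace only `route`.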
