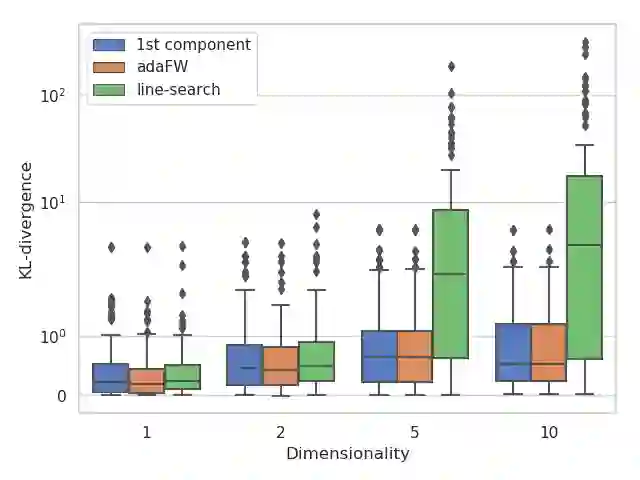

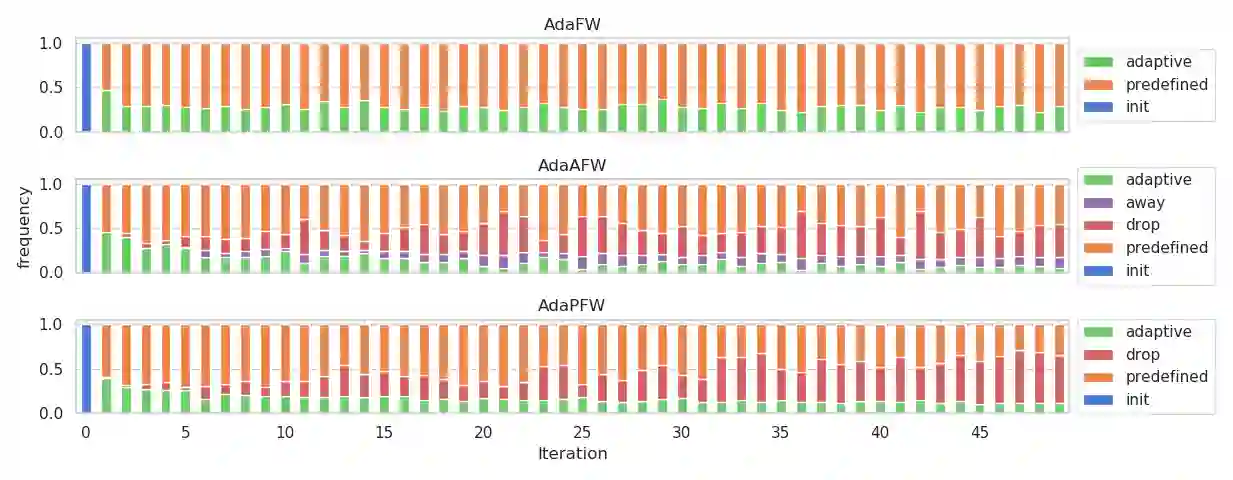

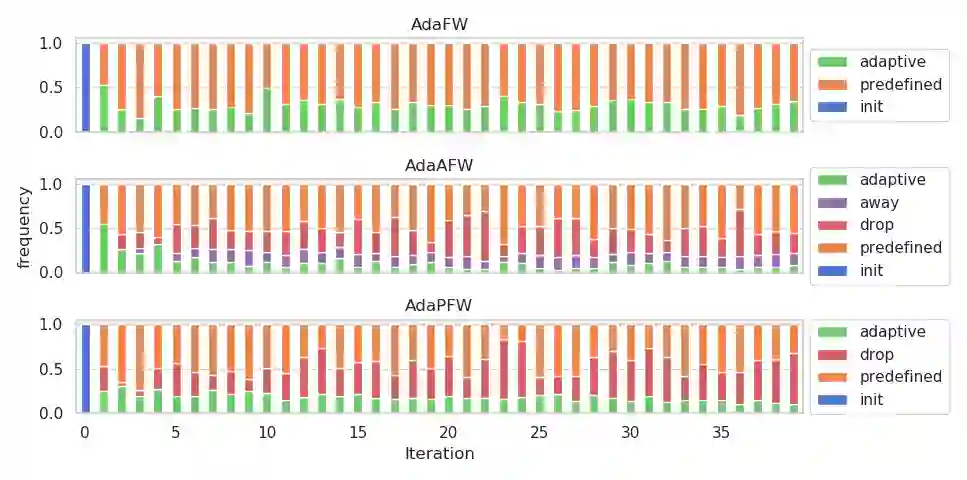

Variational Inference trades off the capacity of the variational family against the tractability of finding an approximate posterior distribution. Boosting Variational Inference instead allows practitioners to obtain increasingly accurate posterior approximations by spending more compute. The main obstacle to widespread adoption of Boosting Variational Inference is the amount of resources needed to improve on a strong Variational Inference baseline. In our work, we trace this limitation back to the global curvature of the KL-divergence. We characterize how the global curvature affects time and memory consumption, address the problem using the notion of local curvature, and provide a novel approximate backtracking algorithm for estimating local curvature. We give new theoretical convergence rates for our algorithms and provide experimental validation on synthetic and real-world datasets.
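Boosting Variational Inference is commonly analyzed as a Frank-Wolfe method over the convex hull of the variational family, where the step size depends on a curvature constant. The abstract does not spell out the algorithm. As a rough illustration only, the sketch below shows a generic Frank-Wolfe loop with a standard *exact* backtracking estimate of the local curvature constant `L`. It is **not** the paper's approximate backtracking algorithm: it runs on a toy quadratic over the probability simplex rather than on the KL-divergence. The names `frank_wolfe_backtracking` and `lmo_simplex` and the parameters `L0`, `tau`, and `eta` are hypothetical.

```python
import numpy as np


def lmo_simplex(grad):
    """Linear minimization oracle over the probability simplex (hypothetical helper).

    Returns the vertex e_i minimizing <grad, s>, i.e. a one-hot vector at argmin(grad).
    """
    s = np.zeros_like(grad)
    s[np.argmin(grad)] = 1.0
    return s


def frank_wolfe_backtracking(f, grad_f, lmo, x0, L0=1.0, tau=2.0, eta=0.9,
                             max_iter=1000, tol=1e-12):
    """Frank-Wolfe with a backtracking estimate of the local curvature constant L.

    Each iteration first shrinks L by `eta` (optimistic guess of a flatter region),
    then multiplies it by `tau` until the quadratic upper bound with constant L
    holds at the trial point.
    """
    x = np.asarray(x0, dtype=float)
    fx = f(x)
    L = L0
    history = [fx]
    for _ in range(max_iter):
        g = grad_f(x)
        d = lmo(g) - x                  # Frank-Wolfe direction toward the new vertex
        gap = -g @ d                    # Frank-Wolfe duality gap, >= f(x) - f*
        if gap <= tol:
            break
        d_norm2 = d @ d
        L *= eta                        # try a smaller local curvature first
        while True:
            gamma = min(gap / (L * d_norm2), 1.0)
            f_trial = f(x + gamma * d)
            # Sufficient decrease: f(x + gamma d) <= f(x) - gamma*gap + (L/2) gamma^2 ||d||^2
            if f_trial <= fx - gamma * gap + 0.5 * gamma**2 * L * d_norm2:
                break
            L *= tau                    # bound violated: curvature was underestimated
        x = x + gamma * d
        fx = f_trial
        history.append(fx)
    return x, history


# Toy problem (an assumption, not the KL objective): find the point of the
# simplex closest to c; since c lies in the simplex, the optimum is c itself.
c = np.array([0.2, 0.3, 0.5])
f = lambda x: 0.5 * np.sum((x - c) ** 2)
grad_f = lambda x: x - c
x_hat, history = frank_wolfe_backtracking(f, grad_f, lmo_simplex, x0=[1.0, 0.0, 0.0])
print(x_hat, history[-1])
```

Shrinking `L` by `eta` before each step lets the estimate drop where the objective is locally flatter, which gives longer steps than a single global curvature bound would allow. Multiplying by `tau` restores the bound when a trial step overshoots. With a KL objective, `f` would typically only be available through sampling, so the exact test above would not apply as written. This abstract does not describe how the authors approximate it.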
