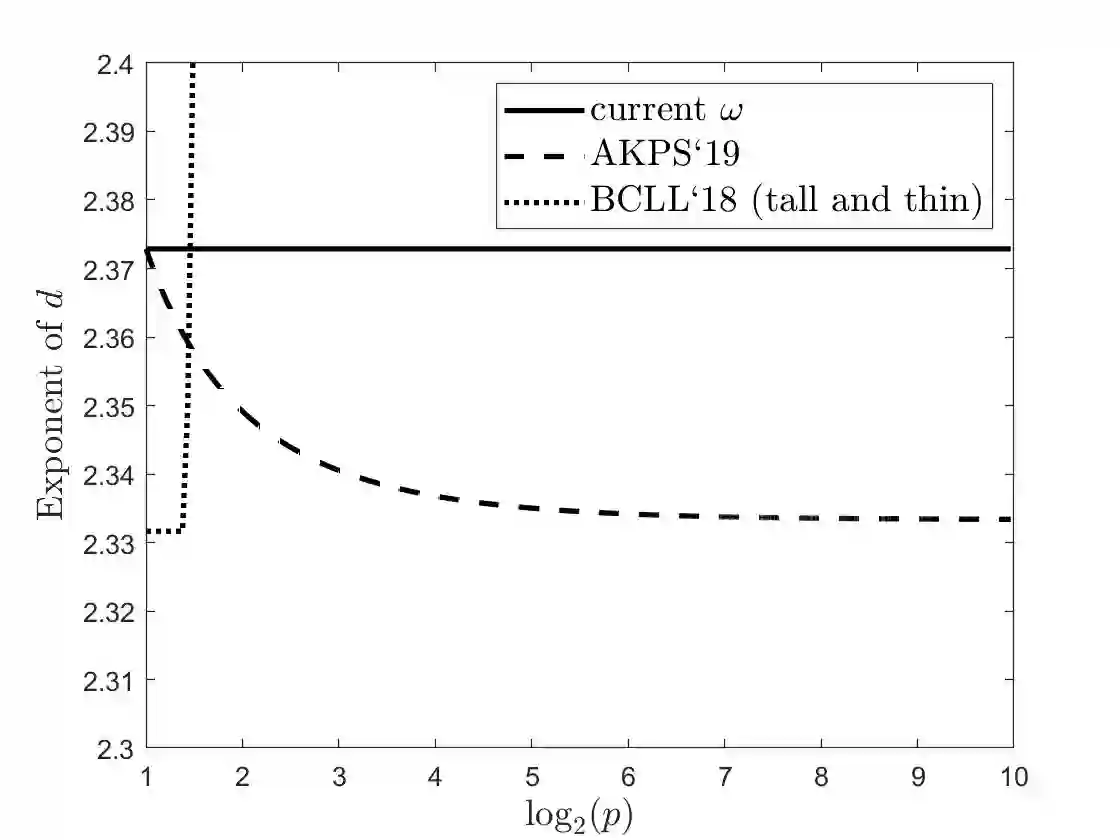

The best known running time for regression is nearly linear in the cost of matrix multiplication/inversion. Here we show that algorithms for $2$-norm regression (i.e., standard linear regression), as well as $p$-norm regression for $1 < p < \infty$, can be improved to run below the matrix multiplication threshold for sufficiently sparse matrices. We also show that, for some values of $p$, the dependence on dimension in input-sparsity time algorithms can be improved below $d^\omega$, where $\omega$ is the matrix multiplication exponent, for tall-and-thin row-sparse matrices.
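For context, $p$-norm regression asks for $\min_x \|Ax - b\|_p$. The Python sketch below shows the problem using classical iteratively reweighted least squares (IRLS). It is *not* the algorithm from this work. Each iteration solves a dense weighted $2$-norm problem with `numpy.linalg.lstsq`, so it sits at the matrix-multiplication-bound cost the abstract aims to beat. The function name `pnorm_regression_irls` and its parameters `iters` and `eps` are hypothetical. The sketch is limited to $1 < p \le 2$, because plain IRLS without damping is not guaranteed to converge for $p > 2$.

```python
import numpy as np


def pnorm_regression_irls(A, b, p, iters=100, eps=1e-8):
    """Approximately minimize ||A x - b||_p for 1 < p <= 2 via classical IRLS.

    Hypothetical illustration only: each step solves a weighted 2-norm
    problem with weights w_i = max(|r_i|, eps)^(p - 2), where r = A x - b.
    """
    if not (1 < p <= 2):
        raise ValueError("this sketch only handles 1 < p <= 2")
    # Start from the ordinary least-squares (2-norm) solution.
    x = np.linalg.lstsq(A, b, rcond=None)[0]
    for _ in range(iters):
        r = A @ x - b
        # Clamp tiny residuals so the weights |r_i|^(p-2) stay finite.
        w = np.maximum(np.abs(r), eps) ** (p - 2)
        sw = np.sqrt(w)
        # Weighted least squares: minimize sum_i w_i * (a_i^T x - b_i)^2.
        x_new = np.linalg.lstsq(sw[:, None] * A, sw * b, rcond=None)[0]
        converged = np.linalg.norm(x_new - x) <= 1e-12 * (1 + np.linalg.norm(x))
        x = x_new
        if converged:
            break
    return x


# Usage: heavy-tailed noise, where a p < 2 fit is less outlier-sensitive.
rng = np.random.default_rng(0)
A = rng.standard_normal((200, 5))
b = A @ np.ones(5) + rng.standard_t(df=2, size=200)
x_p = pnorm_regression_irls(A, b, p=1.5)
print(np.linalg.norm(A @ x_p - b, 1.5))
```

For $p \le 2$, each reweighted step minimizes a quadratic upper bound on $\sum_i |r_i|^p$. This is why the objective does not increase from the least-squares starting point, apart from the effect of the `eps` clamp.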

