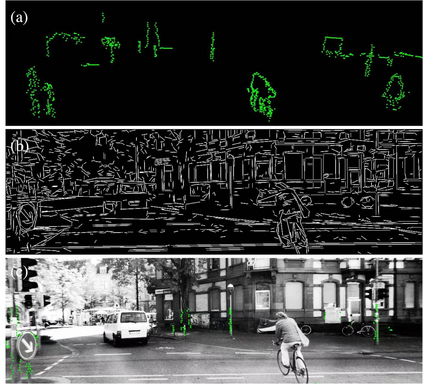

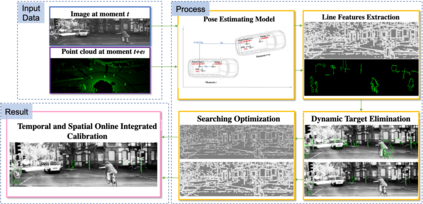

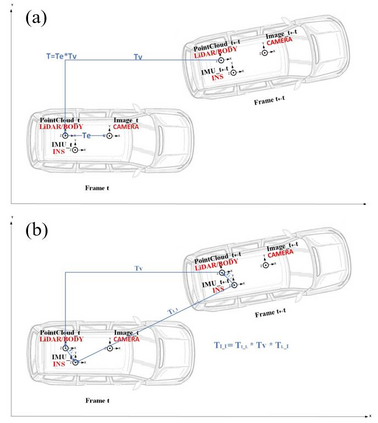

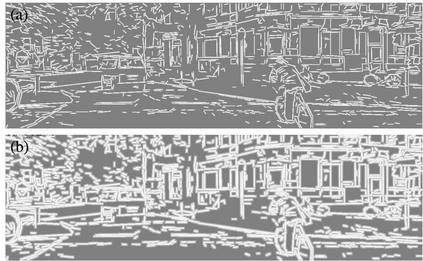

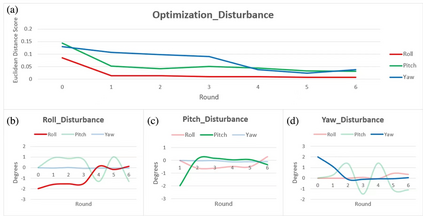

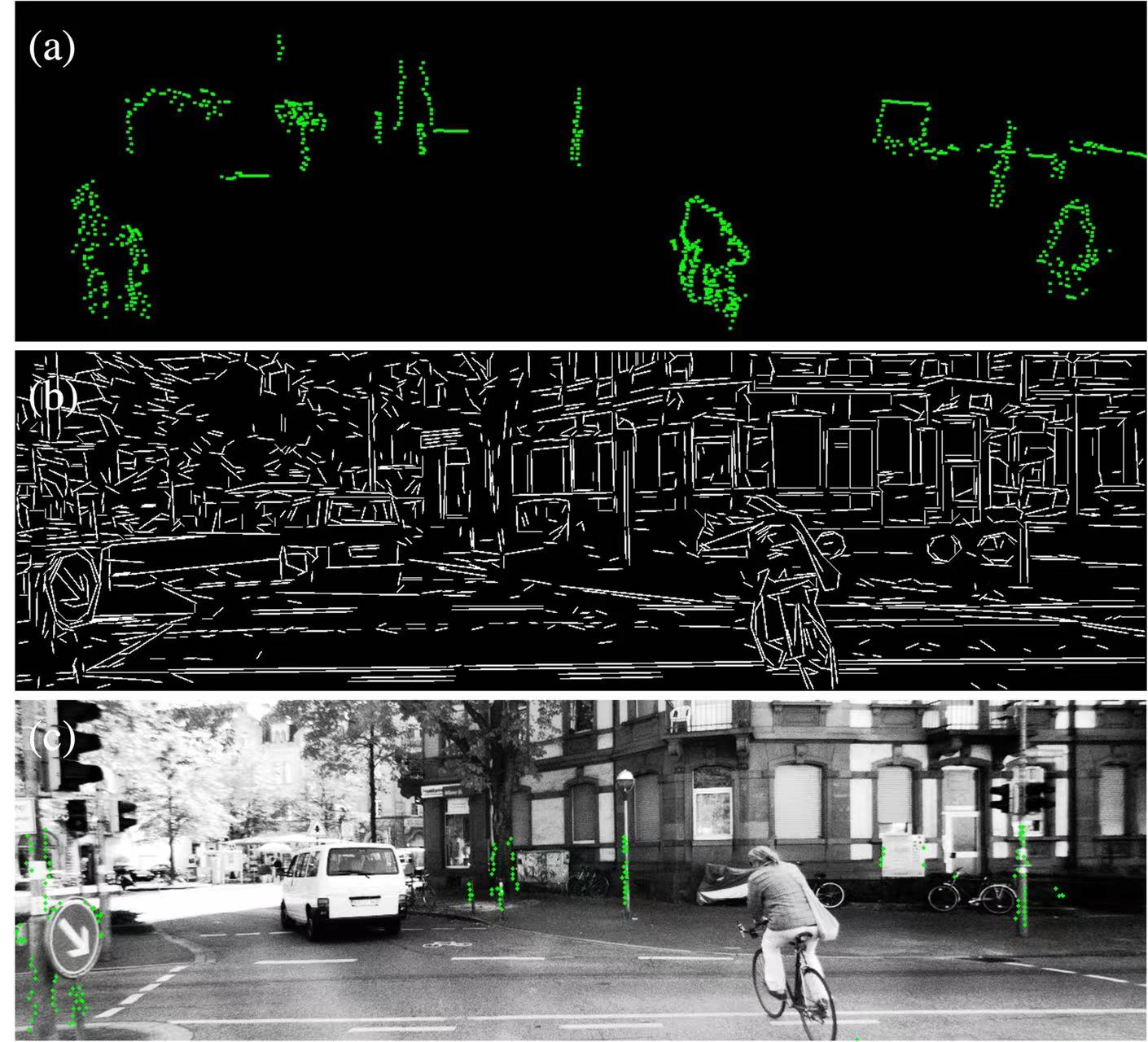

Although cameras and LiDAR are widely used in most assisted and autonomous driving systems, only a few works have addressed temporal synchronization and extrinsic calibration of the two sensors jointly for online sensor data fusion. Existing temporal and spatial calibration techniques suffer from a lack of relevance and of real-time capability. In this paper, we introduce a pose estimation model and environmentally robust line feature extraction to improve the relevance of data fusion and the ability to correct errors online and instantly. Dynamic target elimination seeks an optimal policy based on the correspondence of point cloud matching between adjacent moments. The search optimization process aims to provide accurate parameters with both computational accuracy and efficiency. To demonstrate the benefits of this method, we evaluate it on the KITTI benchmark against ground-truth values. In online experiments, our approach improves temporal calibration accuracy by 38.5% over the soft synchronization method. In spatial calibration, our approach automatically corrects disturbance errors within 0.4 seconds and achieves an accuracy of 0.3 degrees. This work can promote the research and application of sensor fusion.
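For context, the soft synchronization baseline referred to in the abstract is commonly realized as nearest-timestamp matching between the two sensor streams. The following is a minimal sketch of that generic idea only, not the method proposed in the paper. The function name `soft_sync`, the `max_gap` threshold, and the example timestamps are all hypothetical and chosen for illustration.

```python
from bisect import bisect_left


def soft_sync(camera_stamps, lidar_stamps, max_gap=0.05):
    """Pair each camera frame with the nearest LiDAR sweep by timestamp.

    Both inputs are lists of timestamps in seconds, sorted ascending.
    Pairs whose time gap exceeds max_gap (an assumed threshold) are dropped.
    Returns a list of (camera_index, lidar_index, gap_seconds) tuples.
    """
    pairs = []
    if not lidar_stamps:
        return pairs
    for cam_idx, t in enumerate(camera_stamps):
        # Position of the first LiDAR timestamp that is >= t.
        pos = bisect_left(lidar_stamps, t)
        # The nearest neighbour is either just before or at that position.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(lidar_stamps)]
        best = min(candidates, key=lambda i: abs(lidar_stamps[i] - t))
        gap = abs(lidar_stamps[best] - t)
        if gap <= max_gap:
            pairs.append((cam_idx, best, gap))
    return pairs


if __name__ == "__main__":
    # Hypothetical timestamps, for illustration only.
    camera = [0.00, 0.10, 0.20]
    lidar = [0.02, 0.11, 0.35]
    for cam_idx, lidar_idx, gap in soft_sync(camera, lidar):
        print(f"camera[{cam_idx}] <-> lidar[{lidar_idx}] gap={gap:.3f}s")
```

Such matching leaves a residual time offset of up to half a sensor period between paired frames, which is the kind of error a temporal calibration step is meant to reduce.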
