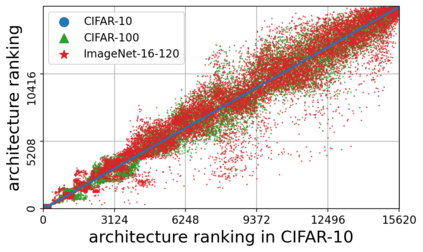

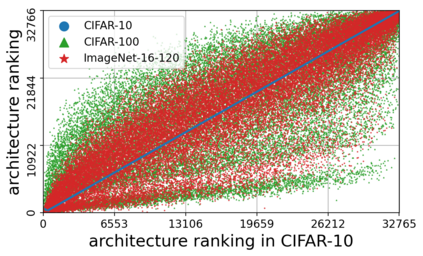

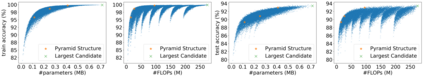

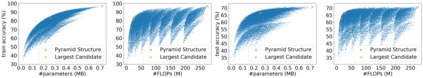

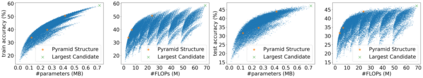

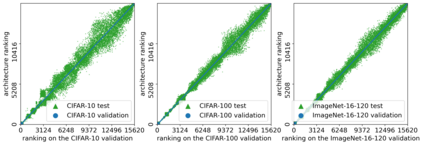

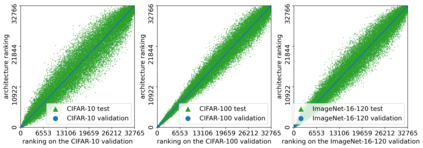

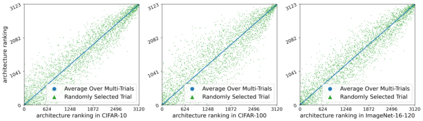

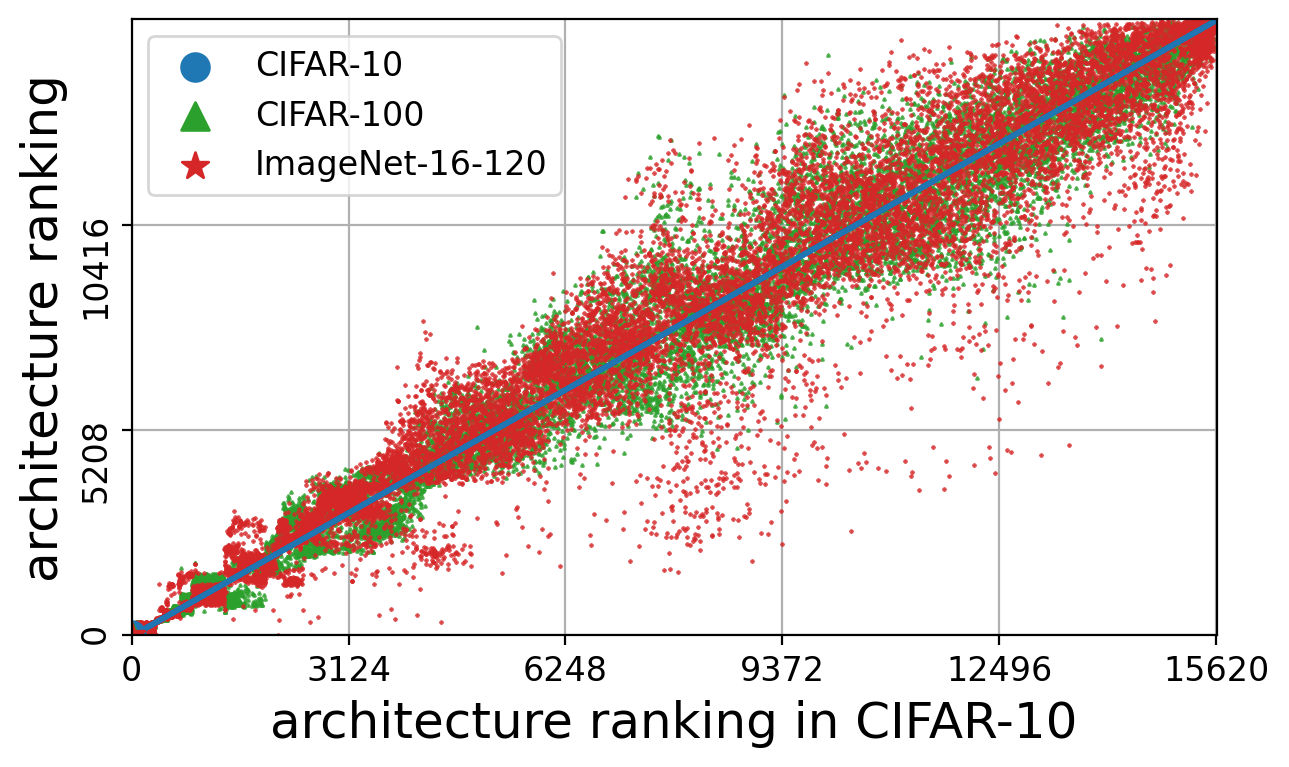

Neural architecture search (NAS) has attracted considerable attention and has been shown to bring tangible benefits in many applications over the past few years. Architecture topology and architecture size are regarded as two of the most important factors in the performance of deep learning models, and the community has produced many search algorithms for both. However, the performance gains from these search algorithms are achieved under different search spaces and training setups. This makes the overall performance of the algorithms to some extent incomparable, and leaves it unclear how much improvement comes from any individual sub-module of a search method. In this paper, we propose NATS-Bench, a unified benchmark for searching over both topology and size, applicable to (almost) any up-to-date NAS algorithm. NATS-Bench includes a search space of 15,625 neural cell candidates for architecture topology and 32,768 candidates for architecture size, evaluated on three datasets. We analyse the validity of our benchmark in terms of various criteria and a performance comparison of all candidates in the search space. We also show the versatility of NATS-Bench by benchmarking 13 recent state-of-the-art NAS algorithms on it. All logs and diagnostic information, obtained by training every candidate with the same setup, are provided. This allows a much larger community of researchers to focus on developing better NAS algorithms in a more comparable and computationally affordable environment. All code is publicly available at https://xuanyidong.com/assets/projects/NATS-Bench.
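The two search-space sizes quoted in the abstract can be checked with a short enumeration. The sketch below is illustrative only and rests on assumptions the abstract does not state: that the topology space assigns one of 5 candidate operations to each of 6 cell edges, and that the size space picks one of 8 channel counts for each of 5 layers. The operation names and channel values are placeholders chosen for the example; only the resulting counts are taken from the text.

```python
from itertools import product

# Assumption (not stated in the abstract): each of 6 cell edges
# takes one of 5 candidate operations. Names are illustrative.
OPS = ["none", "skip_connect", "nor_conv_1x1", "nor_conv_3x3", "avg_pool_3x3"]
NUM_EDGES = 6

# Assumption (not stated in the abstract): each of 5 layers
# takes one of 8 candidate channel counts. Values are illustrative.
CHANNELS = [8, 16, 24, 32, 40, 48, 56, 64]
NUM_LAYERS = 5


def count_candidates(choices, slots):
    """Count every assignment of one choice to each slot by enumeration."""
    return sum(1 for _ in product(choices, repeat=slots))


topology_count = count_candidates(OPS, NUM_EDGES)
size_count = count_candidates(CHANNELS, NUM_LAYERS)

print(f"topology candidates: {topology_count:,}")
print(f"size candidates:     {size_count:,}")
```

Under these assumptions the counts are 5^6 and 8^5, which agree with the 15,625 and 32,768 candidates reported above. Both spaces are small enough to enumerate, which is what makes it feasible to train every candidate under one setup.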
