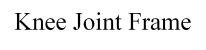

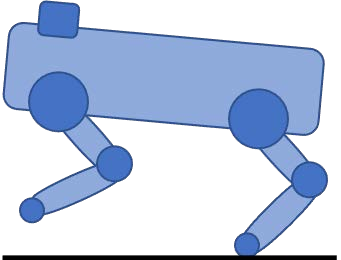

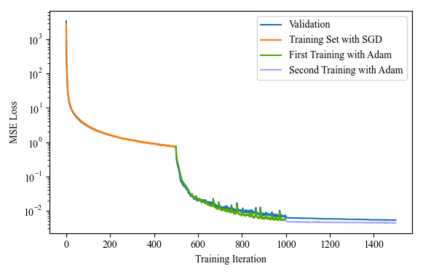

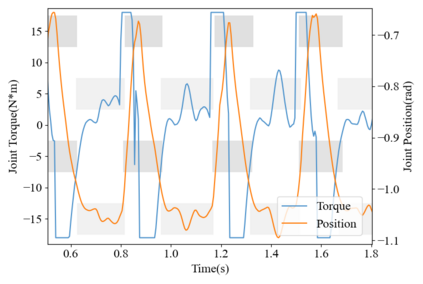

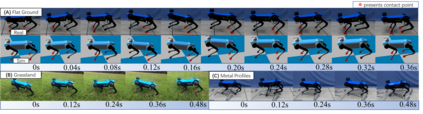

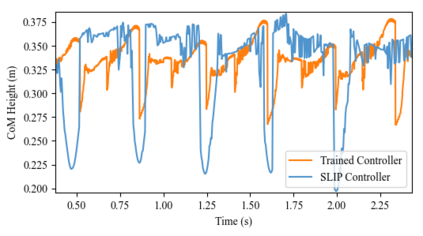

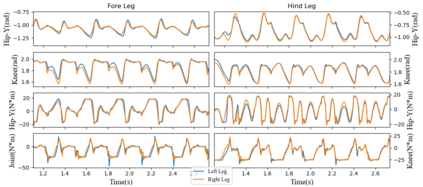

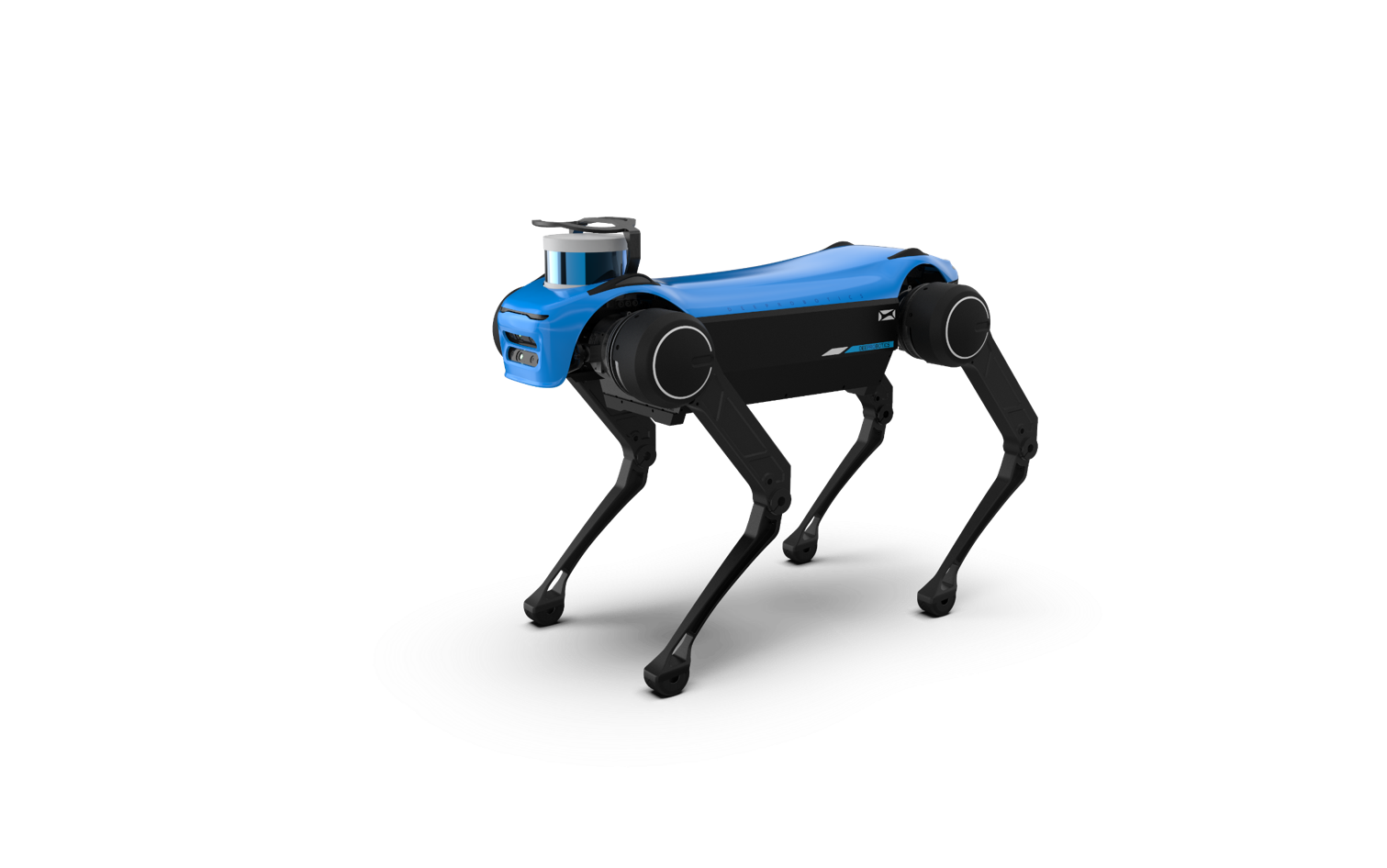

Bounding is an important quadrupedal gait for negotiating obstacles. However, because of the large number of robot and environmental constraints, conventional planning and control methods have limited ability to adapt bounding gaits to varied terrain in real time. We propose an efficient approach to learning robust bounding gaits: a deep neural network (DNN) is first pretrained on data collected from a robot running conventional model-based controllers, and the pretrained DNN weights are then further optimized via deep reinforcement learning (DRL). We also designed a reward function based on foot contact points to enforce gait symmetry and periodicity, and used feature engineering to improve the DRL model's input features and, in turn, its bounding performance. The DNN-based feedback controller was learned first in simulation and then deployed directly and successfully on the real Jueying-Mini robot. Compared with the previous model-based controller, it was computationally more efficient and performed much better in terms of robustness and stability in both indoor and outdoor experiments.
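The abstract does not give the exact reward terms. As a rough illustration only, the sketch below shows one way a contact-based term could reward bounding symmetry and periodicity from four foot-contact flags and a gait phase. The leg ordering, the idealized 50/50 stance schedule (which ignores the flight phases of a real bound), the helper names `reference_contacts` and `contact_reward`, and the weights are all hypothetical assumptions, not the authors' formulation.

```python
from dataclasses import dataclass

# Hypothetical leg order: front-left, front-right, hind-left, hind-right.
FL, FR, HL, HR = 0, 1, 2, 3


@dataclass(frozen=True)
class ContactRewardWeights:
    # Illustrative weights (assumption); not values reported in the paper.
    symmetry: float = 0.5
    periodicity: float = 0.5


def reference_contacts(phase: float, duty: float = 0.5) -> tuple[bool, bool, bool, bool]:
    """Idealized bounding schedule (assumption): the front pair is in stance for
    the first `duty` fraction of the cycle and the hind pair for the remainder."""
    phase = phase % 1.0
    front_down = phase < duty
    hind_down = not front_down
    return (front_down, front_down, hind_down, hind_down)


def contact_reward(contacts, phase, w=ContactRewardWeights()):
    """Return a reward in [0, w.symmetry + w.periodicity] from contact flags."""
    # Symmetry: left and right legs of each pair should touch down together.
    pairs_in_sync = int(contacts[FL] == contacts[FR]) + int(contacts[HL] == contacts[HR])
    symmetry = pairs_in_sync / 2.0

    # Periodicity: each foot's contact state should follow the phase schedule.
    ref = reference_contacts(phase)
    matches = sum(int(c == r) for c, r in zip(contacts, ref))
    periodicity = matches / 4.0

    return w.symmetry * symmetry + w.periodicity * periodicity


if __name__ == "__main__":
    # Front feet down early in the cycle: symmetric and on schedule.
    print(contact_reward((True, True, False, False), phase=0.25))  # 1.0
    # Left-side feet down (pace-like): no pair symmetry, half the schedule.
    print(contact_reward((True, False, True, False), phase=0.25))  # 0.25
```

Scoring symmetry and schedule-matching separately gives a policy partial credit while it is still learning, rather than a single all-or-nothing signal.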