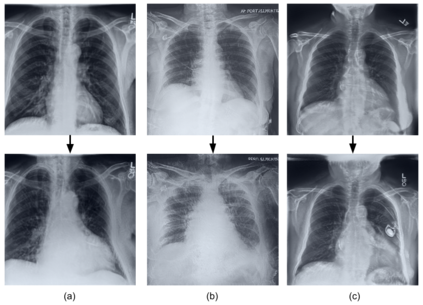

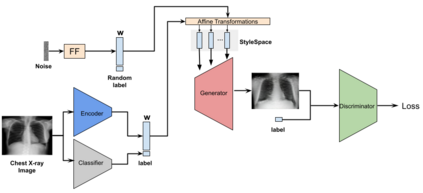

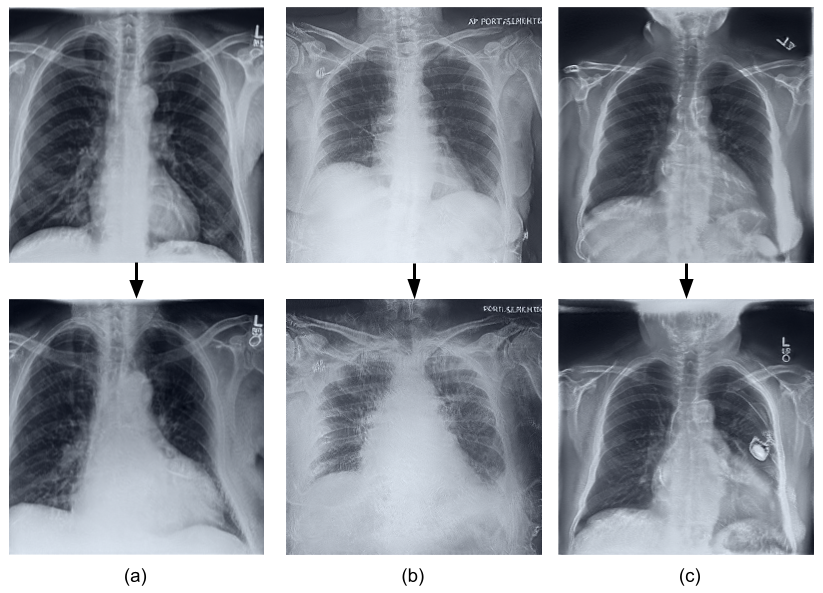

Deep learning models used in medical image analysis raise reliability concerns because of their black-box nature. To shed light on these models, previous work has predominantly focused on identifying the contribution of input features to the diagnosis, i.e., feature attribution. In this work, we explore counterfactual explanations to identify which patterns the models rely on for diagnosis. Specifically, we investigate how changing features within chest X-rays affects the classifier's output, in order to understand its decision mechanism. We leverage a StyleGAN-based approach (StyleEx) to create counterfactual explanations for chest X-rays by manipulating specific directions in their latent space. In addition, we propose EigenFind to significantly reduce the computation time needed to generate explanations. We clinically evaluate the relevance of our counterfactual explanations with the help of radiologists. Our code is publicly available.
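The core idea of probing a classifier through latent directions can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the linear `generate` function and the logistic `classify` function are hypothetical stand-ins for a StyleGAN generator and a chest X-ray classifier, and the ranking loop is a plain brute-force sweep, not the StyleEx or EigenFind procedure.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, IMAGE_DIM = 8, 64

# Hypothetical stand-ins (assumptions, not the models from the abstract):
# a linear "generator" and a logistic "classifier" over flattened images.
G = rng.normal(size=(IMAGE_DIM, LATENT_DIM))
w = rng.normal(size=IMAGE_DIM)


def generate(z):
    """Map a latent code to a (flattened) toy image."""
    return G @ z


def classify(x):
    """Return a toy probability for the positive class."""
    return 1.0 / (1.0 + np.exp(-(w @ x) / IMAGE_DIM))


def direction_effect(z, direction, step=2.0):
    """Change in classifier output when z is moved along a unit direction."""
    d = direction / np.linalg.norm(direction)
    return classify(generate(z + step * d)) - classify(generate(z))


def rank_directions(z, directions, step=2.0):
    """Order candidate directions by how strongly they change the output."""
    effects = np.array([direction_effect(z, d, step) for d in directions])
    order = np.argsort(-np.abs(effects))
    return order, effects


z = rng.normal(size=LATENT_DIM)
directions = np.eye(LATENT_DIM)  # one candidate direction per latent axis
order, effects = rank_directions(z, directions)
for i in order[:3]:
    print(f"direction {i}: change in output = {effects[i]:+.4f}")
```

In this sketch, the image generated at `z + step * d` plays the role of a counterfactual: it shows what changes in the input when the classifier's output moves. The sweep needs one generator and classifier call per candidate direction, which hints at why reducing the cost of the search matters.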
