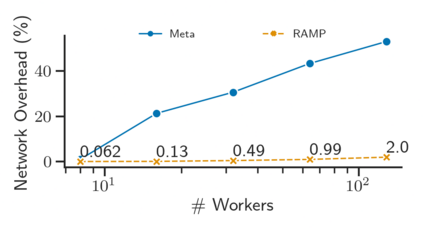

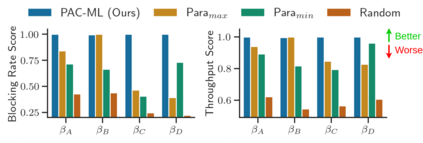

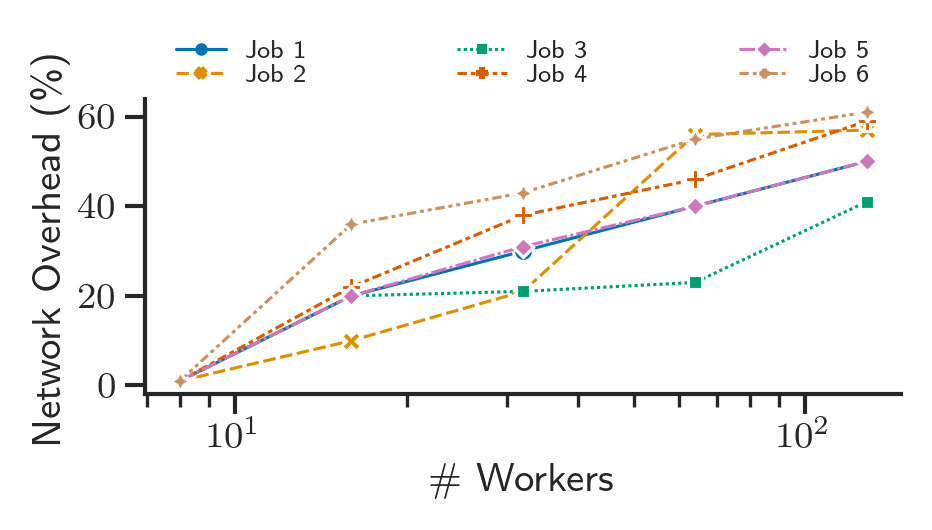

From natural language processing to genome sequencing, large-scale machine learning models are bringing advances to a broad range of fields. Many of these models are too large to be trained on a single machine, and instead must be distributed across multiple devices. This has motivated research into new compute and network systems capable of handling such tasks. In particular, recent work has focused on developing management schemes which decide how to allocate distributed resources such that some overall objective, such as minimising the job completion time (JCT), is optimised. However, such studies omit explicit consideration of how much a job should be distributed, usually assuming that maximum distribution is desirable. In this work, we show that maximum parallelisation is sub-optimal in relation to user-critical metrics such as throughput and blocking rate. To address this, we propose PAC-ML (partitioning for asynchronous computing with machine learning). PAC-ML leverages a graph neural network and reinforcement learning to learn how much to partition computation graphs such that the number of jobs which meet arbitrary user-defined JCT requirements is maximised. In experiments with five real deep learning computation graphs on a recently proposed optical architecture across four user-defined JCT requirement distributions, we demonstrate that PAC-ML achieves up to 56.2% lower blocking rates in dynamic job arrival settings than the canonical maximum parallelisation strategy used by most prior works.
