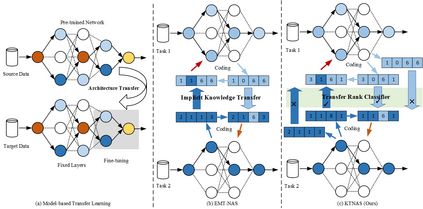

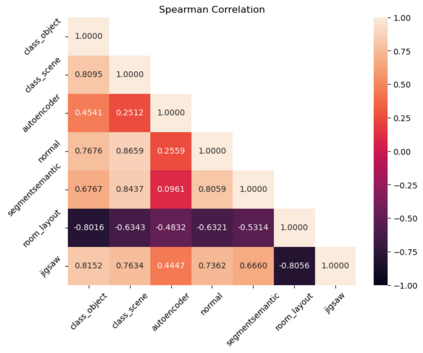

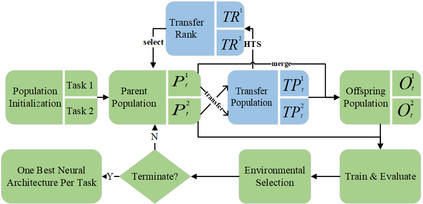

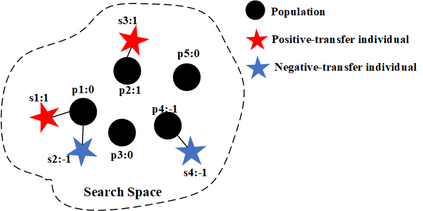

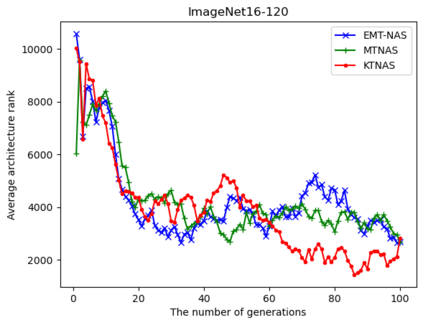

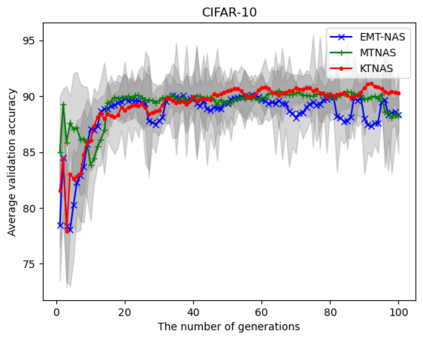

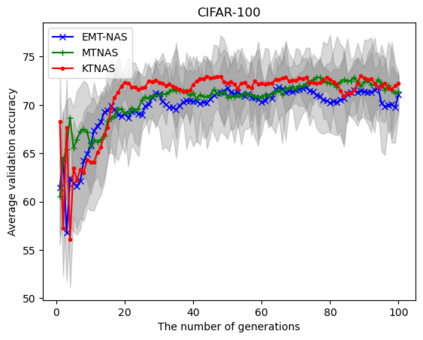

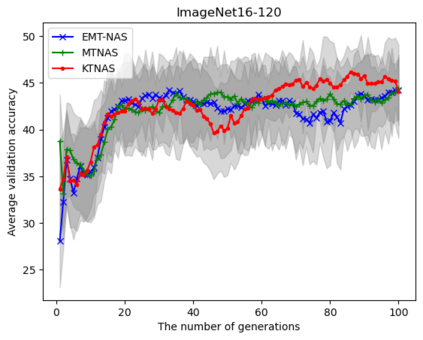

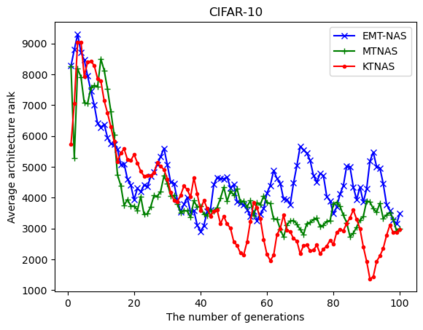

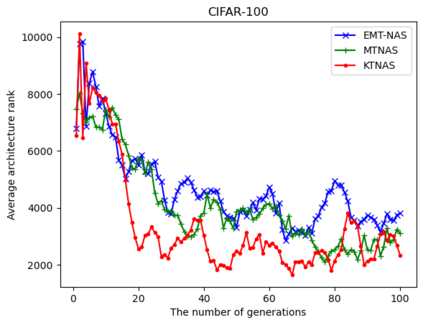

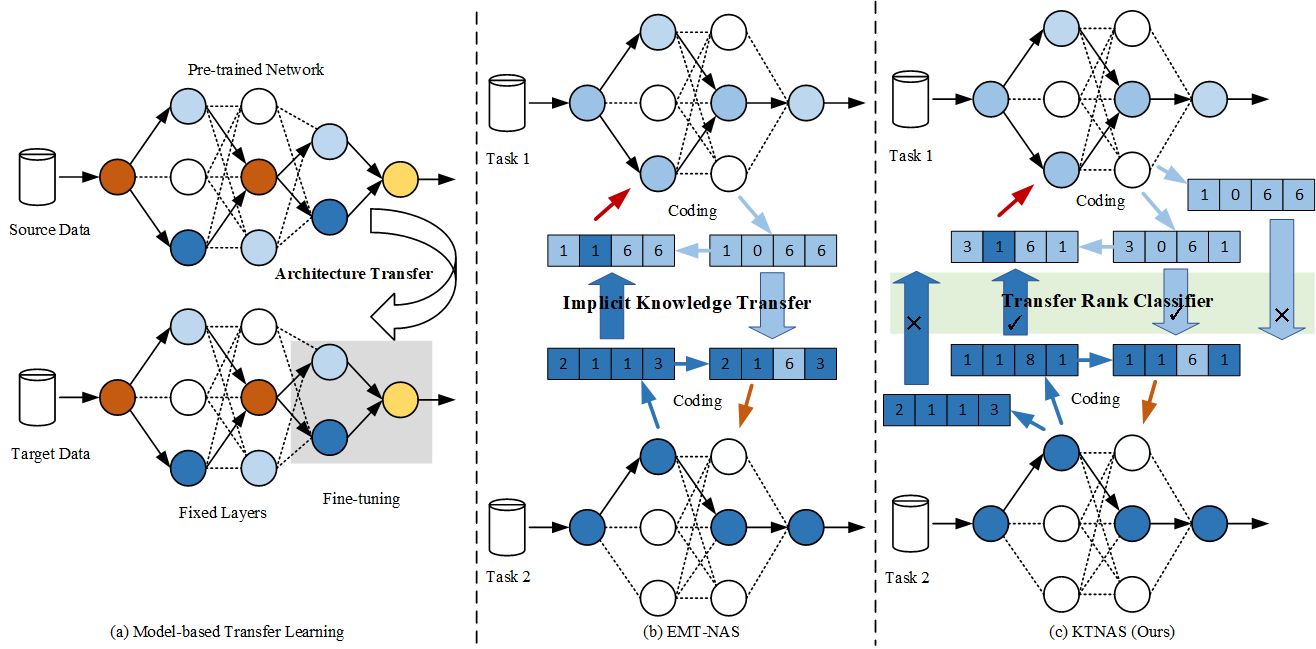

Multi-task neural architecture search (NAS) enables the transfer of architectural knowledge across tasks. However, ranking disorder between the source task and the target task degrades architecture performance on the downstream task. We propose KTNAS, an evolutionary cross-task NAS algorithm, to improve transfer efficiency. Our data-agnostic method converts neural architectures into graphs and uses the resulting architecture embedding vectors for subsequent performance prediction. To address the performance degradation, KTNAS introduces the transfer rank, an instance-based classifier. We verify search efficiency on NAS-Bench-201 and transferability to various vision tasks on Micro TransNAS-Bench-101. We demonstrate the scalability of our method on the DARTS search space with datasets including CIFAR-10/100, MNIST/Fashion-MNIST, and MedMNIST. Experimental results show that KTNAS outperforms peer multi-task NAS algorithms in both search efficiency and downstream task performance. Ablation studies show that the transfer rank is essential to transfer performance.
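
As an illustration of ranking disorder, the sketch below compares how the same set of architectures is ordered on a source task and on a target task, using Kendall's tau. This is not the transfer rank used by KTNAS; Kendall's tau is an assumed, generic way to quantify the disagreement between two rankings, and the function name `kendall_tau` and the accuracy values are hypothetical.

```python
from itertools import combinations


def kendall_tau(source_scores, target_scores):
    """Kendall's tau-a between two score lists for the same architectures.

    Returns 1.0 when both tasks order the architectures identically,
    -1.0 when the orders are exactly reversed. Tied pairs count as
    neither concordant nor discordant.
    """
    n = len(source_scores)
    if n != len(target_scores):
        raise ValueError("both score lists must cover the same architectures")
    if n < 2:
        raise ValueError("need at least two architectures to compare rankings")

    concordant = 0
    discordant = 0
    for i, j in combinations(range(n), 2):
        s_diff = source_scores[i] - source_scores[j]
        t_diff = target_scores[i] - target_scores[j]
        if s_diff * t_diff > 0:
            concordant += 1  # both tasks agree on which architecture is better
        elif s_diff * t_diff < 0:
            discordant += 1  # the tasks disagree: one instance of ranking disorder

    n_pairs = n * (n - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical accuracies of four architectures on a source and a target task.
source_acc = [0.91, 0.89, 0.93, 0.85]
target_acc = [0.70, 0.74, 0.72, 0.60]
tau = kendall_tau(source_acc, target_acc)
```

A value of `tau` well below 1 indicates that the best architecture on the source task is not necessarily the best on the target task, which is the situation the abstract describes as degrading downstream performance.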
