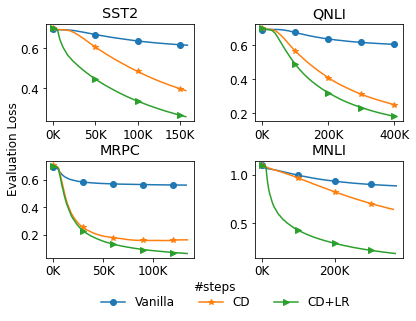

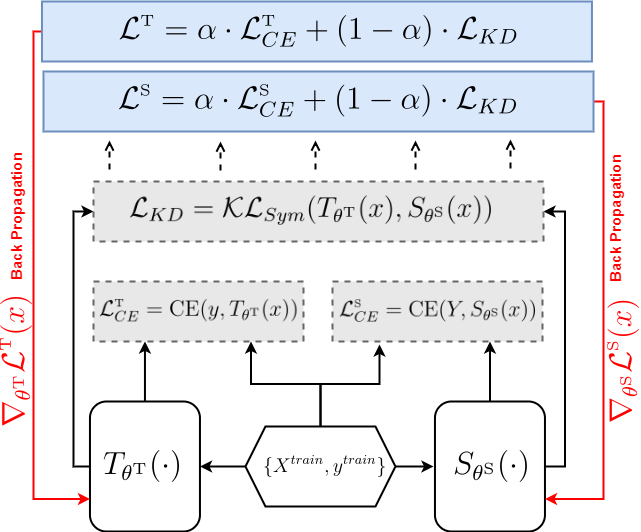

Knowledge Distillation (KD) is extensively used to compress and deploy large pre-trained language models on edge devices for real-world applications. However, one neglected area of research is the impact of noisy (corrupted) labels on KD. We present, to the best of our knowledge, the first study on KD with noisy labels in Natural Language Understanding (NLU). We document the scope of the problem and present two methods to mitigate the impact of label noise. Experiments on the GLUE benchmark show that our methods are effective even under high noise levels. Nevertheless, our results indicate that more research is necessary to cope with label noise in KD.

翻译:知识蒸馏(KD)被广泛用于压缩和在边缘设备上部署大型预先训练的语言模型,用于现实世界的应用;然而,一个被忽视的研究领域是噪音(干扰)标签对KD的影响。 我们据我们所知,提出了第一份在《自然语言理解》中贴有噪音标签的KD研究报告。我们记录了问题的范围,并提出了减轻标签噪音影响的两种方法。关于GLUE基准的实验表明,我们的方法即使在高噪音水平下也是有效的。然而,我们的结果表明,有必要开展更多的研究,以应对KD下的标签噪音。
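To make the problem concrete: in the standard KD objective of Hinton et al. (2015), the student minimizes a weighted sum of a cross-entropy term on the gold labels and a KL-divergence term toward the teacher's temperature-softened outputs. Corrupted gold labels therefore enter the student's loss directly, and possibly indirectly through the teacher as well, if it was fine-tuned on the same labels. The minimal sketch below shows that generic objective together with symmetric label noise, a common way to simulate corruption in noisy-label experiments. It is not either of the paper's mitigation methods. The function names and the `alpha` and `temperature` defaults are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, alpha=0.5, temperature=2.0):
    """Hinton-style KD loss for a single example (illustrative hyperparameters).

    alpha weights the hard-label cross-entropy, which uses the possibly noisy
    gold label; (1 - alpha) weights KL(teacher || student) at temperature T,
    scaled by T^2 so its gradients stay comparable in magnitude.
    """
    # Hard-label term: a corrupted label enters the objective here.
    p_student = softmax(student_logits)
    hard = -math.log(p_student[label])
    # Soft-label term: match the teacher's softened distribution.
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    soft = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s) if pt > 0)
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


def inject_symmetric_noise(labels, num_classes, noise_rate, seed=0):
    """With probability noise_rate, replace each label by a different class chosen uniformly."""
    rng = random.Random(seed)
    noisy = []
    for y in labels:
        if rng.random() < noise_rate:
            noisy.append(rng.choice([c for c in range(num_classes) if c != y]))
        else:
            noisy.append(y)
    return noisy


if __name__ == "__main__":
    student, teacher = [2.0, 0.5], [3.0, 0.0]
    # The same logits scored against a clean label (0) and a flipped label (1).
    print(kd_loss(student, teacher, label=0), kd_loss(student, teacher, label=1))
    print(inject_symmetric_noise([0, 1, 0, 1], num_classes=2, noise_rate=0.5))
```

Because only the hard-label term depends on `label`, flipping a label changes the loss by exactly `alpha` times the log-ratio of the student's probabilities for the original and flipped classes. Lowering `alpha` shifts weight toward the teacher, which helps only insofar as the teacher itself has not absorbed the noise.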