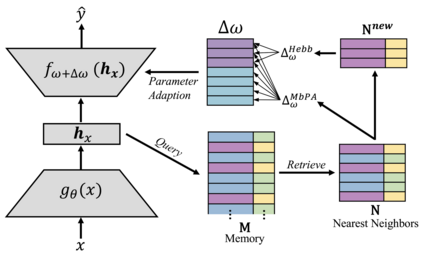

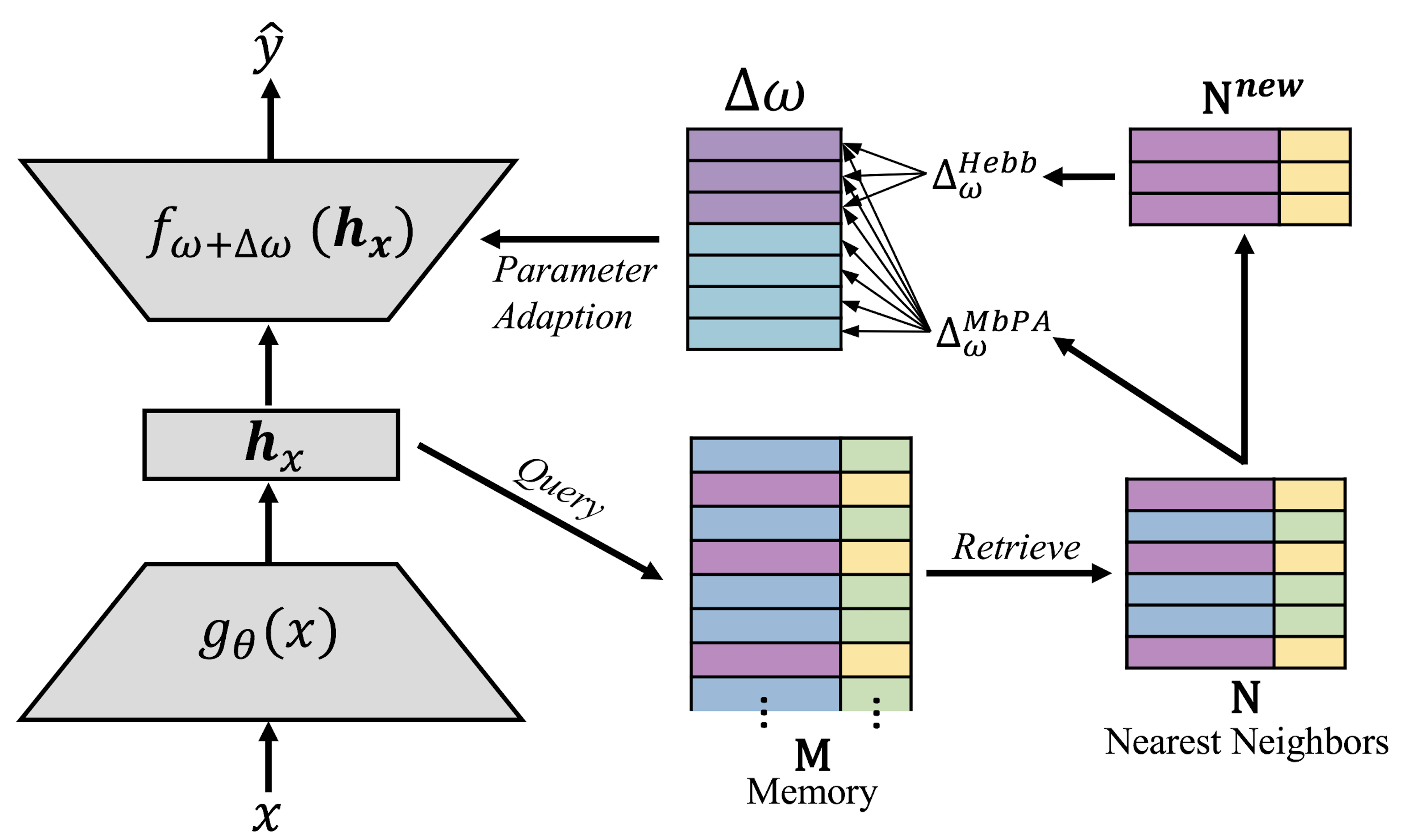

The ability to quickly learn new knowledge (e.g., new classes or data distributions) is an important step towards human-level intelligence. In this paper, we consider scenarios that require learning new classes or data distributions quickly and incrementally over time, as often happens in real-world dynamic environments. We propose "Memory-based Hebbian Parameter Adaptation" (Hebb) to tackle the two major challenges towards this goal, catastrophic forgetting and sample efficiency, in a unified framework. To mitigate catastrophic forgetting, Hebb augments a regular neural classifier with a continuously updated memory module that stores representations of previous data. To improve sample efficiency, we propose a parameter adaptation method based on the well-known Hebbian theory, which directly "wires" the output network's parameters with similar representations retrieved from the memory. We empirically verify the superior performance of Hebb through extensive experiments on a wide range of learning tasks (image classification, language modeling) and learning scenarios (continual, incremental, online). We demonstrate that Hebb effectively mitigates catastrophic forgetting and that it learns new knowledge better and faster than current state-of-the-art methods.
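The abstract gives no equations, so the following is only a rough sketch of the two ingredients it names: a memory of past representations, and a Hebbian-style update that "wires" the output layer using the entries retrieved for a query. Everything concrete here is an assumption made for illustration and is not taken from the paper: the names `EpisodicMemory` and `hebbian_adapt`, the FIFO replacement policy, Euclidean nearest-neighbour retrieval, and the update rule `W[y] += lr * h`.

```python
import numpy as np


class EpisodicMemory:
    """Fixed-size FIFO store of (representation, label) pairs (hypothetical design)."""

    def __init__(self, capacity, dim):
        self.keys = np.zeros((capacity, dim))
        self.labels = np.zeros(capacity, dtype=int)
        self.capacity = capacity
        self.size = 0   # number of valid entries
        self.next = 0   # next slot to overwrite

    def write(self, h, y):
        # Overwrite the oldest slot once the memory is full.
        self.keys[self.next] = h
        self.labels[self.next] = y
        self.next = (self.next + 1) % self.capacity
        self.size = min(self.size + 1, self.capacity)

    def retrieve(self, query, k):
        # Return the k stored entries closest to the query (Euclidean distance).
        k = min(k, self.size)
        dists = np.linalg.norm(self.keys[: self.size] - query, axis=1)
        idx = np.argsort(dists)[:k]
        return self.keys[idx], self.labels[idx]


def hebbian_adapt(W, neighbors, labels, lr=0.5):
    """Return a locally adapted copy of the output weights W (classes x dim).

    Assumed Hebbian-style rule: for each retrieved pair (h, y), strengthen the
    connection between the input activity h and the output unit y.
    """
    W_local = W.copy()  # leave the shared weights untouched
    for h, y in zip(neighbors, labels):
        W_local[y] += lr * h
    return W_local


# Usage: two stored examples of class 2, one of class 0, untrained output layer.
memory = EpisodicMemory(capacity=4, dim=2)
memory.write(np.array([1.0, 0.0]), 2)
memory.write(np.array([0.9, 0.2]), 2)
memory.write(np.array([0.0, 1.0]), 0)

W = np.zeros((3, 2))
query = np.array([0.9, 0.1])
neighbors, labels = memory.retrieve(query, k=2)
W_local = hebbian_adapt(W, neighbors, labels)
predicted = int(np.argmax(W_local @ query))
```

In this toy setup, the output layer starts with zero weights and is adapted for a single query from two retrieved neighbours, which is meant to illustrate the sample-efficiency idea. How Hebb actually selects neighbours, scales the update, and manages its memory is specified in the paper, not here.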
