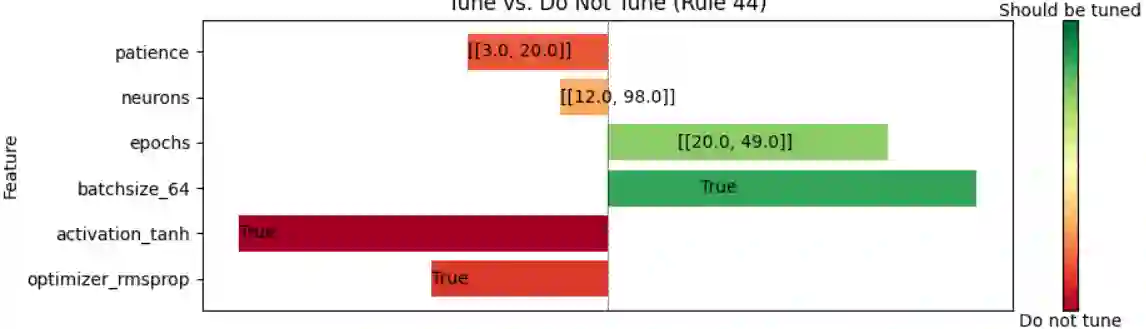

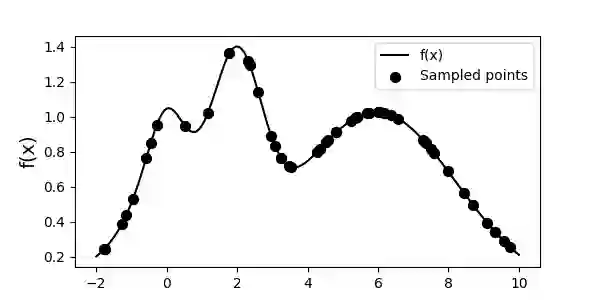

Manual parameter tuning of cyber-physical systems is common practice, but it is labor-intensive. Bayesian Optimization (BO) offers an automated alternative, yet its black-box nature reduces trust and limits collaborative tuning between human experts and BO. Without explanations, experts struggle to interpret BO recommendations. This paper addresses the post-hoc BO explainability problem for cyber-physical systems. We introduce TNTRules (Tune-No-Tune Rules), a novel algorithm that provides both global and local explanations for BO recommendations. TNTRules generates actionable rules and visual graphs that identify the bounds and ranges of optimal solutions, as well as potential alternative solutions. Unlike existing explainable AI (XAI) methods, TNTRules is tailored specifically to BO: it encodes uncertainty through a variance pruning technique combined with hierarchical agglomerative clustering. A multi-objective optimization approach is used to maximize explanation quality. We evaluate TNTRules using established XAI metrics (Correctness, Completeness, and Compactness) and compare it against adapted baseline methods. The results demonstrate that TNTRules generates high-fidelity, compact, and complete explanations, significantly outperforming three baselines on five multi-objective test functions and two hyperparameter tuning problems.
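To make the idea of range-style rules concrete, the following is a minimal sketch, not the TNTRules algorithm itself. It assumes that each sampled configuration carries a predictive variance from the BO surrogate, that high-variance samples are simply discarded, and that single-linkage agglomerative clustering groups the rest before each cluster is summarized as per-parameter bounds. The function names, the thresholds `max_var` and `max_dist`, and the parameter names `kp` and `ki` are all hypothetical.

```python
from itertools import combinations


def prune_by_variance(samples, max_var):
    """Keep only samples whose predictive variance is at most max_var (assumed pruning criterion)."""
    return [s for s in samples if s["var"] <= max_var]


def agglomerate(points, max_dist):
    """Single-linkage agglomerative clustering.

    Repeatedly merges the two closest clusters and stops once the
    closest pair is farther apart than max_dist.
    """
    clusters = [[p] for p in points]

    def dist(a, b):
        # Single linkage: Euclidean distance between the closest members.
        return min(
            sum((x - y) ** 2 for x, y in zip(p, q)) ** 0.5
            for p in a
            for q in b
        )

    while len(clusters) > 1:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: dist(clusters[ij[0]], clusters[ij[1]]),
        )
        if dist(clusters[i], clusters[j]) > max_dist:
            break
        clusters[i] += clusters[j]  # i < j, so deleting j keeps i valid
        del clusters[j]
    return clusters


def cluster_to_rule(cluster, names):
    """Summarize a cluster as an axis-aligned range per parameter."""
    return {
        name: (min(p[k] for p in cluster), max(p[k] for p in cluster))
        for k, name in enumerate(names)
    }


def explain(samples, names, max_var, max_dist):
    """Prune uncertain samples, cluster the rest, and emit one range rule per cluster."""
    kept = prune_by_variance(samples, max_var)
    clusters = agglomerate([s["x"] for s in kept], max_dist)
    return [cluster_to_rule(c, names) for c in clusters]


# Hypothetical surrogate samples: parameter vector "x" and predictive variance "var".
samples = [
    {"x": (0.10, 0.20), "var": 0.01},
    {"x": (0.15, 0.25), "var": 0.02},
    {"x": (0.80, 0.90), "var": 0.01},
    {"x": (0.85, 0.95), "var": 0.03},
    {"x": (0.50, 0.50), "var": 0.90},  # too uncertain, pruned
]
rules = explain(samples, ["kp", "ki"], max_var=0.1, max_dist=0.2)
for rule in rules:
    print(rule)
```

In this toy setting each printed dictionary reads as a rule of the form "keep `kp` and `ki` within these ranges". The abstract's visual graphs, alternative solutions, and multi-objective selection of explanation quality are outside the scope of the sketch.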
