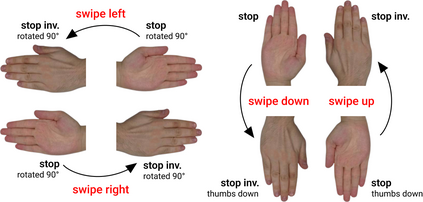

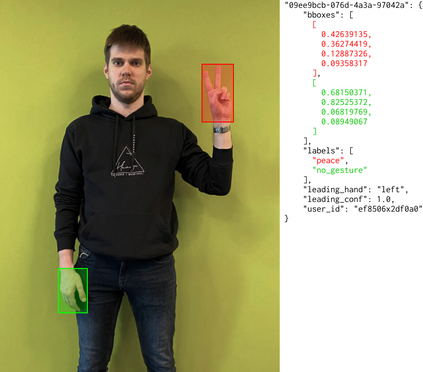

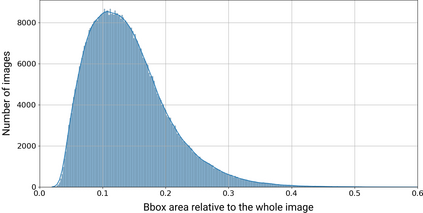

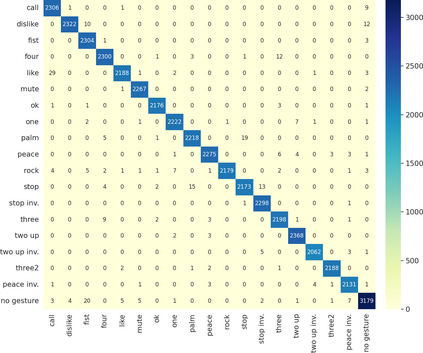

In this paper, we introduce HaGRID (HAnd Gesture Recognition Image Dataset), an enormous dataset for hand gesture recognition (HGR) systems. It contains 552,992 samples divided into 18 gesture classes. The annotations consist of hand bounding boxes with gesture labels, along with markup of the leading hand. The proposed dataset enables building HGR systems for video conferencing services, home automation systems, the automotive sector, services for people with speech and hearing impairments, and more. We focus especially on interaction with devices in order to control them. That is why all 18 chosen gestures are functional, familiar to most people, and can serve as a cue to take some action. In addition, we used crowdsourcing platforms to collect the dataset and took various parameters into account to ensure data diversity. We describe the challenges of using existing HGR datasets for our task and provide a detailed overview of them. Furthermore, we propose baselines for the hand detection and gesture classification tasks.
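The abstract states that each annotation pairs hand bounding boxes with gesture labels and marks the leading hand, but it does not specify a file format. The Python sketch below is one hypothetical way to represent and validate such a per-image record. The field names (`bboxes`, `labels`, `leading_hand`), the normalized `[x, y, w, h]` box convention, and the gesture names in the usage example are all assumptions for illustration, not the dataset's documented schema.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class HandAnnotation:
    # Hypothetical per-image record: one box and one gesture label per hand,
    # plus which hand ("left" or "right") is the leading one.
    bboxes: List[Tuple[float, float, float, float]]  # assumed normalized [x, y, w, h]
    labels: List[str]
    leading_hand: str


def parse_record(record: dict) -> HandAnnotation:
    """Build a HandAnnotation from an assumed dict layout, checking box/label pairing."""
    if len(record["bboxes"]) != len(record["labels"]):
        raise ValueError("each bounding box needs exactly one gesture label")
    return HandAnnotation(
        bboxes=[tuple(b) for b in record["bboxes"]],
        labels=list(record["labels"]),
        leading_hand=record["leading_hand"],
    )


def to_pixel_boxes(ann: HandAnnotation, width: int, height: int) -> List[Tuple[int, int, int, int]]:
    """Convert assumed normalized [x, y, w, h] boxes to pixel [x1, y1, x2, y2] corners."""
    boxes = []
    for x, y, w, h in ann.bboxes:
        boxes.append((
            round(x * width),          # left edge
            round(y * height),         # top edge
            round((x + w) * width),    # right edge
            round((y + h) * height),   # bottom edge
        ))
    return boxes


if __name__ == "__main__":
    # Illustrative record with two hands; values are made up.
    record = {
        "bboxes": [[0.25, 0.5, 0.1, 0.2], [0.6, 0.4, 0.1, 0.2]],
        "labels": ["like", "stop"],
        "leading_hand": "right",
    }
    ann = parse_record(record)
    print(ann.leading_hand, to_pixel_boxes(ann, 1920, 1080))
```

Keeping boxes normalized in the record and converting to pixels only when an image size is known lets the same annotation serve images resized for detection or classification baselines; this is a design choice of the sketch, not a claim about how the authors store their data.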
