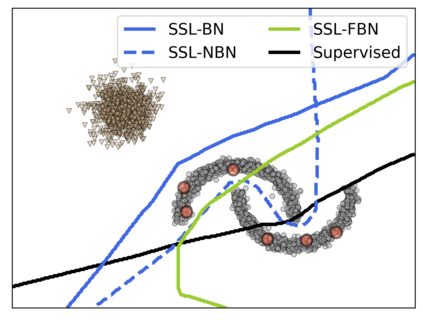

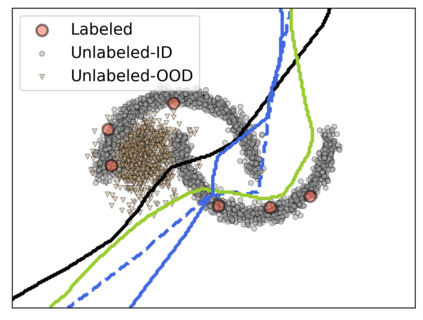

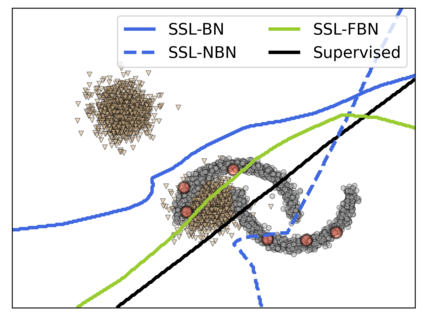

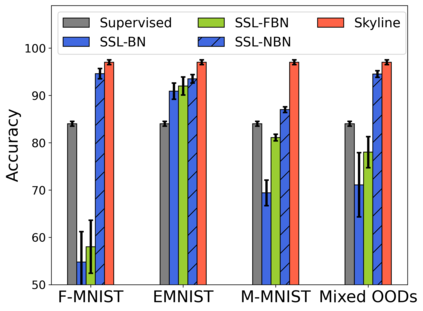

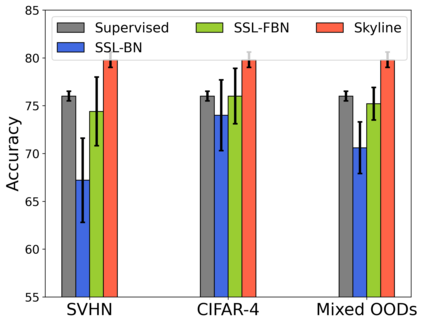

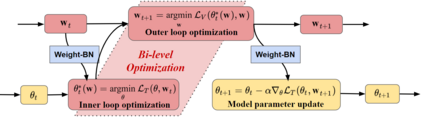

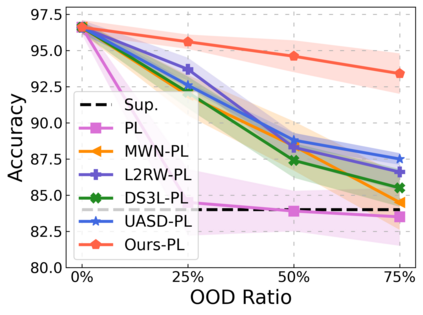

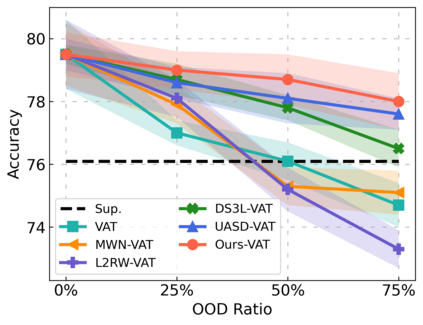

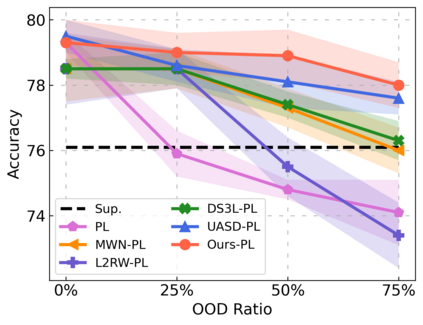

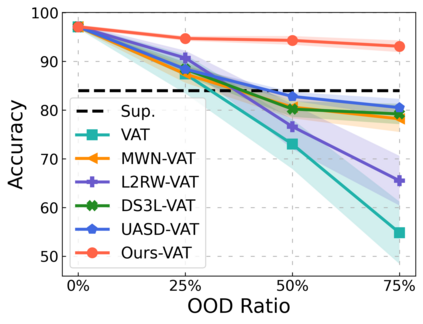

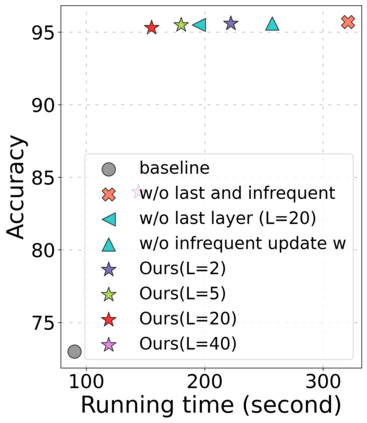

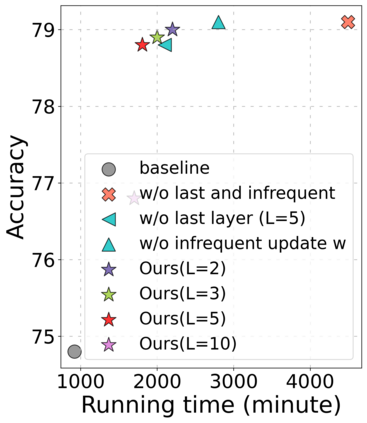

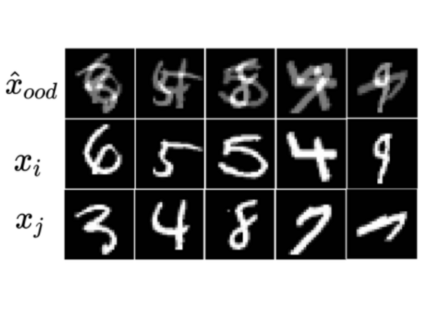

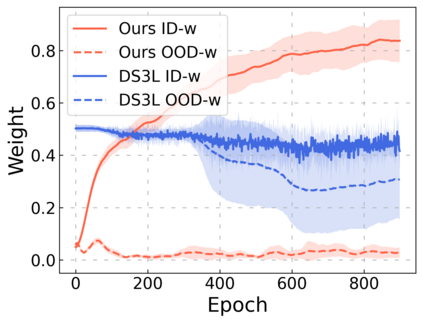

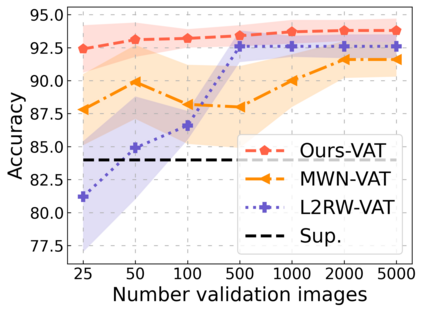

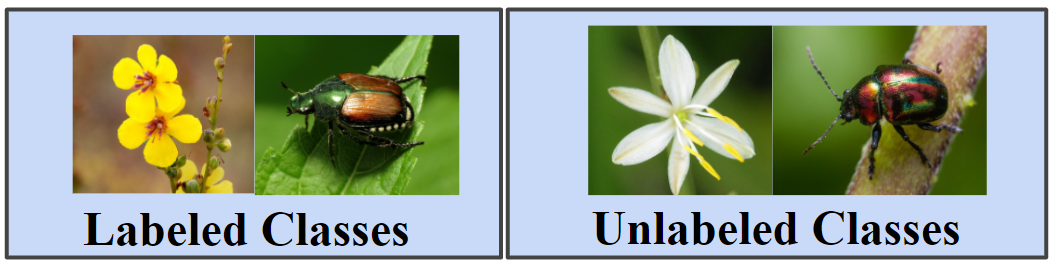

Recent semi-supervised learning (SSL) algorithms have achieved higher overall performance by learning better representations of unlabeled data. Nonetheless, recent research suggests that the performance of SSL algorithms can degrade when the unlabeled set contains out-of-distribution examples (OODs). This work addresses the following question: how do OOD data adversely affect SSL algorithms? To answer it, we investigate the critical causes of the negative effect of OODs on SSL algorithms. In particular, we find that 1) OOD instances close to the decision boundary have a more significant impact on performance than those farther away, and 2) Batch Normalization (BN), a popular module, may degrade rather than improve performance when the unlabeled set contains OODs. Based on these findings, we develop a unified weighted robust SSL framework that can be easily extended to many existing SSL algorithms and improves their robustness against OODs. More specifically, we develop an efficient bi-level optimization algorithm that accommodates high-order approximations of the objective and scales to multiple inner optimization steps, so that it can learn a massive number of weight parameters while outperforming existing low-order approximations of bi-level optimization. Further, we conduct a theoretical study of the impact of faraway OODs in the BN step and propose a weighted batch normalization (WBN) procedure for improved performance. Finally, we discuss the connection between our approach and low-order approximation techniques. Our experiments on synthetic and real-world datasets demonstrate that the proposed approach significantly enhances the robustness of four representative SSL algorithms against OODs compared to four state-of-the-art robust SSL strategies.
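
To make the idea behind weighted batch normalization concrete, the following is a minimal sketch in plain Python. The abstract does not give the WBN formula, so this is only one natural reading, under the assumption that each sample carries a non-negative weight and that the batch mean and variance are computed as weight-averaged statistics. The function name `weighted_batch_norm`, its parameters, and the toy values are hypothetical and are not taken from the paper.

```python
from math import sqrt


def weighted_batch_norm(batch, weights, eps=1e-5):
    """Normalize each feature with weight-averaged batch statistics.

    batch:   list of samples, each a list of feature values
    weights: one non-negative weight per sample (assumption: a weight
             near 0 marks a suspected OOD sample)
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    n_features = len(batch[0])

    # Weighted mean of every feature.
    mean = [
        sum(w * x[j] for w, x in zip(weights, batch)) / total
        for j in range(n_features)
    ]
    # Weighted (biased) variance of every feature.
    var = [
        sum(w * (x[j] - mean[j]) ** 2 for w, x in zip(weights, batch)) / total
        for j in range(n_features)
    ]
    # Every sample is normalized, including the down-weighted ones.
    return [
        [(x[j] - mean[j]) / sqrt(var[j] + eps) for j in range(n_features)]
        for x in batch
    ]


# Toy batch: two in-distribution samples and one faraway outlier.
batch = [[0.0], [2.0], [100.0]]
plain = weighted_batch_norm(batch, [1.0, 1.0, 1.0])     # ordinary BN statistics
weighted = weighted_batch_norm(batch, [1.0, 1.0, 0.0])  # outlier ignored in statistics
```

With uniform weights, the outlier inflates the batch variance and the two in-distribution samples are squeezed close together after normalization. With the outlier's weight set to zero, they are normalized to roughly -1 and +1. In the framework described above the weights would be learned by the bi-level optimization rather than set by hand.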
