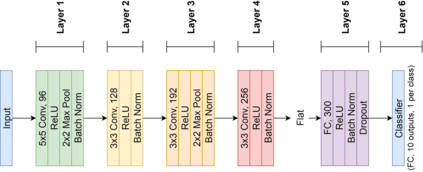

Recent work has shown that biologically plausible Hebbian learning can be integrated with backpropagation learning (backprop) when training deep convolutional neural networks. In particular, Hebbian learning can be used to train either the lower or the higher layers of a neural network. For instance, Hebbian learning is effective for re-training the higher layers of a pre-trained deep neural network: it achieves accuracy comparable to SGD while requiring fewer training epochs, which suggests potential applications in transfer learning. In this paper, we build on these results and further improve Hebbian learning in these settings by using a nonlinear Hebbian Principal Component Analysis (HPCA) learning rule in place of the Hebbian Winner-Takes-All (HWTA) strategy used in previous work. We test this approach in the context of computer vision: the HPCA rule is used to train convolutional neural networks to extract relevant features from the CIFAR-10 image dataset. The HPCA variant we explore improves on the previous results, motivating further interest in biologically plausible learning algorithms.
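The abstract contrasts two Hebbian update rules without stating their equations. As a rough illustration only, the sketch below assumes the textbook forms of each. For HWTA, only the unit with the largest response $y_k = w_k \cdot x$ moves toward the input, $\Delta w_k = \eta (x - w_k)$. For nonlinear HPCA, a Sanger-style generalized Hebbian rule with a nonlinearity $f$ is used, $\Delta w_i = \eta\, f(y_i)\,\big(x - \sum_{j \le i} f(y_j)\, w_j\big)$. The function names `hwta_update` and `hpca_update`, the learning rate, and the default choice `f = tanh` are hypothetical; the paper's exact formulation, and how it is applied to convolutional filters, may differ.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def hwta_update(w, x, lr=0.01):
    """Hypothetical Hebbian Winner-Takes-All step (textbook form, assumed).

    Only the unit with the largest response y_k = w_k . x is updated,
    moving its weight vector toward the input: dw_k = lr * (x - w_k).
    Returns the new weights and the index of the winning unit.
    """
    y = [dot(wi, x) for wi in w]
    k = max(range(len(w)), key=lambda i: y[i])
    new_w = [list(wi) for wi in w]
    new_w[k] = [wk + lr * (xk - wk) for wk, xk in zip(w[k], x)]
    return new_w, k


def hpca_update(w, x, lr=0.01, f=math.tanh):
    """Hypothetical nonlinear Hebbian PCA step (Sanger-style rule, assumed).

    dw_i = lr * f(y_i) * (x - sum_{j<=i} f(y_j) * w_j), with y_i = w_i . x.
    All units are updated from the old weights. With f = identity and a
    single unit, this reduces to Oja's rule.
    """
    fy = [f(dot(wi, x)) for wi in w]
    recon = [0.0] * len(x)  # running reconstruction from units 0..i
    new_w = []
    for i, wi in enumerate(w):
        recon = [r + fy[i] * wik for r, wik in zip(recon, wi)]
        new_w.append([wik + lr * fy[i] * (xk - rk)
                      for wik, xk, rk in zip(wi, x, recon)])
    return new_w


if __name__ == "__main__":
    # Toy data whose dominant direction is (1, 1) / sqrt(2).
    random.seed(0)
    w = [[random.uniform(-0.1, 0.1) for _ in range(2)] for _ in range(2)]
    for _ in range(5000):
        t = random.gauss(0.0, 1.0)
        x = [t + random.gauss(0.0, 0.1), t + random.gauss(0.0, 0.1)]
        w = hpca_update(w, x, lr=0.01)
    print("HPCA weights after training:", w)
```

The design difference is visible in the update targets: in HWTA a single winner absorbs each input, whereas in HPCA every unit learns, each one subtracting what the preceding units already reconstruct, so the units tend to capture successive principal directions rather than competing for the same pattern.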