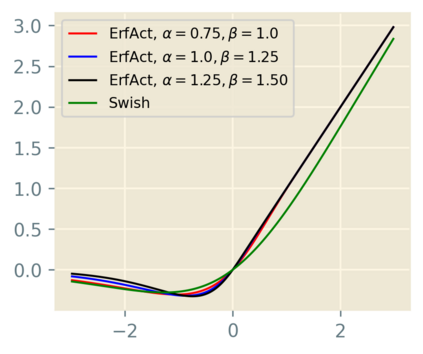

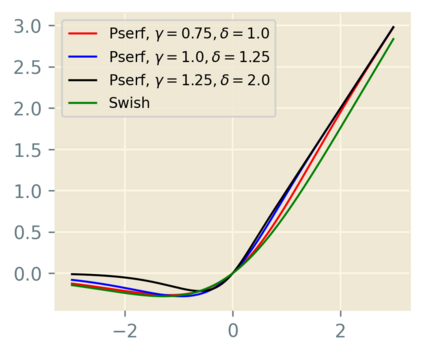

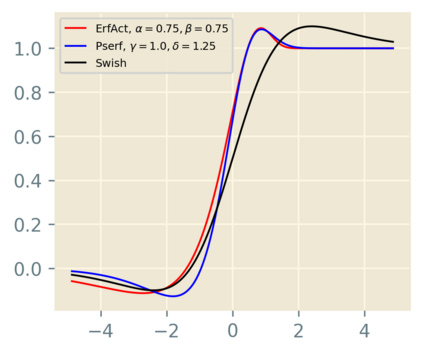

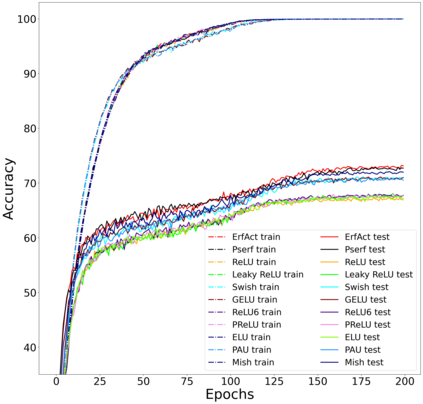

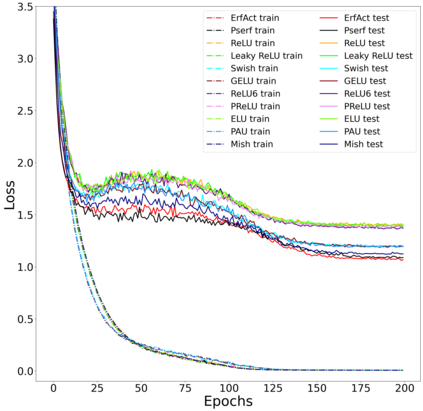

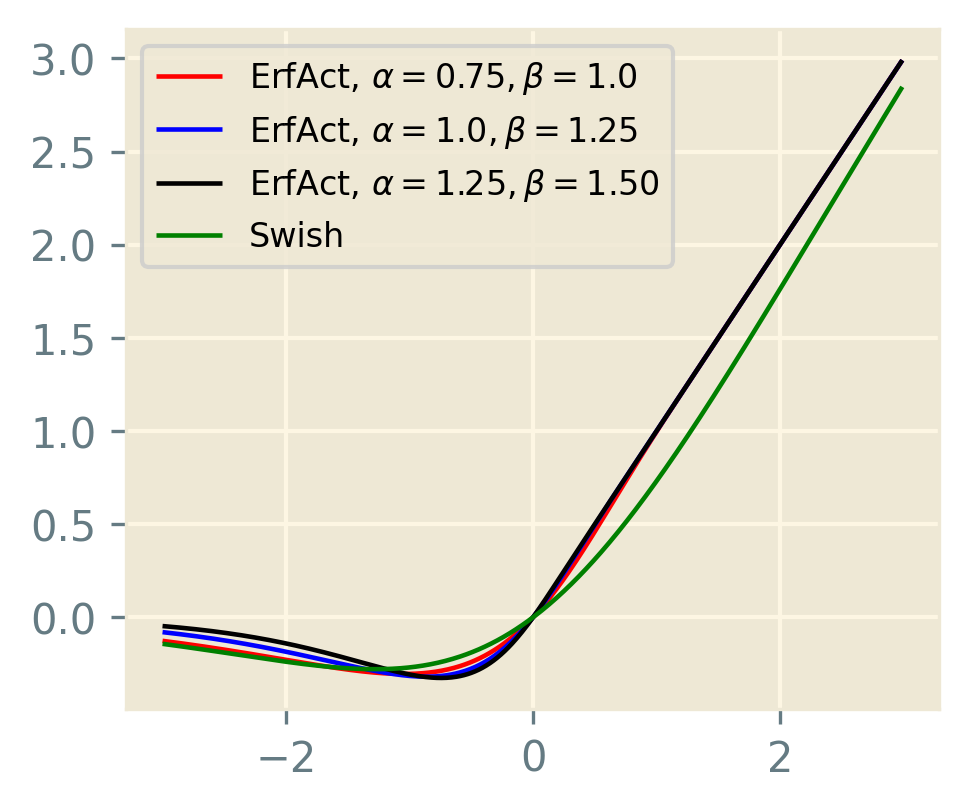

An activation function is a crucial component of a neural network: it introduces non-linearity into the network. Achieving state-of-the-art performance with a neural network also depends on a well-chosen activation function. We propose two novel non-monotonic, smooth, trainable activation functions, called ErfAct and Pserf. Experiments suggest that the proposed functions significantly improve network performance compared with widely used activations such as ReLU, Swish, and Mish. Replacing ReLU with ErfAct and Pserf yields 5.68% and 5.42% improvements in top-1 accuracy with the ShuffleNet V2 (2.0x) network on the CIFAR100 dataset, 2.11% and 1.96% improvements in top-1 accuracy with the ShuffleNet V2 (2.0x) network on the CIFAR10 dataset, and 1.0% and 1.0% improvements in mean average precision (mAP) with the SSD300 model on the Pascal VOC dataset.
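The abstract does not state the functional forms of the two activations. As a rough scalar sketch, assuming ErfAct takes the form x · erf(α e^{βx}) and Pserf takes the form x · erf(γ ln(1 + e^{δx})), the two functions might look like the code below. The function names, the parameter names, and especially the default parameter values are illustrative assumptions, not values taken from the text above; in a real network the parameters would be trainable rather than fixed.

```python
import math


def erfact(x, alpha=0.75, beta=0.75):
    """Assumed form of ErfAct: x * erf(alpha * exp(beta * x)).

    alpha and beta stand in for the trainable parameters; the defaults
    are placeholders chosen for illustration only.
    """
    # Clamp the exponent so math.exp cannot overflow; erf is already
    # saturated at 1.0 long before the clamp takes effect.
    exponent = min(beta * x, 50.0)
    return x * math.erf(alpha * math.exp(exponent))


def pserf(x, gamma=1.25, delta=0.85):
    """Assumed form of Pserf: x * erf(gamma * softplus(delta * x)).

    gamma and delta stand in for the trainable parameters; the defaults
    are placeholders chosen for illustration only.
    """
    z = delta * x
    # Numerically stable softplus: ln(1 + e^z) = max(z, 0) + ln(1 + e^-|z|).
    softplus = max(z, 0.0) + math.log1p(math.exp(-abs(z)))
    return x * math.erf(gamma * softplus)


if __name__ == "__main__":
    for value in (-10.0, -3.0, -1.0, 0.0, 1.0, 10.0):
        print(value, erfact(value), pserf(value))
```

Under these assumed forms, both functions are close to the identity for large positive inputs and close to zero for large negative inputs, with a small negative dip in between. That dip is what makes them non-monotonic, in contrast to ReLU.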
