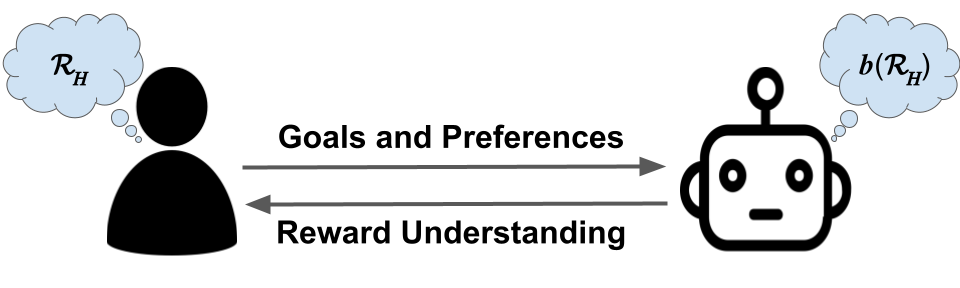

Explainable AI techniques that describe agent reward functions can enhance human-robot collaboration in a variety of settings. Human understanding of agent reward functions is particularly beneficial in the value alignment setting, where an agent aims to infer a human's reward function through interaction so that it can assist the human with their tasks. If the human can see where gaps exist in the agent's understanding of the reward, they can teach more efficiently and effectively, and the human-agent team's performance improves more quickly. To support human collaborators in value alignment and similar contexts, it is first important to understand how effective different reward explanation techniques are across a variety of domains. In this paper, we introduce a categorization of information modalities for reward explanation techniques, suggest a suite of assessment techniques for human reward understanding, and introduce four axes of domain complexity. We then propose an experiment to study the relative efficacy of a broad set of reward explanation techniques, covering multiple modalities of information, in a set of domains of varying complexity.
