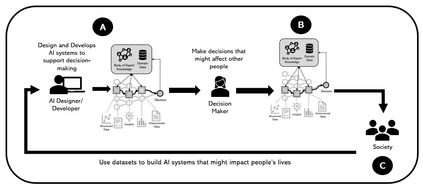

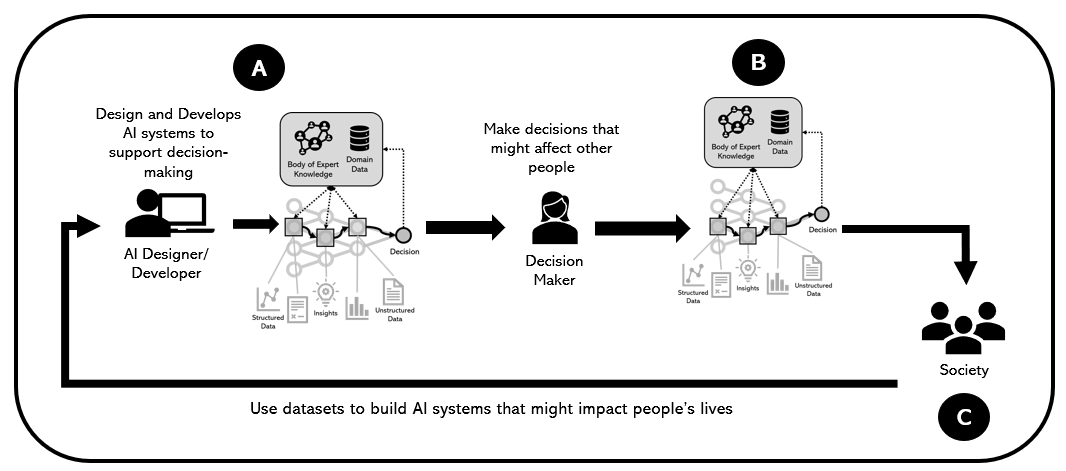

The explanation dimension of Artificial Intelligence (AI)-based systems has been a hot topic in recent years. Different communities have raised concerns about the increasing presence of AI in people's everyday tasks and how it can affect their lives. Much research addresses the interpretability and transparency aspects of explainable AI (XAI), which are usually tied to algorithms and Machine Learning (ML) models. In decision-making scenarios, however, people need a better understanding of how an AI system works and what its outcomes mean in order to build a relationship with that system. Decision-makers in many domains usually need to justify their decisions to others. If a decision is based on or influenced by the outcome of an AI system, an explanation of how the AI reached that result is key to building trust between AI and humans. In this position paper, we discuss the role of XAI in decision-making scenarios, present our vision of decision-making with an AI system in the loop, and explore one case from the literature on how XAI can affect the way people justify their decisions, considering the importance of building the human-AI relationship in those scenarios.
