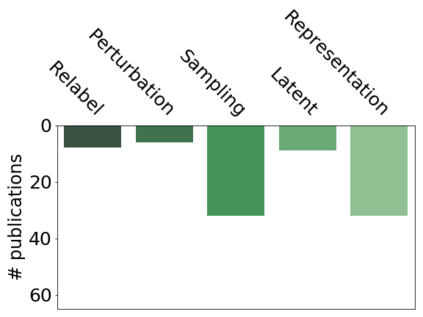

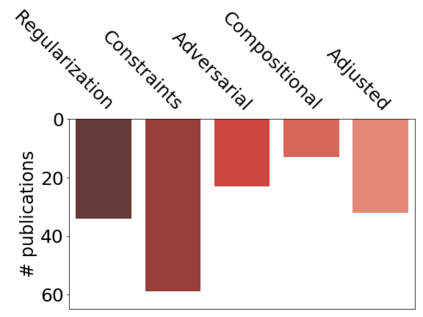

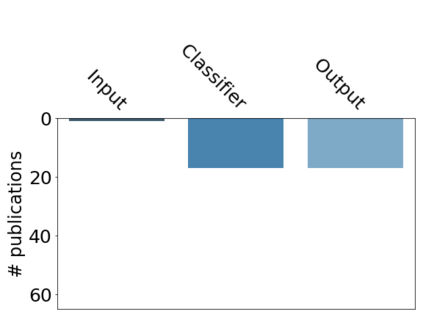

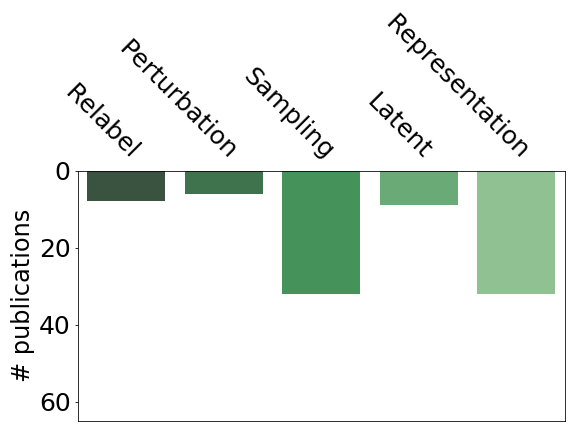

This paper provides a comprehensive survey of bias mitigation methods for achieving fairness in Machine Learning (ML) models. We collect a total of 234 publications concerning bias mitigation for ML classifiers. These methods can be distinguished by their intervention procedure (i.e., pre-processing, in-processing, or post-processing) and by the technology they apply. We investigate how existing bias mitigation methods are evaluated in the literature, considering in particular datasets, metrics, and benchmarking. Based on the gathered insights (e.g., what is the most popular fairness metric? How many datasets are used for evaluating bias mitigation methods?), we hope to support practitioners in making informed choices when developing and evaluating new bias mitigation methods.
