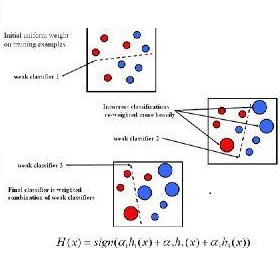

Boosting in supervised learning combines multiple weak classifiers to obtain a stronger classifier, and AdaBoost is reputed to be a perfect example of this approach. This paper analyzes the two-class AdaBoost procedure implemented in scikit-learn. We show that AdaBoost is an algorithm in name only, since the resulting combination of weak classifiers can be calculated explicitly using a truth table. Indeed, by carrying out a logical analysis of the training set in which the weak classifiers are used to construct a truth table, we recover, through an analytical formula, the weights of the combination of weak classifiers obtained by the procedure. We observe that this formula does not give the minimizer of the risk, we provide a system that computes the exact minimizer, and we check that the AdaBoost procedure in scikit-learn does not implement the algorithm described by Freund and Schapire.
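For readers unfamiliar with the procedure under discussion, the weighted combination of weak classifiers can be illustrated with a minimal sketch of textbook discrete two-class AdaBoost in its common ±1 formulation. This is neither the scikit-learn implementation nor the analytical formula of this paper. The toy data `X`, `y`, the fixed pool `stumps`, the helper names `adaboost` and `predict`, and the rule of picking the pool member with the smallest weighted error are all assumptions made for illustration.

```python
import math

def adaboost(X, y, stumps, rounds):
    """Textbook discrete AdaBoost sketch; labels must be in {-1, +1}.

    Returns a list of (alpha, index) pairs, where index points into `stumps`.
    """
    n = len(y)
    w = [1.0 / n] * n  # start from uniform sample weights
    ensemble = []
    for _ in range(rounds):
        # Weighted error of every weak classifier in the (assumed) fixed pool.
        errs = [sum(wi for wi, xi, yi in zip(w, X, y) if h(xi) != yi)
                for h in stumps]
        k = min(range(len(stumps)), key=errs.__getitem__)
        err = errs[k]
        if err <= 0.0 or err >= 0.5:
            break  # perfect or no-better-than-chance weak classifier: stop
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, k))
        # Increase the weight of misclassified samples, decrease the others.
        w = [wi * math.exp(-alpha * yi * stumps[k](xi))
             for wi, xi, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, stumps, x):
    """Sign of the weighted vote of the selected weak classifiers."""
    score = sum(alpha * stumps[k](x) for alpha, k in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical toy problem: no single stump separates the classes.
X = [0, 1, 2, 3, 4, 5]
y = [1, 1, -1, -1, 1, 1]
stumps = [
    lambda x: 1 if x < 1.5 else -1,   # +1 on the left
    lambda x: 1 if x > 3.5 else -1,   # +1 on the right
    lambda x: 1,                      # constant +1
]

ensemble = adaboost(X, y, stumps, rounds=3)
for alpha, k in ensemble:
    print(f"stump {k}: alpha = {alpha:.4f}")
print([predict(ensemble, stumps, x) for x in X])
```

On this toy problem each stump misclassifies two of the six points, yet the weighted vote of all three classifies every training point correctly. The three stump outputs on each sample form exactly the kind of truth-table row the abstract refers to: only three distinct rows occur here.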
