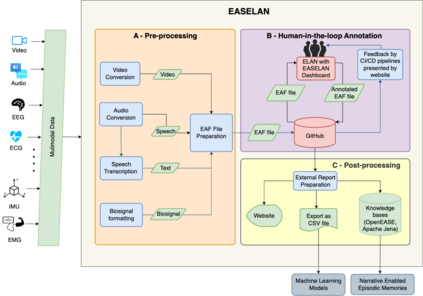

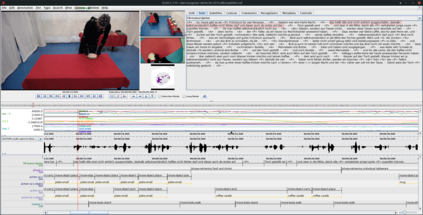

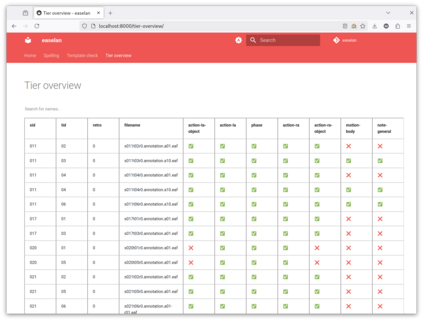

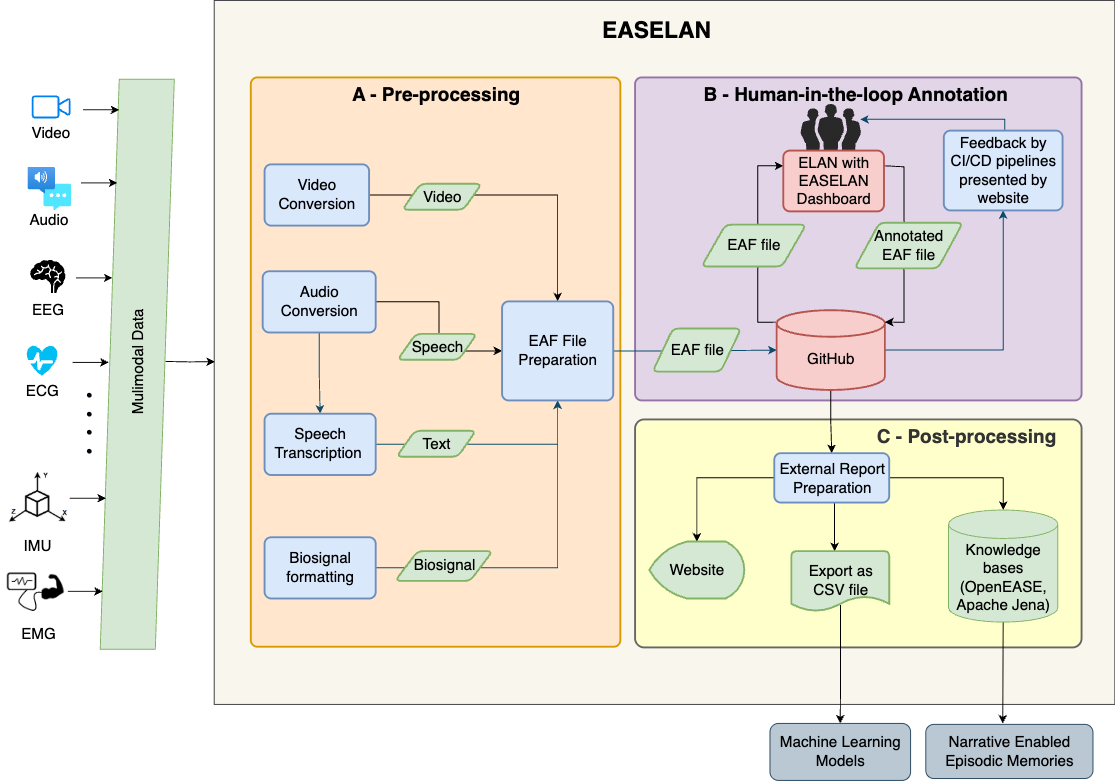

Recent advances in machine learning and adaptive cognitive systems are driving a growing demand for large, richly annotated multimodal data. Fusion models, which increasingly incorporate multiple biosignals in addition to traditional audiovisual channels, are a prominent example of this trend. This paper introduces the EASELAN annotation framework, which is designed to improve annotation workflows in the face of the resulting complexity of multimodal and biosignal datasets. It builds on the robust ELAN tool by adding new components tailored to support all stages of the annotation pipeline: from streamlining the preparation of annotation files, to setting up additional channels and integrating version control with GitHub, to simplifying post-processing. EASELAN delivers a seamless workflow that integrates biosignals and produces rich annotations that can be readily exported for further analysis and machine learning-supported model training. The EASELAN framework has been successfully applied to a high-dimensional biosignal collection initiative on everyday human activities (here, table setting) for cognitive robots within the DFG-funded Collaborative Research Center 1320 Everyday Activity Science and Engineering (EASE). In this paper, we discuss the opportunities, limitations, and lessons learned from using EASELAN in this initiative. To foster research on biosignal collection, annotation, and processing, the EASELAN code is publicly available (https://github.com/cognitive-systems-lab/easelan), along with the fully annotated Table Setting Database produced with EASELAN.
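The export step mentioned above is not specified here, so the following is only a minimal sketch of how time-aligned annotations might be flattened into a table for downstream analysis. It assumes ELAN's standard `.eaf` XML layout (a `TIME_ORDER` block of `TIME_SLOT` elements with millisecond `TIME_VALUE`s, and `TIER` elements containing `ALIGNABLE_ANNOTATION`s). The function names `eaf_to_rows` and `rows_to_csv` and the example tier `Activity` are hypothetical and are not EASELAN's actual API.

```python
import csv
import io
import xml.etree.ElementTree as ET


def eaf_to_rows(eaf_xml: str):
    """Hypothetical helper: flatten time-aligned annotations of an ELAN .eaf
    document into (tier_id, start_ms, end_ms, value) tuples.

    Assumption: only ALIGNABLE_ANNOTATION elements are handled; symbolic
    REF_ANNOTATIONs and time slots without a TIME_VALUE are skipped.
    """
    root = ET.fromstring(eaf_xml)
    # Map TIME_SLOT_ID -> time in milliseconds (unaligned slots lack TIME_VALUE).
    slots = {
        ts.get("TIME_SLOT_ID"): int(ts.get("TIME_VALUE"))
        for ts in root.iter("TIME_SLOT")
        if ts.get("TIME_VALUE") is not None
    }
    rows = []
    for tier in root.iter("TIER"):
        tier_id = tier.get("TIER_ID")
        for ann in tier.iter("ALIGNABLE_ANNOTATION"):
            start = slots.get(ann.get("TIME_SLOT_REF1"))
            end = slots.get(ann.get("TIME_SLOT_REF2"))
            if start is None or end is None:
                continue  # skip annotations that are not fully time-aligned
            value = ann.findtext("ANNOTATION_VALUE", default="")
            rows.append((tier_id, start, end, value))
    # Order chronologically, then by tier, for easy alignment with biosignals.
    rows.sort(key=lambda r: (r[1], r[0]))
    return rows


def rows_to_csv(rows) -> str:
    """Hypothetical helper: serialize the flattened rows as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["tier", "start_ms", "end_ms", "value"])
    writer.writerows(rows)
    return buf.getvalue()


# Tiny illustrative document (made-up labels, not from the Table Setting Database).
EXAMPLE_EAF = """<ANNOTATION_DOCUMENT>
  <TIME_ORDER>
    <TIME_SLOT TIME_SLOT_ID="ts1" TIME_VALUE="0"/>
    <TIME_SLOT TIME_SLOT_ID="ts2" TIME_VALUE="1500"/>
    <TIME_SLOT TIME_SLOT_ID="ts3" TIME_VALUE="4200"/>
  </TIME_ORDER>
  <TIER TIER_ID="Activity">
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a1" TIME_SLOT_REF1="ts1" TIME_SLOT_REF2="ts2">
        <ANNOTATION_VALUE>grasp</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a2" TIME_SLOT_REF1="ts2" TIME_SLOT_REF2="ts3">
        <ANNOTATION_VALUE>carry</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
  </TIER>
</ANNOTATION_DOCUMENT>"""

if __name__ == "__main__":
    print(rows_to_csv(eaf_to_rows(EXAMPLE_EAF)))
```

A flat (tier, start, end, label) table is used here because it joins easily with timestamped biosignal streams; a real pipeline would also need to handle symbolic tiers and media offsets, which this sketch ignores.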