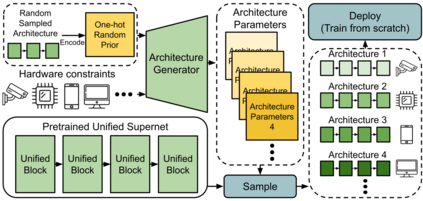

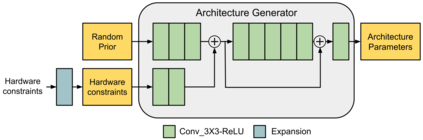

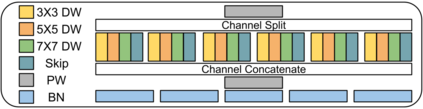

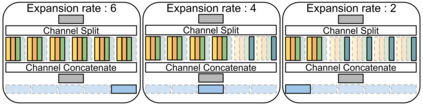

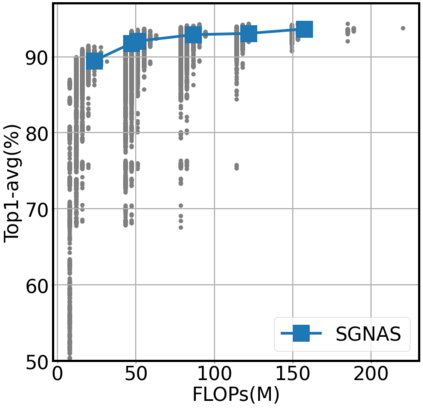

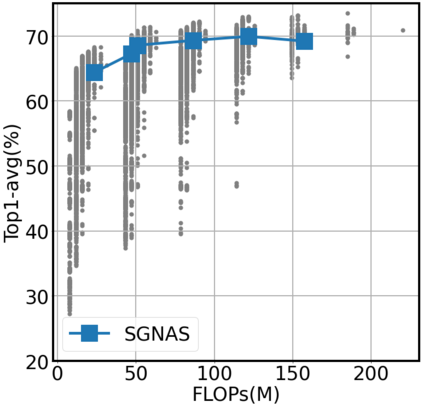

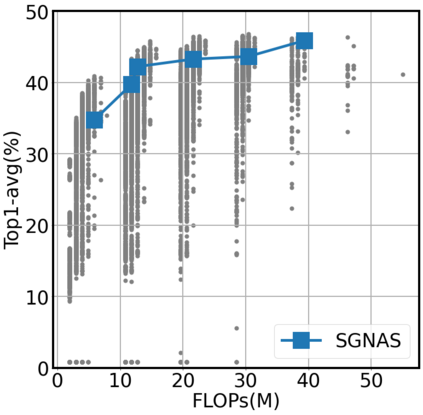

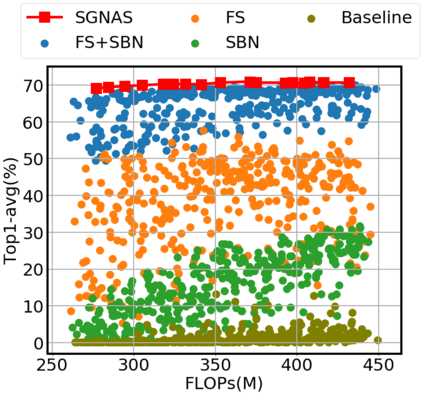

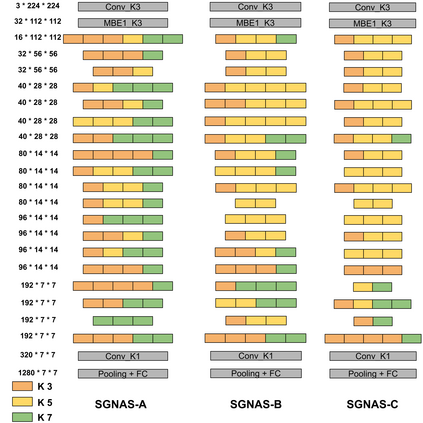

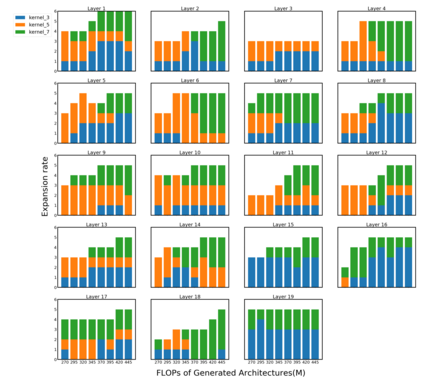

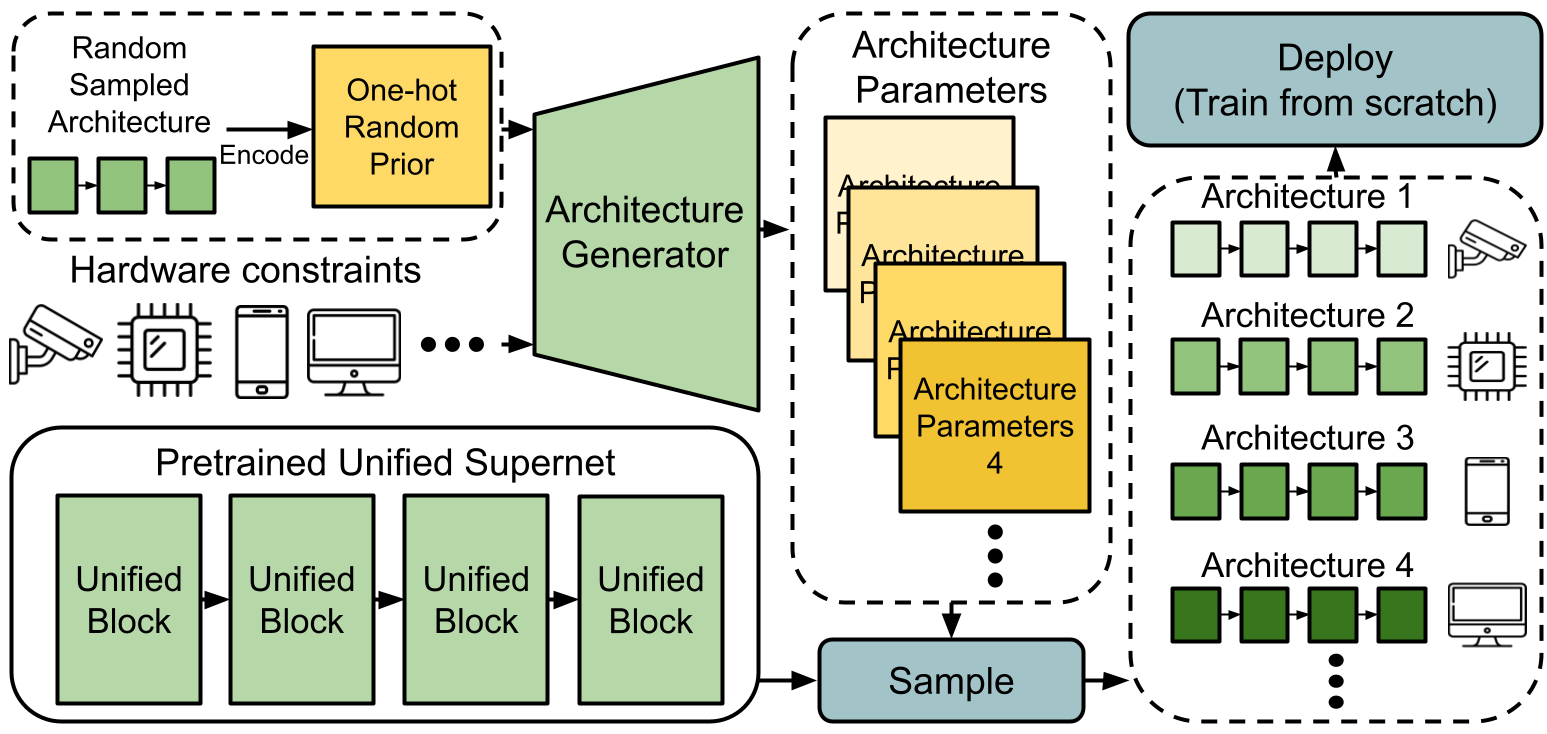

In one-shot NAS, sub-networks must be searched from the supernet to meet different hardware constraints. However, the search cost is high, and $N$ separate searches are needed for $N$ different constraints. In this work, we propose a novel search strategy, called the architecture generator, that searches sub-networks by generating them, making the search process much more efficient and flexible. Given target hardware constraints as input, the trained architecture generator can produce $N$ good architectures for $N$ constraints in a single forward pass, without re-searching or retraining the supernet. Moreover, we propose a novel single-path supernet, called the unified supernet, to further improve search efficiency and reduce the GPU memory consumption of the architecture generator. With the architecture generator and the unified supernet, we propose a flexible and efficient one-shot NAS framework, called Searching by Generating NAS (SGNAS). With the pre-trained supernet, the search time of SGNAS for $N$ different hardware constraints is only 5 GPU hours, which is $4N$ times faster than previous SOTA single-path methods. After training from scratch, the top-1 accuracy of SGNAS on ImageNet is 77.1%, which is comparable with the SOTAs. The code is available at: https://github.com/eric8607242/SGNAS.
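To make the "searching by generating" idea concrete, the following is a minimal toy sketch and not the SGNAS implementation. It assumes a hypothetical generator made of one untrained linear scorer per layer and operation, a hypothetical four-layer search space (`NUM_LAYERS`, `CANDIDATE_OPS`), and hardware constraints already normalised to the range [0, 1]. It only illustrates that, once a generator exists, $N$ constraints map to $N$ architectures by evaluating the generator once per constraint, with no per-constraint search loop.

```python
import random

# Hypothetical search space, chosen only for illustration.
NUM_LAYERS = 4
CANDIDATE_OPS = ["skip", "conv3x3", "conv5x5", "conv7x7"]


def make_generator(seed=0):
    """Return untrained toy weights: one (w, b) pair per layer and candidate op."""
    rng = random.Random(seed)
    return [
        [(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)) for _ in CANDIDATE_OPS]
        for _ in range(NUM_LAYERS)
    ]


def generate(generator, constraints):
    """Map each normalised constraint to one architecture (a list of op names)."""
    architectures = []
    for c in constraints:
        arch = []
        for layer in generator:
            # Score every candidate op for this layer, conditioned on the constraint.
            logits = [w * c + b for (w, b) in layer]
            # Pick the highest-scoring op; a real generator would be trained first.
            arch.append(CANDIDATE_OPS[logits.index(max(logits))])
        architectures.append(arch)
    return architectures


targets = [0.2, 0.5, 0.9]  # three hypothetical normalised hardware constraints
archs = generate(make_generator(), targets)
for target, arch in zip(targets, archs):
    print(target, arch)
```

Because the weights here are random, the generated architectures are meaningless; in the framework described above, the generator would be trained against the supernet so that its outputs are both accurate and within the requested constraint.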
