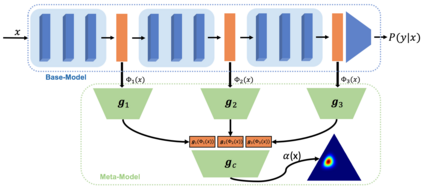

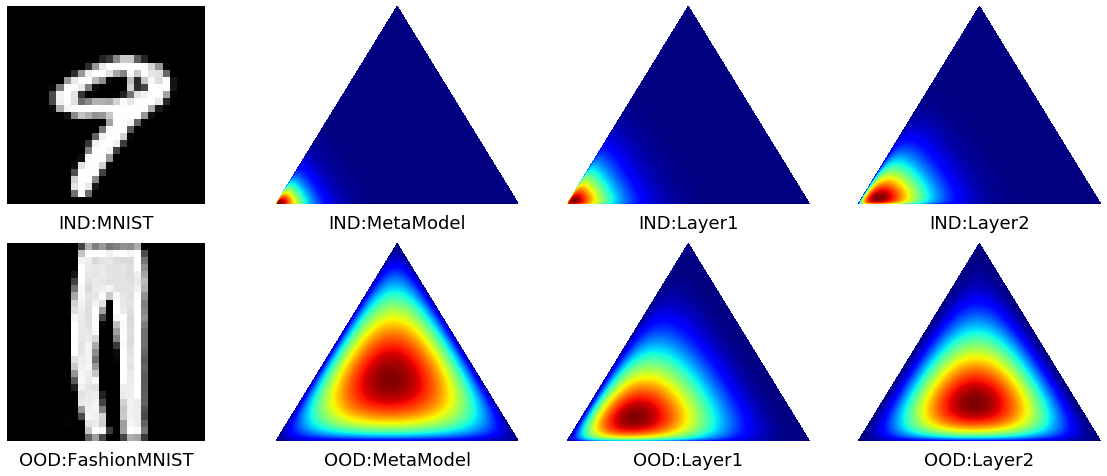

Neural networks are known to be over-confident when their output label distribution is used directly as an uncertainty measure. Existing methods mainly address this by retraining the entire model to add uncertainty quantification capability, so that the learned model achieves the desired performance in accuracy and uncertainty prediction simultaneously. However, training a model from scratch is computationally expensive and may not be feasible in many situations. In this work, we consider a more practical post-hoc uncertainty learning setting in which a well-trained base model is given, and we focus on the uncertainty quantification task in a second stage of training. We propose a novel Bayesian meta-model that augments pre-trained models with better uncertainty quantification abilities and is both effective and computationally efficient. The proposed method requires no additional training data and is flexible enough to quantify different types of uncertainty and to adapt easily to different application settings, including out-of-domain data detection, misclassification detection, and trustworthy transfer learning. We demonstrate the flexibility and superior empirical performance of the proposed meta-model approach on these applications across multiple representative image classification benchmarks.
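To make the over-confidence issue concrete, the following minimal sketch computes two common uncertainty measures taken directly from a classifier's output label distribution: one minus the maximum softmax probability, and the predictive entropy. It illustrates only the baseline practice the abstract criticizes, not the proposed Bayesian meta-model. The function names and the example logits are hypothetical and chosen purely for illustration.

```python
import math


def softmax(logits):
    """Convert raw logits to a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def max_prob_uncertainty(probs):
    """Uncertainty as one minus the largest class probability."""
    return 1.0 - max(probs)


def entropy(probs):
    """Shannon entropy (in nats) of the predicted label distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# Hypothetical logits: one dominated by a single class, one evenly split.
examples = {
    "large-margin logits": [12.0, 1.0, 0.5],
    "uniform logits": [1.0, 1.0, 1.0],
}

for name, logits in examples.items():
    probs = softmax(logits)
    print(name, max_prob_uncertainty(probs), entropy(probs))
```

A large logit margin drives both measures toward zero regardless of whether the prediction is actually correct, which is why these scores alone can be a poor proxy for uncertainty on inputs such as out-of-domain data.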
