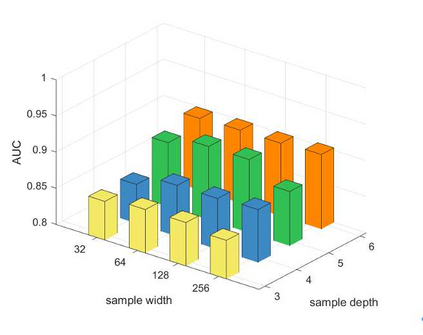

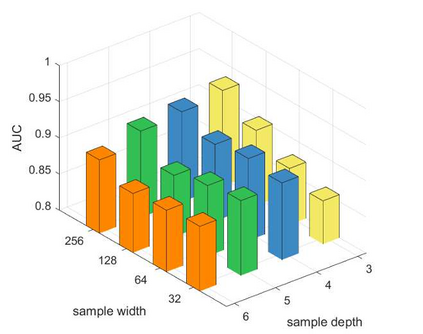

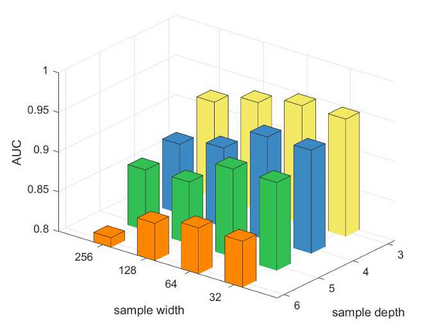

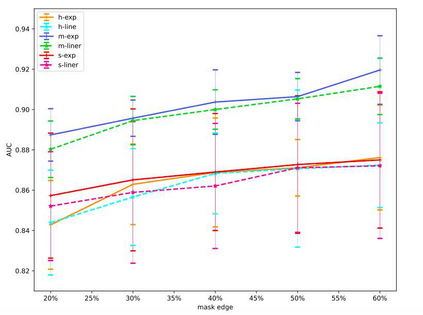

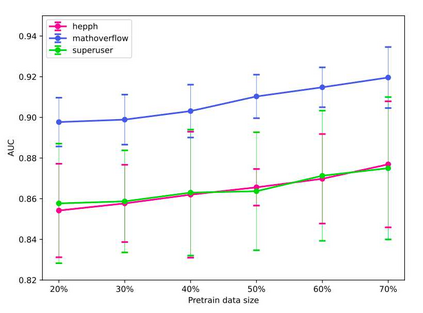

Pre-training a graph neural network model with self-supervised methods lets it learn the general features of large-scale networks, or of networks of the same type, so that the model remains usable even when node labels are missing. However, existing pre-training methods do not take network evolution into account. This paper proposes a pre-training method for dynamic graph neural networks (PT-DGNN), which uses a dynamic attributed graph generation task to learn the structural, semantic, and evolutionary features of a graph simultaneously. The method consists of two steps: 1) dynamic sub-graph sampling, and 2) pre-training with a dynamic attributed graph generation task. Comparative experiments on three real-world dynamic network datasets show that the proposed method achieves the best results on the link prediction fine-tuning task.
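The two steps can be illustrated with a small sketch on a timestamped edge list. It is not the authors' implementation. The abstract does not specify the sampling distribution or how generation targets are chosen, so two things here are assumptions: the recency weighting `exp(-decay * age)` used to prefer neighbors reached through newer edges, and the choice of the most recent edges in the sampled sub-graph as generation targets. The function names `sample_dynamic_subgraph` and `split_for_generation` and the parameters `decay` and `target_ratio` are hypothetical.

```python
import math
import random
from collections import defaultdict


def build_adjacency(edges):
    """Map each node to a list of (neighbor, timestamp) pairs, treating edges as undirected."""
    adj = defaultdict(list)
    for u, v, t in edges:
        adj[u].append((v, t))
        adj[v].append((u, t))
    return adj


def sample_dynamic_subgraph(edges, seed_node, num_nodes, decay=3.0, rng=None):
    """Hypothetical recency-biased sampler (assumption, not the paper's exact algorithm).

    Grows a node set from seed_node. Each unsampled neighbor is weighted by
    exp(-decay * age) of its most recent connecting edge, where age is the
    edge's distance from the newest timestamp, normalized to [0, 1].
    """
    rng = rng or random.Random(0)
    adj = build_adjacency(edges)
    times = [t for _, _, t in edges]
    t_min, t_max = min(times), max(times)
    span = max(t_max - t_min, 1)

    sampled = {seed_node}
    while len(sampled) < num_nodes:
        weights = {}
        for node in sampled:
            for nbr, t in adj[node]:
                if nbr in sampled:
                    continue
                w = math.exp(-decay * (t_max - t) / span)
                weights[nbr] = max(weights.get(nbr, 0.0), w)
        if not weights:
            break  # the seed's connected component has been exhausted
        candidates = sorted(weights)  # fixed order keeps sampling reproducible
        chosen = rng.choices(candidates, weights=[weights[c] for c in candidates])[0]
        sampled.add(chosen)

    # Keep the induced subgraph: edges whose endpoints were both sampled.
    sub_edges = [(u, v, t) for u, v, t in edges if u in sampled and v in sampled]
    return sampled, sub_edges


def split_for_generation(sub_edges, target_ratio=0.2):
    """Hypothetical pre-training split (assumption): the most recent edges become
    generation targets, and the earlier edges form the observed context."""
    ordered = sorted(sub_edges, key=lambda e: e[2])
    if not ordered:
        return [], []
    n_target = max(1, int(len(ordered) * target_ratio))
    cut = len(ordered) - n_target
    return ordered[:cut], ordered[cut:]


if __name__ == "__main__":
    # Toy timestamped edge list: (source, target, timestamp).
    edges = [(0, 1, 1), (1, 2, 2), (2, 3, 3), (0, 2, 4),
             (3, 4, 5), (1, 4, 6), (4, 5, 7), (5, 6, 8)]
    nodes, sub = sample_dynamic_subgraph(edges, seed_node=0, num_nodes=5)
    context, targets = split_for_generation(sub)
    print("sampled nodes:", sorted(nodes))
    print("context edges:", context)
    print("target edges:", targets)
```

Biasing the sampler toward recent edges and holding out the latest edges as targets is one simple way to expose a model to network evolution during pre-training. A GNN would then be trained to generate the target edges (and, in an attributed setting, node attributes) from the context; that model and its loss are left out of this sketch.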

翻译:关于图形神经网络模型的培训前可以通过自我监督的方法了解同类大型网络或网络的一般特点,这样即使缺少节点标签,也能够使模型发挥作用;但是,现有的培训前方法没有考虑到网络的演变情况;本文件提议了动态图形神经网络的培训前方法(PT-DGNN),该方法使用动态的图形生成任务,同时学习图形的结构、语义和演变特点。该方法包括两个步骤:1)动态子图抽样,2)预先培训,并进行动态的图表生成任务。关于三个现实的动态网络数据集的比较实验表明,拟议方法在链接预测微调任务上取得了最佳结果。