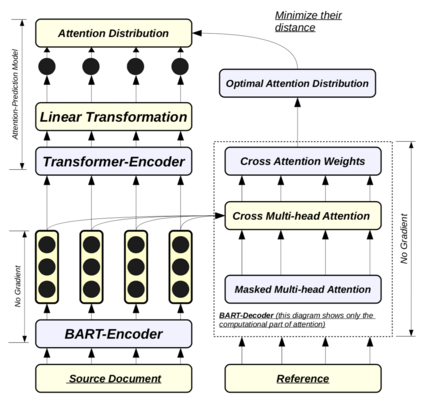

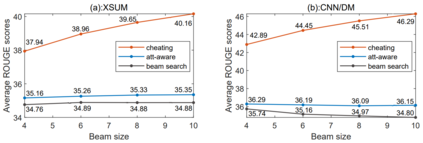

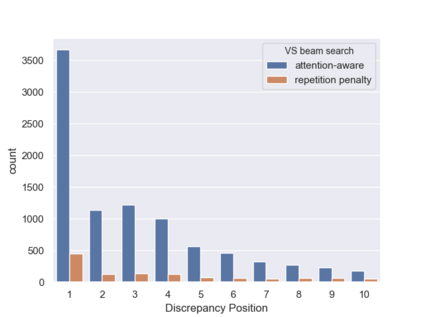

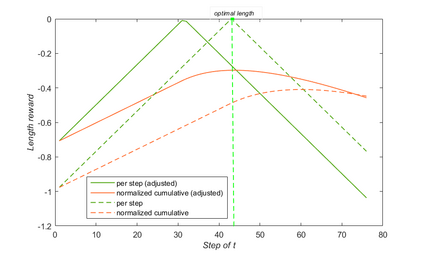

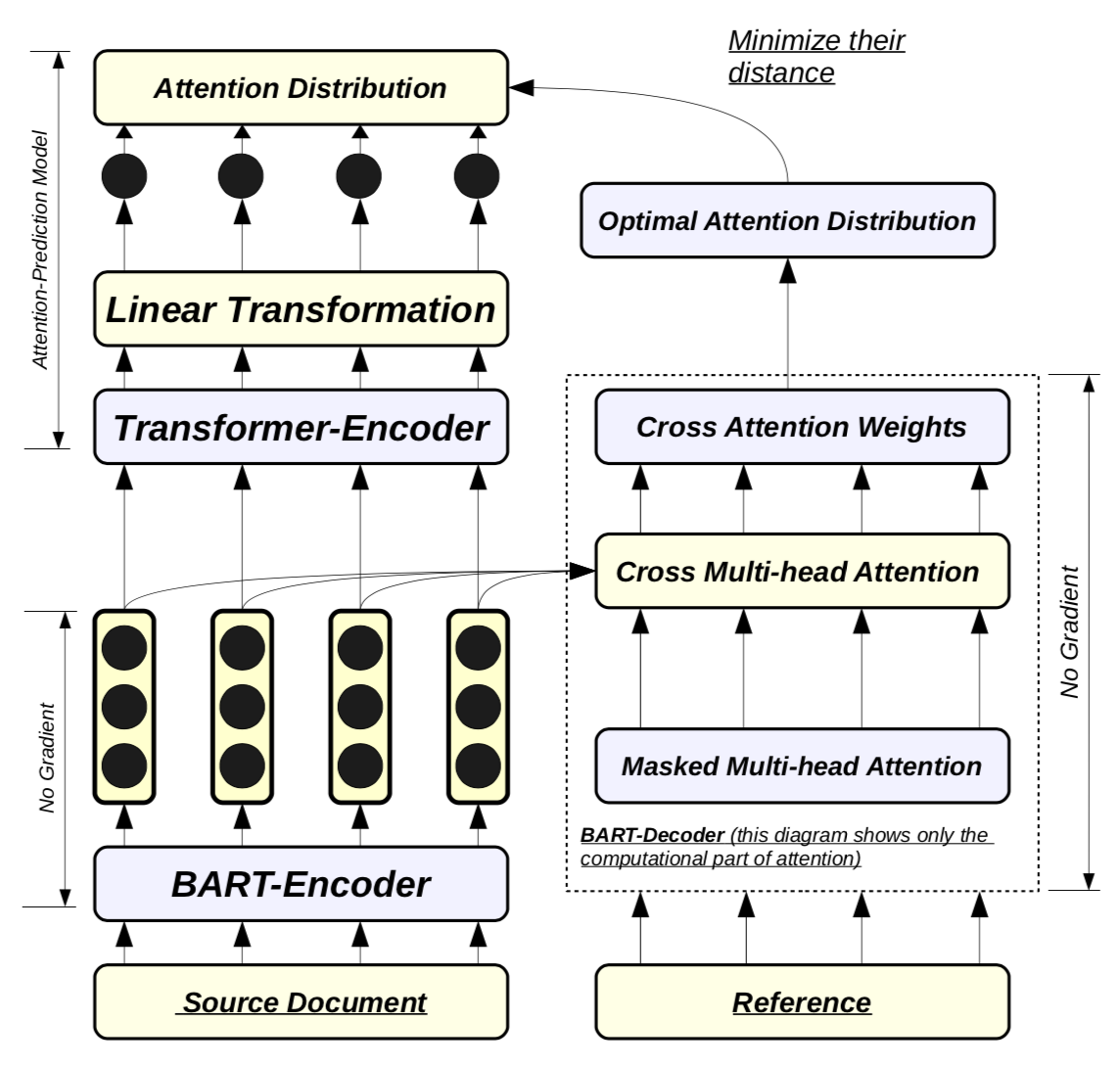

This paper proposes a novel inference algorithm for sequence-to-sequence models. It corrects beam search step by step using the optimal attention distribution, so that the generated text attends to source tokens in a controlled way. Experiments show that the proposed attention-aware inference produces summaries that differ considerably from those of beam search, and that it achieves promising improvements, yielding higher scores and more concise summaries. The algorithm also proves robust: it still significantly outperforms beam search even when the attention distributions are corrupted.
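The abstract does not say how the step-by-step correction is computed. As a rough illustration only, the sketch below assumes that a target ("optimal") attention distribution is given for every decoding step, and that each hypothesis's score is penalised by the KL divergence between that target and the decoder's own attention, weighted by a factor `lam`. The KL penalty, `lam`, and the names `attention_penalty`, `attention_aware_beam_search` and `toy_step` are hypothetical choices for this example, not the paper's method; setting `lam=0` recovers plain beam search.

```python
import math
from typing import Callable, List, Sequence, Tuple

Prefix = Tuple[int, ...]
# Hypothetical decoder interface: given a prefix, return (log-probs over the
# vocabulary, attention distribution over source tokens) for the next step.
StepFn = Callable[[Prefix], Tuple[List[float], List[float]]]


def attention_penalty(model_attn: Sequence[float], target_attn: Sequence[float],
                      eps: float = 1e-9) -> float:
    """KL(target || model): how far the decoder's attention strays from the target."""
    return sum(t * math.log((t + eps) / (m + eps))
               for t, m in zip(target_attn, model_attn))


def attention_aware_beam_search(step_fn: StepFn,
                                target_attns: Sequence[Sequence[float]],
                                beam_size: int, eos: int,
                                lam: float = 1.0) -> Tuple[Prefix, float]:
    """Beam search whose scores are corrected at every step by an attention penalty.

    target_attns[t] is the (assumed given) target attention for step t, so the
    output has at most len(target_attns) tokens. lam=0 gives plain beam search.
    """
    beams: List[Tuple[Prefix, float]] = [((), 0.0)]
    finished: List[Tuple[Prefix, float]] = []
    for target in target_attns:
        candidates = []
        for prefix, score in beams:
            log_probs, attn = step_fn(prefix)
            # Penalise hypotheses whose current attention deviates from the target.
            penalty = lam * attention_penalty(attn, target)
            for tok, lp in enumerate(log_probs):
                candidates.append((prefix + (tok,), score + lp - penalty))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates:
            if prefix[-1] == eos:
                finished.append((prefix, score))  # completed hypothesis
            else:
                beams.append((prefix, score))
                if len(beams) == beam_size:
                    break
        if not beams:
            break
    finished.extend(beams)  # unfinished hypotheses kept at the length limit
    return max(finished, key=lambda c: c[1])


def toy_step(prefix: Prefix) -> Tuple[List[float], List[float]]:
    # Toy decoder. Vocabulary: 0 = <eos>, 1 = "a", 2 = "b"; two source tokens.
    if not prefix:
        probs, attn = [0.05, 0.55, 0.40], [0.5, 0.5]
    elif prefix[-1] == 1:
        probs, attn = [0.9, 0.05, 0.05], [0.9, 0.1]  # after "a": attends to source 0
    else:
        probs, attn = [0.9, 0.05, 0.05], [0.1, 0.9]  # after "b": attends to source 1
    return [math.log(p) for p in probs], attn


targets = [[0.5, 0.5], [0.1, 0.9]]
print(attention_aware_beam_search(toy_step, targets, beam_size=2, eos=0, lam=0.0)[0])  # (1, 0)
print(attention_aware_beam_search(toy_step, targets, beam_size=2, eos=0, lam=1.0)[0])  # (2, 0)
```

In the toy run, plain beam search picks the more probable token "a", whereas the attention-corrected search prefers "b" because its attention matches the target at the second step. This mirrors, in miniature, the abstract's point that attention-aware inference can produce different outputs from beam search.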
