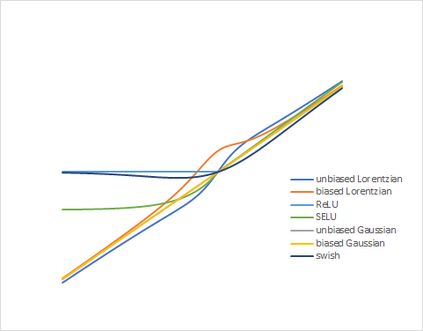

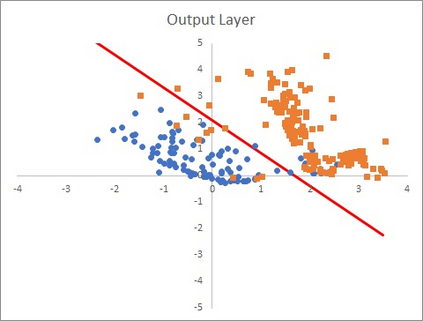

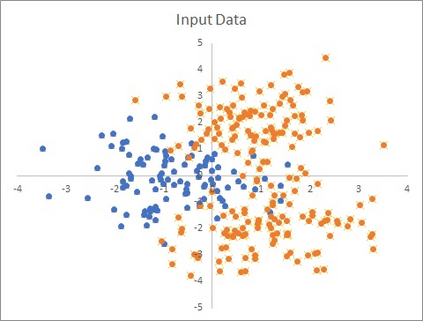

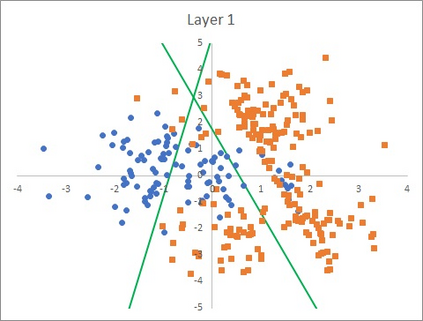

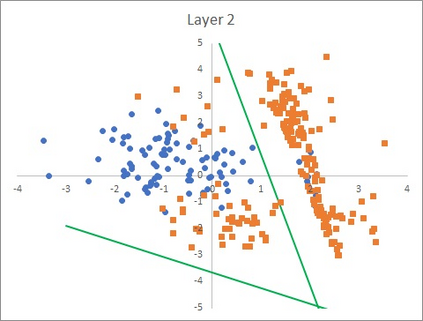

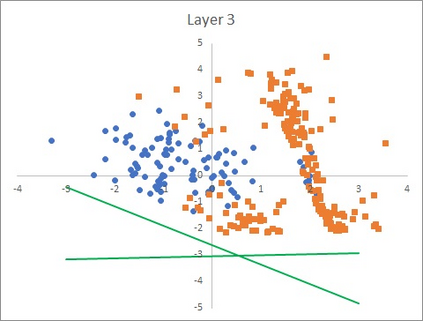

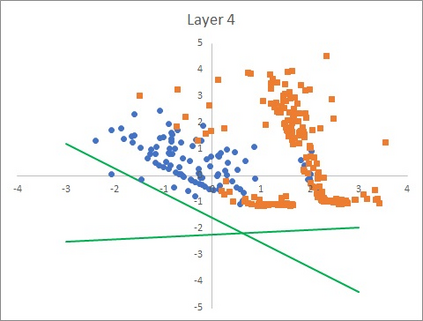

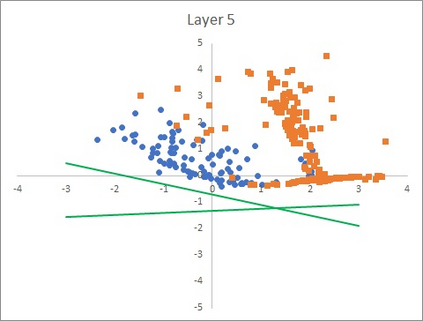

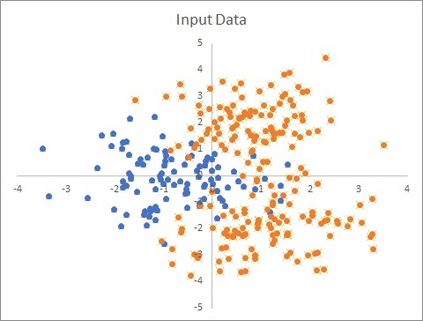

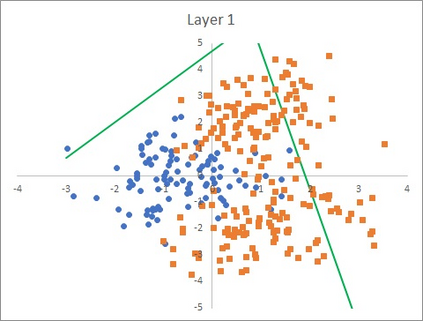

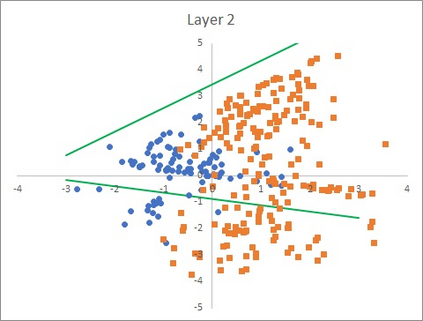

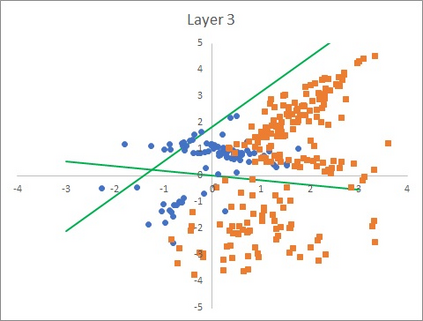

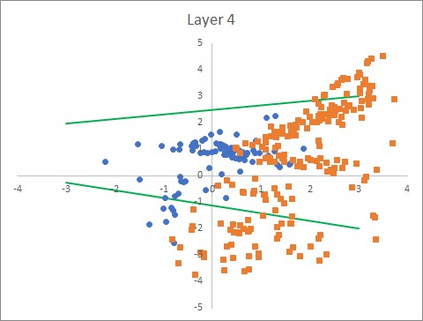

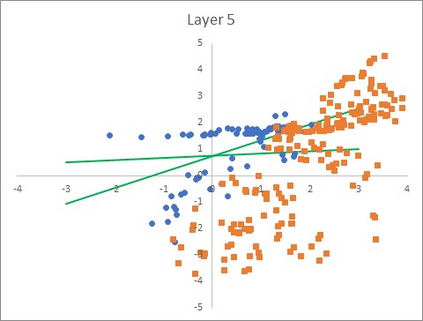

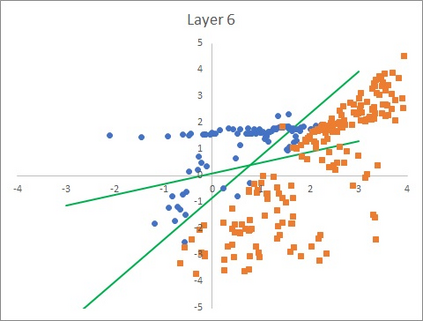

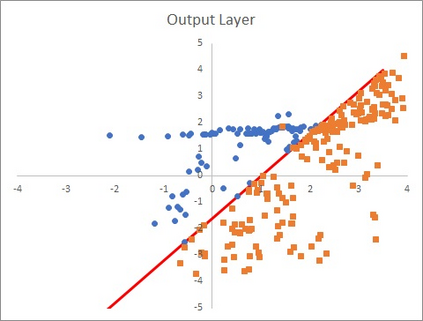

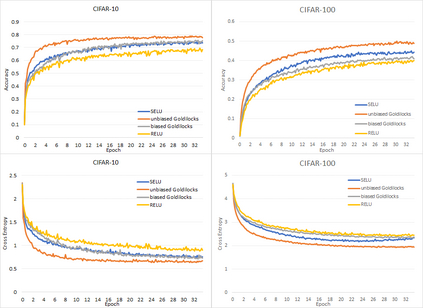

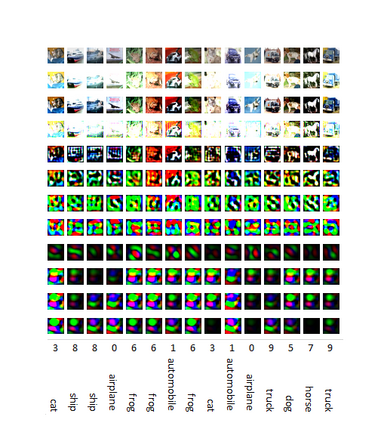

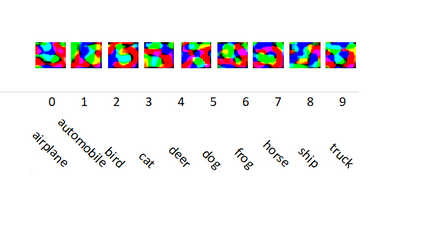

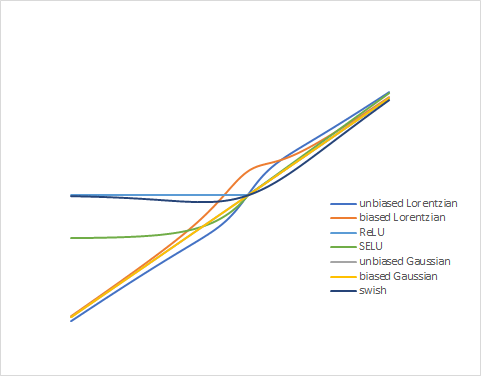

We introduce the new "Goldilocks" class of activation functions, which deform the input signal non-linearly only locally, that is, only when the input signal lies in the appropriate range. Because the deformation is small and local, it becomes easier to understand how and why the signal is transformed as it passes through the layers. Numerical results on the CIFAR-10 and CIFAR-100 data sets show that Goldilocks networks perform better than, or comparably to, SELU and ReLU, while making the deformation of the data through the layers tractable.
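The abstract does not give the functional form of a Goldilocks activation. The sketch below therefore uses an assumed form to illustrate the idea of a purely local non-linear deformation. The function `goldilocks_like` and its parameters `alpha` and `sigma` are hypothetical, not the authors' definition. They implement g(x) = x + α·sin(x)·exp(−x²/(2σ²)), which is non-linear near zero and approaches the identity for large |x|. The helper `max_deformation` is likewise a hypothetical per-layer measure of how far the activation moves its inputs.

```python
import math


def goldilocks_like(x: float, alpha: float = 0.5, sigma: float = 1.0) -> float:
    """Hypothetical locally deforming activation (NOT the paper's definition).

    g(x) = x + alpha * sin(x) * exp(-x^2 / (2 * sigma^2))
    The bump term matters only for |x| within a few sigma of 0; outside that
    window it decays like a Gaussian, so g(x) stays close to the identity.
    """
    bump = alpha * math.sin(x) * math.exp(-x * x / (2.0 * sigma * sigma))
    return x + bump


def apply_layer(values, **kwargs):
    """Apply the activation elementwise to a list of pre-activations."""
    return [goldilocks_like(v, **kwargs) for v in values]


def max_deformation(values, **kwargs):
    """Largest |g(x) - x| over the inputs: a simple per-layer deformation measure."""
    return max(abs(goldilocks_like(v, **kwargs) - v) for v in values)


if __name__ == "__main__":
    inputs = [-6.0, -1.0, 0.0, 1.0, 6.0]
    for v in inputs:
        y = goldilocks_like(v)
        print(f"x={v:+.1f}  g(x)={y:+.4f}  deformation={y - v:+.4f}")
    print("max deformation in layer:", max_deformation(inputs))
```

With these assumed defaults, inputs far from zero (here ±6) pass through essentially unchanged. Inputs near ±1 are shifted by about 0.26. This kind of bounded, local deformation is what makes layer-by-layer tracking of the transformation straightforward.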
