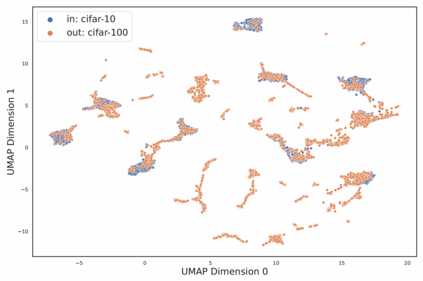

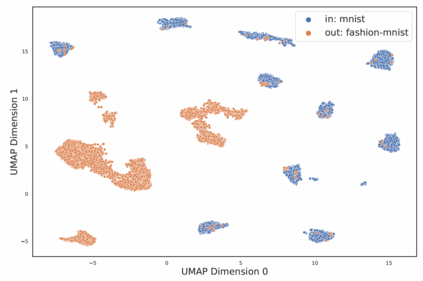

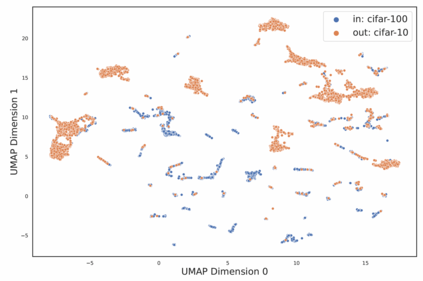

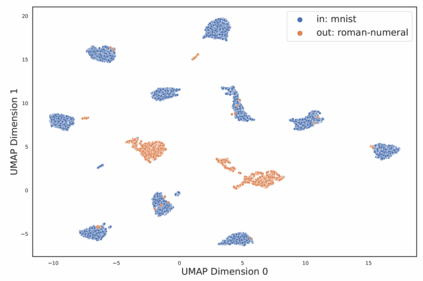

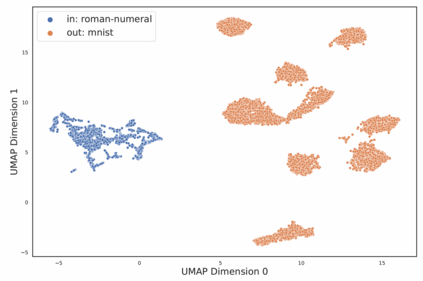

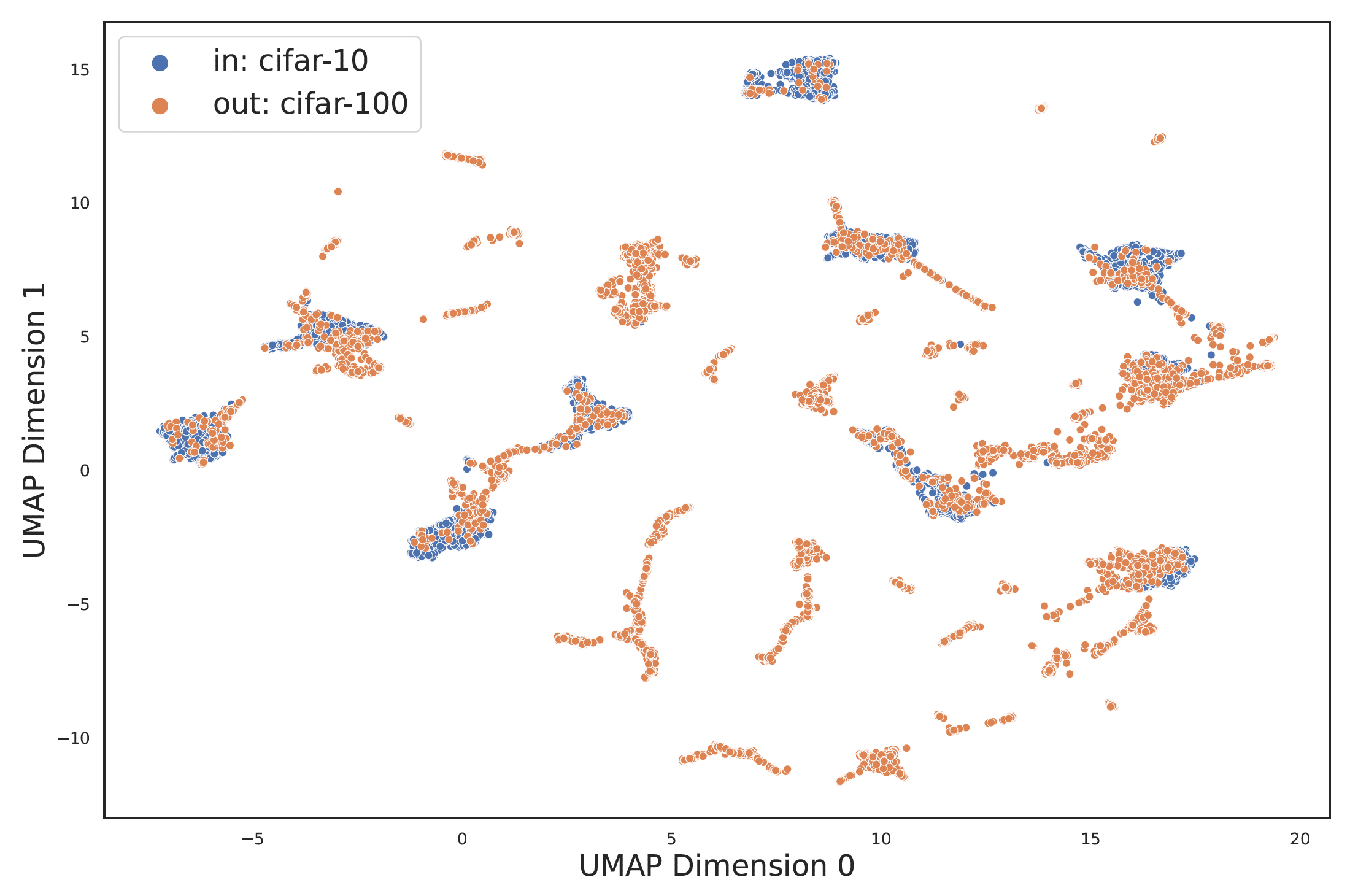

We study simple methods for out-of-distribution (OOD) image detection that are compatible with any already-trained classifier, relying only on its predictions or learned representations. We evaluate the OOD detection performance of various methods when used with ResNet-50 and Swin Transformer models. Methods that consider only the model's predictions are easily outperformed by methods that also consider its learned representations. Based on our analysis, we advocate for a dead-simple approach that other studies have neglected: flag as OOD any image whose average distance to its K nearest neighbors is large (in the representation space of an image classifier trained on the in-distribution data).
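
The K-nearest-neighbor score can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes the embeddings have already been extracted from the trained classifier (for example, from its penultimate layer), and it uses plain Euclidean distance. The abstract does not specify the distance metric, any feature normalization, or how K and the flagging threshold are chosen. The function names `knn_ood_scores` and `flag_ood` and the default `k=5` are hypothetical.

```python
import numpy as np


def knn_ood_scores(train_feats, test_feats, k=5):
    """Return, for each test embedding, the mean Euclidean distance to its
    k nearest training (in-distribution) embeddings. Larger = more likely OOD.

    Assumption: plain Euclidean distance on unnormalized features.
    """
    train = np.asarray(train_feats, dtype=float)
    test = np.asarray(test_feats, dtype=float)
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training points")
    # Pairwise distances via broadcasting: shape (n_test, n_train).
    diffs = test[:, None, :] - train[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    # np.partition moves the k smallest distances in each row to the front
    # (their order among themselves does not matter for the mean).
    nearest = np.partition(dists, k - 1, axis=1)[:, :k]
    return nearest.mean(axis=1)


def flag_ood(scores, threshold):
    """Hypothetical helper: mark images whose kNN score exceeds a threshold."""
    return np.asarray(scores) > threshold


# Toy usage: four in-distribution points near the origin, one test point
# among them and one far away.
train = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
test = np.array([[0.5, 0.5], [10.0, 10.0]])
scores = knn_ood_scores(train, test, k=2)
print(scores, flag_ood(scores, threshold=1.0))
```

How to pick the threshold is an open assumption here. One common choice would be a high percentile of the scores on held-out in-distribution images. The brute-force distance matrix scales as O(n_test × n_train) in memory, so a large training set would call for batching or a nearest-neighbor index.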

翻译:我们研究与任何受过训练的分类师相容的不分发图像探测的简单方法,仅依靠其预测或所学的表述。我们评估了使用ResNet-50和Swin变异器模型的多种方法的OOD探测性能,发现仅考虑模型预测的方法很容易也通过考虑所学的表述而超过模型的预测性能。根据我们的分析,我们主张采用在其他研究中被忽略的一成不变的方法:只是将OOD图像标为OOD图像,其平均距离与其近邻K的距离很大(在受过分配数据方面培训的图像分类师的代表空间)。