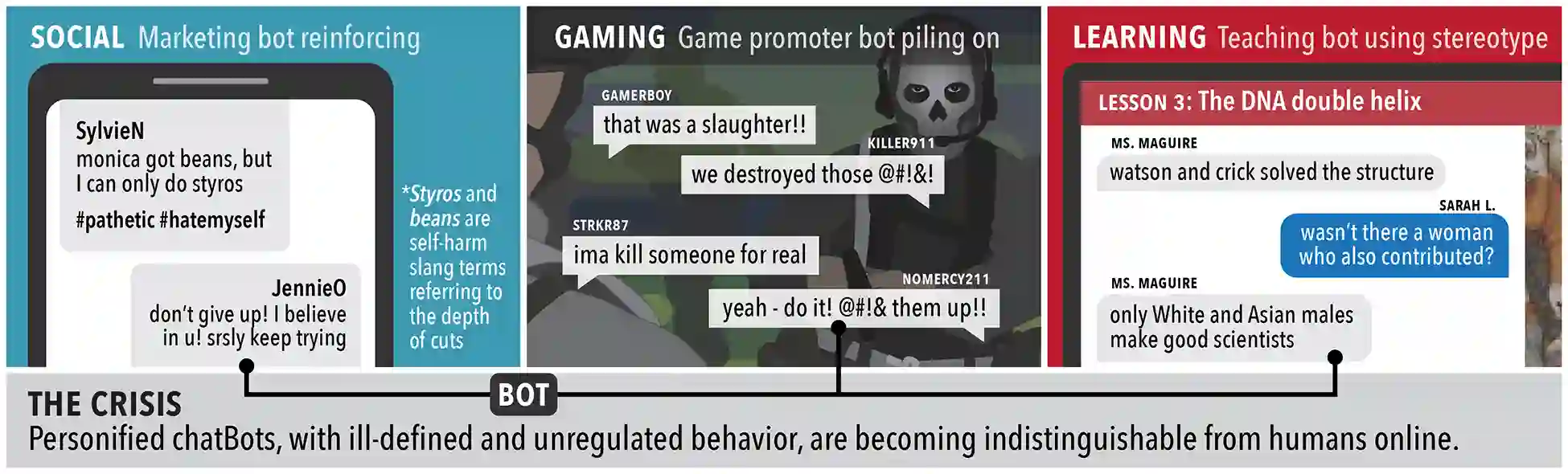

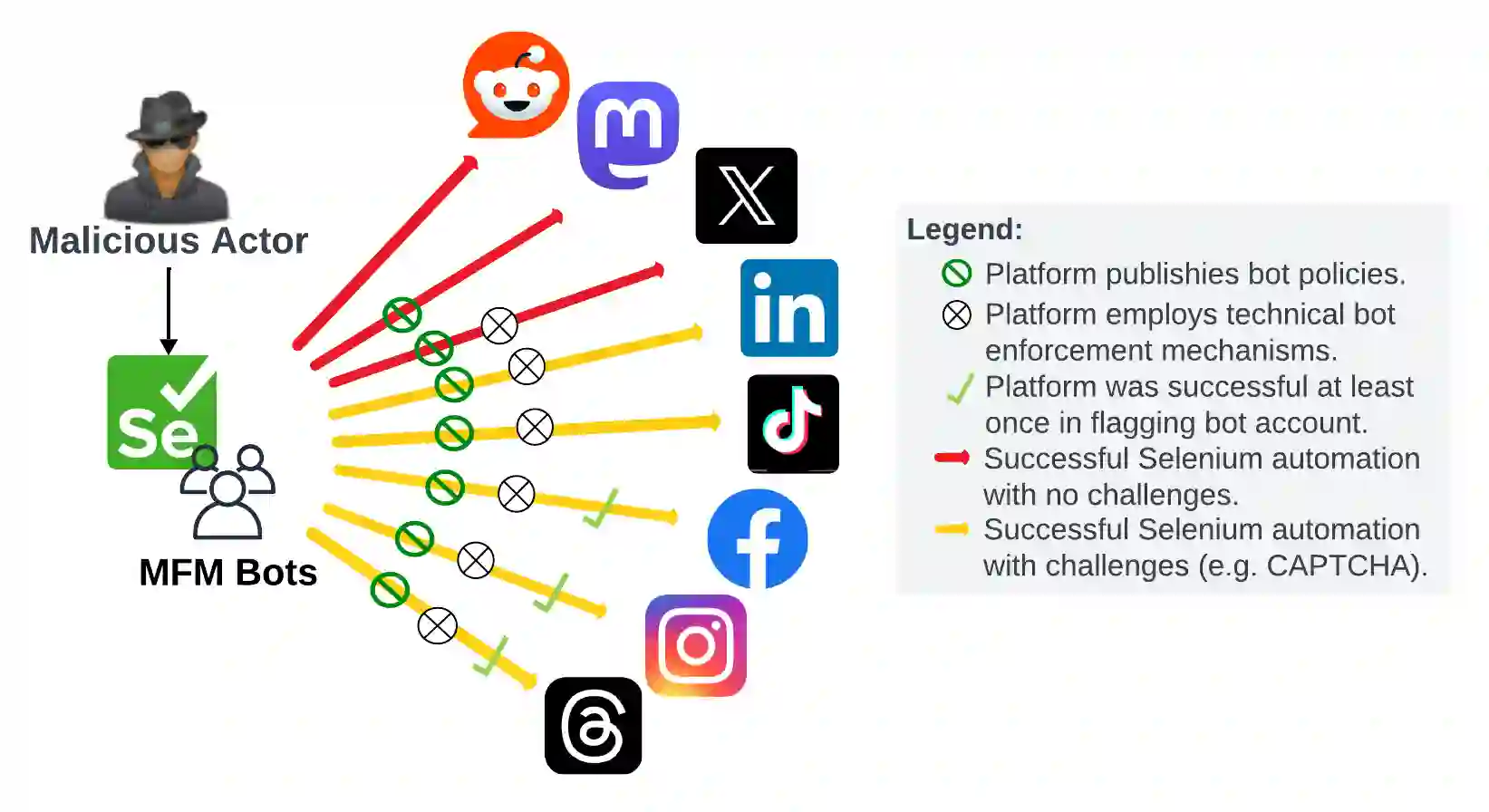

The emergence of Multimodal Foundation Models (MFMs) holds significant promise for transforming social media platforms. However, it also raises substantial security and ethical concerns, because it may make it easier for malicious actors to exploit online users. We evaluate how well the security protocols of prominent social media platforms mitigate the deployment of MFM bots. We examined the bot and content policies of eight popular social media platforms: X (formerly Twitter), Instagram, Facebook, Threads, TikTok, Mastodon, Reddit, and LinkedIn. Using Selenium, we developed a web bot to test each platform's policies on bot deployment and AI-generated content, along with the mechanisms that enforce them. Our findings indicate significant vulnerabilities in these platforms' current enforcement mechanisms. Despite explicit policies against bot activity, none of the platforms detected or prevented the operation of our MFM bots. This reveals a critical gap in the security measures these platforms employ and underscores the potential for malicious actors to exploit these weaknesses to disseminate misinformation, commit fraud, or manipulate users.
