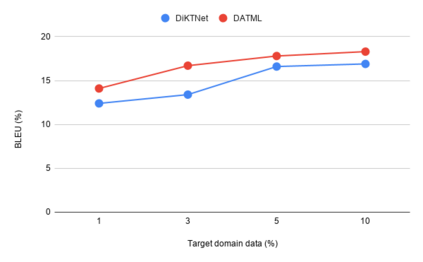

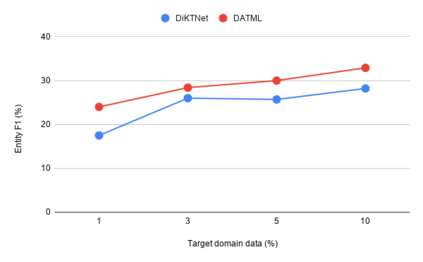

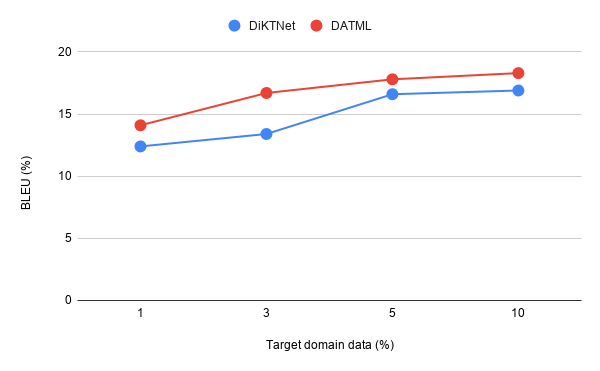

Current generative dialogue systems are data-hungry and fail to adapt to unseen domains when only a small amount of target data is available. Moreover, most domains in real-world applications are underrepresented, so there is a need for a system that can generalize to these domains from minimal data. In this paper, we propose DATML, a method that adapts to unseen domains by combining transfer learning and meta-learning. DATML improves on the previous state-of-the-art dialogue model, DiKTNet, by introducing a different learning technique: meta-learning. We use Reptile, a first-order optimization-based meta-learning algorithm, as our improved training method. We evaluate our model on the MultiWOZ dataset and outperform DiKTNet in both BLEU and Entity F1 scores when the same amount of data is available.
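To make the Reptile update concrete, here is a minimal sketch on a toy problem. It is not the DATML or DiKTNet training code: the linear model, the synthetic task family (`make_task`), and every hyperparameter value are assumptions chosen purely for illustration. The sketch shows only the general shape of the algorithm: adapt a copy of the weights to one sampled task with a few gradient steps, then move the shared initialization a fraction of the way toward the adapted weights.

```python
import random

def make_task(rng, n_points=10):
    """Hypothetical toy task: noiseless samples of y = a*x + 1 with a random slope a."""
    a = rng.uniform(2.0, 4.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n_points)]
    return [(x, a * x + 1.0) for x in xs]

def mse(params, data):
    """Mean squared error of the linear model y = w*x + b on one task."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def adapt(params, data, inner_lr=0.1, k=5):
    """Inner loop: k full-batch gradient steps on one task's data."""
    w, b = params
    n = len(data)
    for _ in range(k):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w, b = w - inner_lr * grad_w, b - inner_lr * grad_b
    return (w, b)

def reptile(rng, init=(0.0, 0.0), meta_iters=1000, meta_lr=0.1):
    """Outer loop: move the initialization toward each task's adapted weights."""
    theta = init
    for _ in range(meta_iters):
        phi = adapt(theta, make_task(rng))
        # Reptile update: theta <- theta + meta_lr * (phi - theta).
        # Only first-order information is used; no second derivatives.
        theta = tuple(t + meta_lr * (p - t) for t, p in zip(theta, phi))
    return theta

rng = random.Random(0)  # fixed seed so the run is reproducible
theta = reptile(rng)
new_task = make_task(rng)
print("meta-learned init:", theta)
print("loss after adapting from meta-init:", mse(adapt(theta, new_task), new_task))
print("loss after adapting from zeros:    ", mse(adapt((0.0, 0.0), new_task), new_task))
```

In this toy setting, the meta-learned initialization should sit near the center of the task family, so the same small number of inner steps on a new task reaches a lower loss than starting from zeros. That mirrors, in miniature, the low-resource adaptation setting the abstract describes.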
