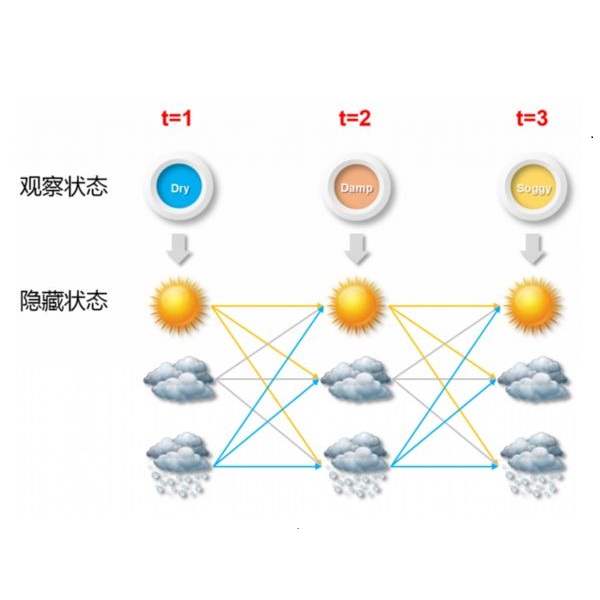

Hidden Markov models (HMMs) are commonly used for disease progression modeling when the true patient health state is not fully known. Because HMM likelihood functions typically have multiple local optima, incorporating additional patient covariates can improve parameter estimation and predictive performance. To allow for this, we develop hidden Markov recurrent neural networks (HMRNNs), a special case of recurrent neural networks that combines neural networks' flexibility with HMMs' interpretability. The HMRNN can be reduced to a standard HMM, with an identical likelihood function and parameter interpretations, but can also be combined with other predictive neural networks that take patient information as input. The HMRNN estimates all parameters simultaneously via gradient descent. Using a dataset of Alzheimer's disease patients, we demonstrate how the HMRNN can combine an HMM with other predictive neural networks to improve disease forecasting and to offer a novel clinical interpretation compared with a standard HMM trained via expectation-maximization.
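To make the link between an HMM likelihood and a recurrent computation concrete, the following is a minimal sketch, not the authors' implementation. It evaluates the standard HMM forward recursion as a loop over time steps, with the state distribution playing the role of a recurrent hidden state. The function names, the use of discrete observations, and the softmax parameterization of the probabilities are all assumptions made for illustration; the abstract does not specify them.

```python
import itertools
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax, used to map free parameters to probabilities."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def hmm_log_likelihood(obs, pi_logits, trans_logits, emit_logits):
    """Log-likelihood of a discrete observation sequence under an HMM.

    Assumed (hypothetical) parameterization: unconstrained logits that are
    passed through a softmax, so gradient-based updates would keep every
    row a valid probability distribution.
    """
    pi = softmax(pi_logits)            # initial state distribution, shape (K,)
    P = softmax(trans_logits, axis=1)  # transition matrix, rows sum to 1, (K, K)
    E = softmax(emit_logits, axis=1)   # emission matrix, rows sum to 1, (K, M)

    alpha = pi * E[:, obs[0]]          # forward variable at the first time step
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            # Recurrent update: propagate through transitions, then weight
            # each state by the probability of the current observation.
            alpha = (alpha @ P) * E[:, obs[t]]
        norm = alpha.sum()             # rescale to avoid numerical underflow
        log_lik += np.log(norm)
        alpha = alpha / norm
    return log_lik


def brute_force_log_likelihood(obs, pi_logits, trans_logits, emit_logits):
    """Reference value: sum the joint probability over every hidden path."""
    pi = softmax(pi_logits)
    P = softmax(trans_logits, axis=1)
    E = softmax(emit_logits, axis=1)
    total = 0.0
    for path in itertools.product(range(len(pi)), repeat=len(obs)):
        p = pi[path[0]] * E[path[0], obs[0]]
        for t in range(1, len(obs)):
            p *= P[path[t - 1], path[t]] * E[path[t], obs[t]]
        total += p
    return np.log(total)
```

Because every step of the recursion is a differentiable function of the logits, the same computation could be written in an automatic-differentiation framework and its negative log-likelihood minimized by gradient descent, possibly together with the weights of another network; that training loop is omitted here.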
