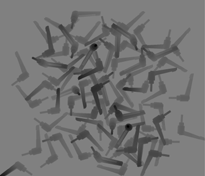

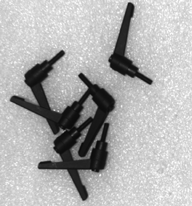

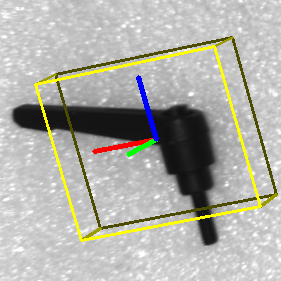

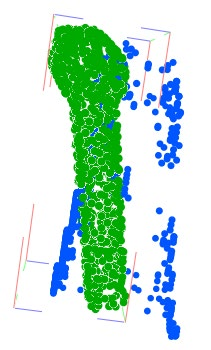

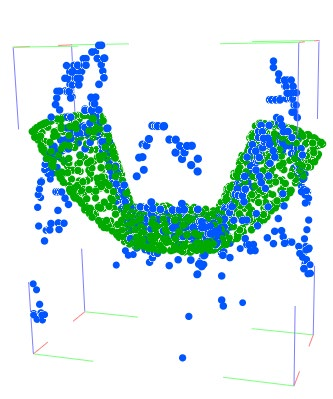

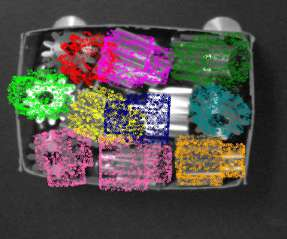

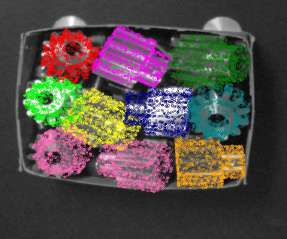

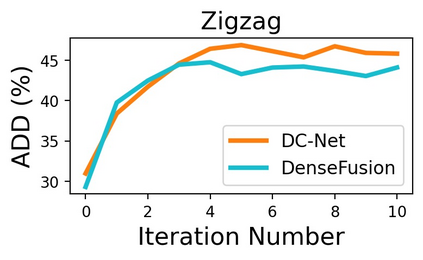

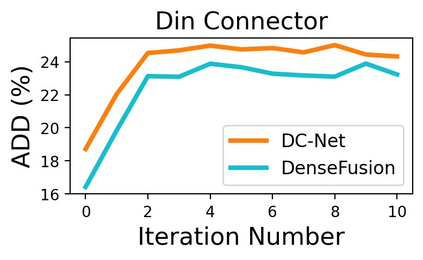

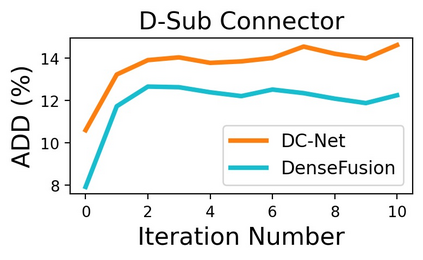

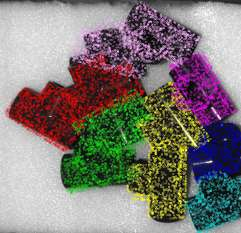

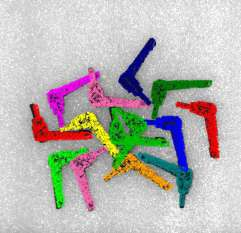

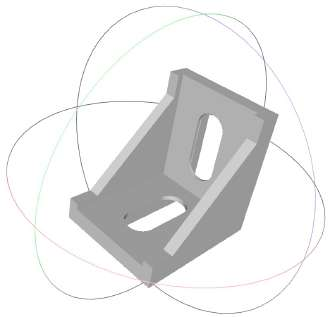

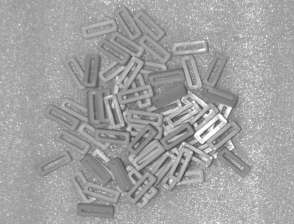

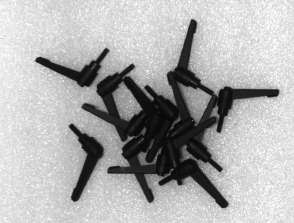

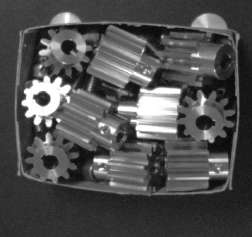

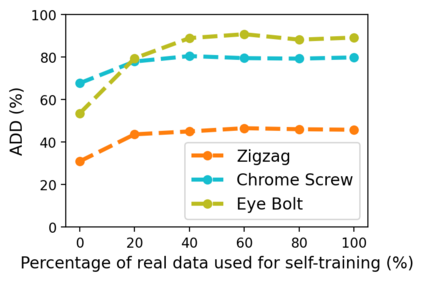

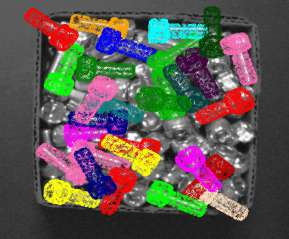

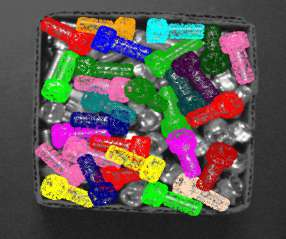

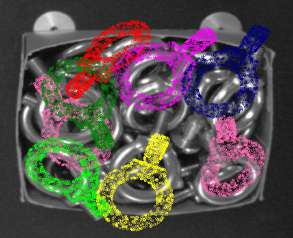

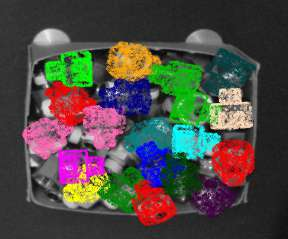

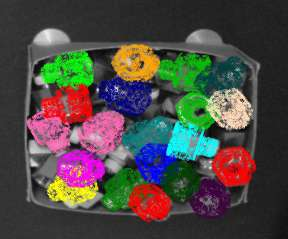

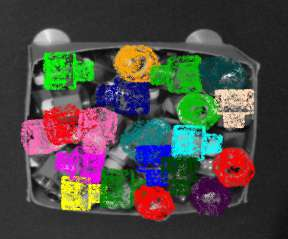

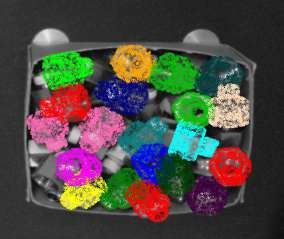

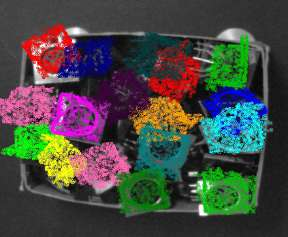

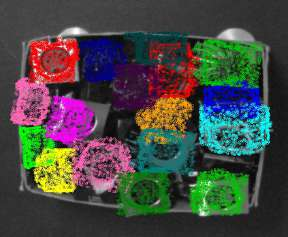

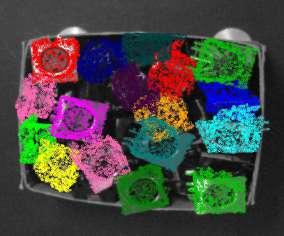

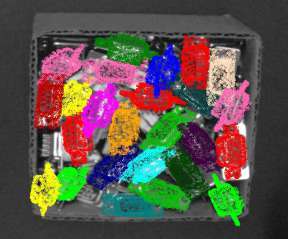

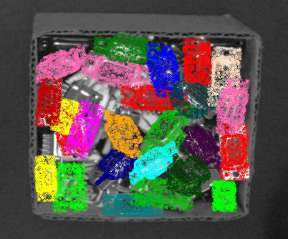

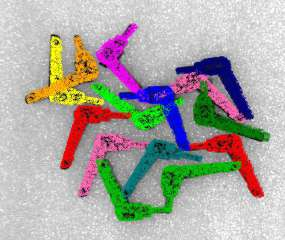

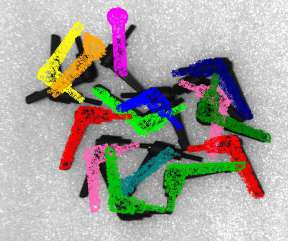

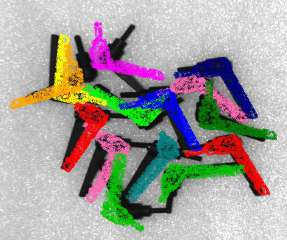

In this paper, we propose an iterative self-training framework for sim-to-real 6D object pose estimation to facilitate cost-effective robotic grasping. Given a bin-picking scenario, we build a photo-realistic simulator to synthesize abundant virtual data and use these data to train an initial pose estimation network. This network then serves as a teacher model that generates pose predictions for unlabeled real data. From these predictions, we design a comprehensive adaptive selection scheme to identify reliable results and use them as pseudo labels to update a student model for pose estimation on real data. To continuously improve the quality of the pseudo labels, we iterate these steps, taking the trained student model as the new teacher and re-labeling the real data with the refined teacher. We evaluate our method on a public benchmark and our newly released dataset, achieving ADD(-S) improvements of 11.49% and 22.62%, respectively. Our method also improves robotic bin-picking success by 19.54%, demonstrating the potential of iterative sim-to-real solutions for robotic applications.
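The teacher-student loop described above can be illustrated with a minimal sketch. It is not the paper's implementation: the names `iterative_self_training`, `select_reliable`, `train_student`, and the fixed `threshold` are hypothetical, and a simple score cutoff stands in for the comprehensive adaptive selection scheme, whose details the abstract does not give. A "model" here is assumed to be any callable that returns a prediction together with a reliability score.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical types for illustration only.
# A model maps one sample to (prediction, reliability score).
Model = Callable[[float], Tuple[float, float]]
# A trainer builds a new model from (sample, pseudo label) pairs.
Trainer = Callable[[Sequence[Tuple[float, float]]], Model]


def select_reliable(
    predictions: Sequence[Tuple[float, float, float]], threshold: float
) -> List[Tuple[float, float]]:
    """Keep (sample, prediction) pairs whose score reaches the threshold.

    Assumption: a fixed cutoff replaces the paper's adaptive selection.
    """
    return [(x, y) for x, y, score in predictions if score >= threshold]


def iterative_self_training(
    teacher: Model,
    train_student: Trainer,
    unlabeled_real: Sequence[float],
    rounds: int = 3,
    threshold: float = 0.5,
) -> Tuple[Model, List[int]]:
    """Run the teacher -> pseudo labels -> student loop for a few rounds.

    Returns the final model and the number of pseudo labels kept per round.
    """
    history: List[int] = []
    for _ in range(rounds):
        # 1. The current teacher predicts on unlabeled real data.
        predictions = [(x, *teacher(x)) for x in unlabeled_real]
        # 2. Only predictions judged reliable become pseudo labels.
        pseudo_labels = select_reliable(predictions, threshold)
        history.append(len(pseudo_labels))
        if not pseudo_labels:
            break  # nothing reliable to learn from
        # 3. The student is trained on pseudo labels and becomes the new teacher.
        teacher = train_student(pseudo_labels)
    return teacher, history
```

In this sketch the initial `teacher` would be the network trained on synthetic data, and `train_student` would wrap whatever training routine updates the pose estimator on the selected real samples.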
