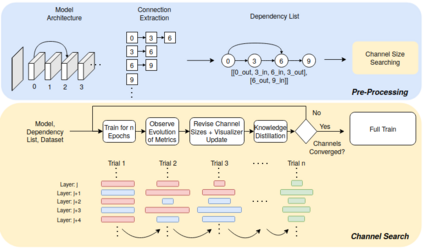

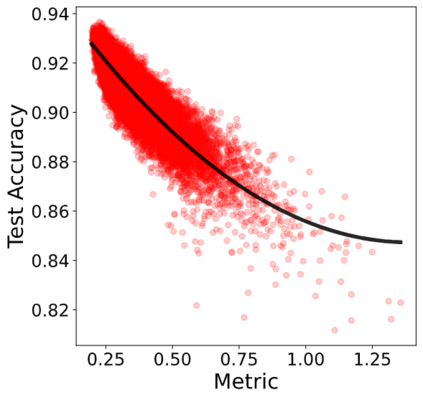

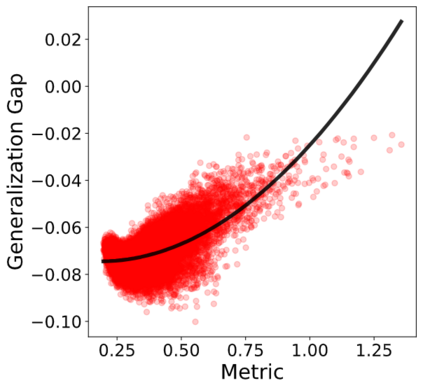

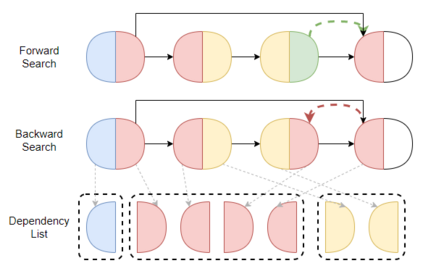

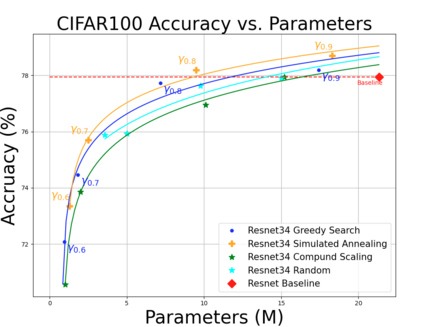

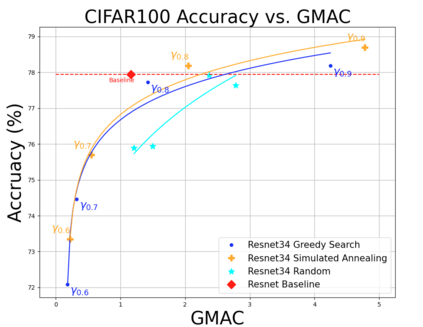

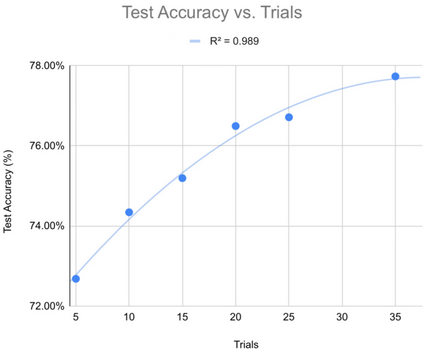

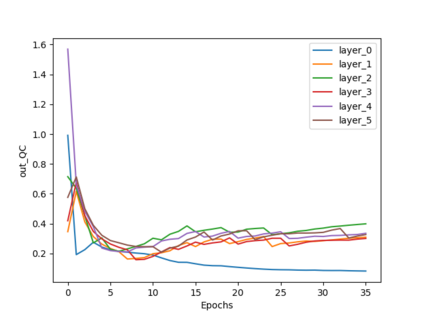

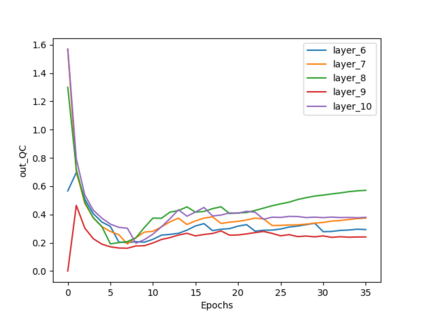

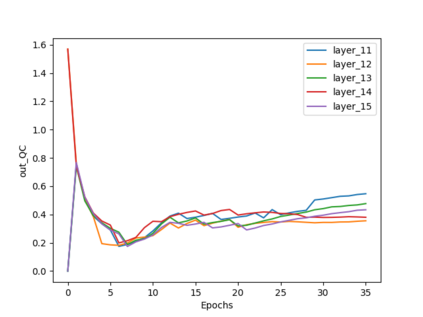

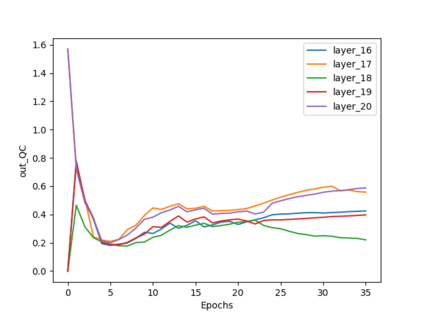

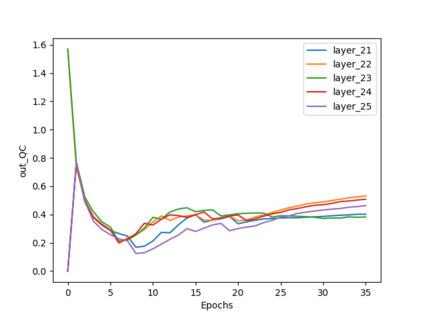

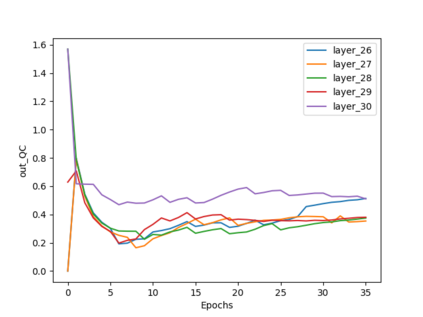

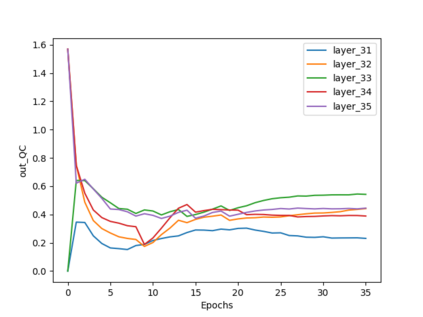

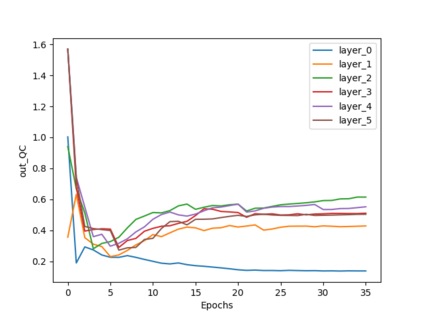

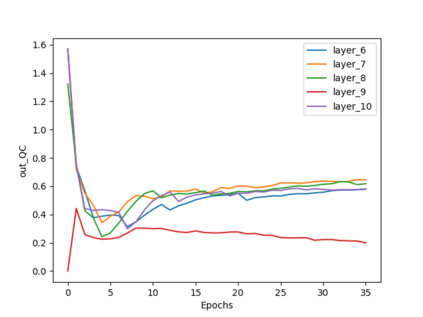

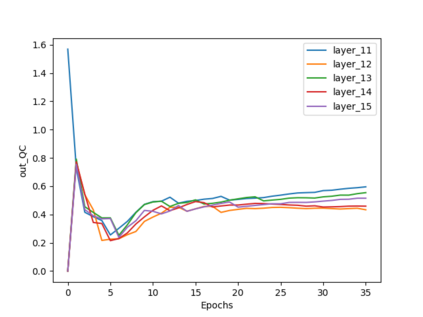

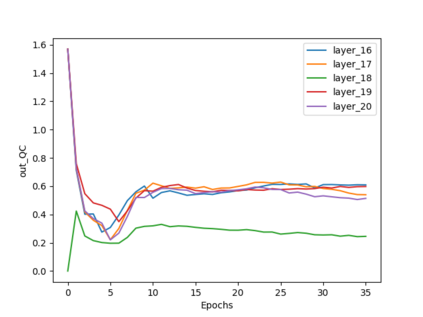

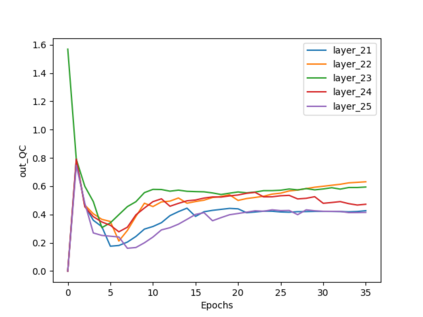

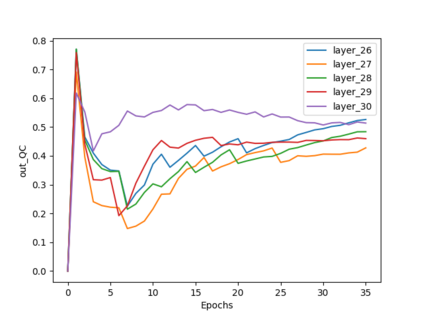

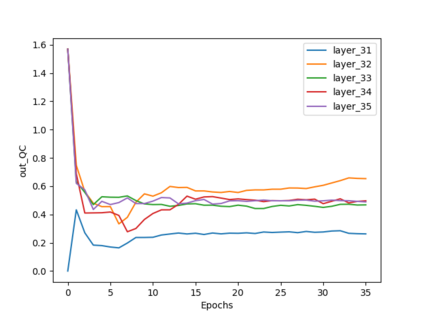

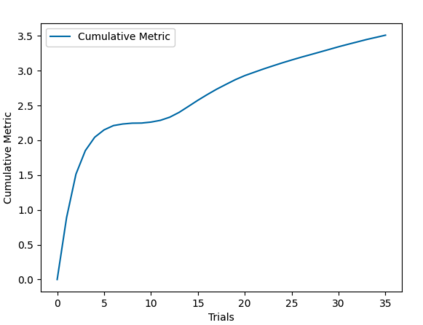

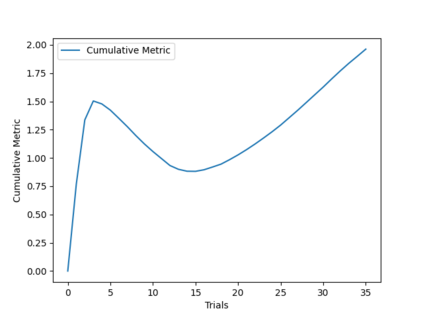

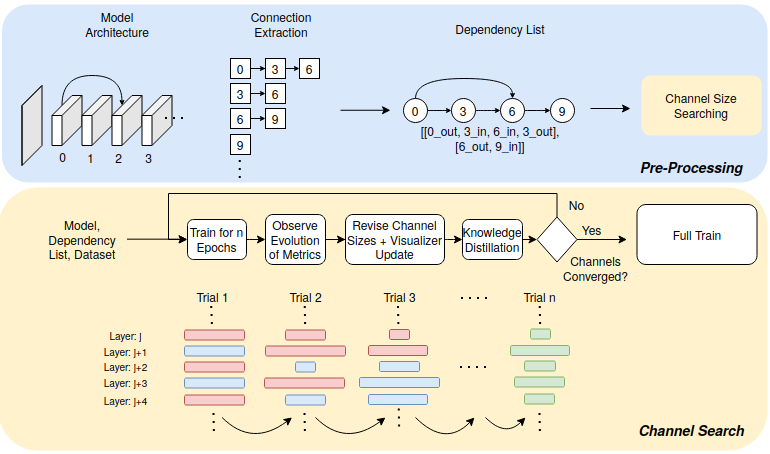

Neural Architecture Search (NAS) has been pivotal in finding optimal network configurations for Convolutional Neural Networks (CNNs). While many methods explore NAS from a global search-space perspective, the optimization schemes they employ typically require heavy computational resources. This work introduces a method that remains efficient in computationally constrained environments by examining the micro-search space of channel size. To tackle channel-size optimization, we design an automated algorithm that extracts the dependencies between connected layers of the network. In addition, we introduce the idea of knowledge distillation, which preserves trained weights across trials in which the channel sizes change. Further, since the standard performance indicators (accuracy, loss) evaluate the network only as a whole and fail to capture the performance of individual network components, we introduce a novel metric that correlates highly with test accuracy and enables analysis of individual network layers. Combining dependency extraction, metrics, and knowledge distillation, we introduce an efficient search algorithm with simulated-annealing-inspired stochasticity, and demonstrate its effectiveness in finding optimal architectures that outperform baselines by a large margin.
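To make the idea of a search with simulated-annealing-inspired stochasticity more concrete, the following is a minimal sketch of a generic annealing loop over per-layer channel sizes. It is not the authors' algorithm: the function names, the step size, the cooling schedule, and the toy scoring function are all assumptions made for illustration, and the sketch omits dependency extraction and knowledge distillation entirely by treating each layer's channel size as independent.

```python
import math
import random


def anneal_channel_sizes(init_channels, score_fn, steps=200, t0=1.0,
                         cooling=0.97, step_size=8, min_ch=8, seed=0):
    """Generic simulated-annealing-style search over channel sizes.

    score_fn is a hypothetical stand-in for a per-configuration metric:
    it maps a list of channel sizes to a float, where higher is better.
    """
    rng = random.Random(seed)
    current = list(init_channels)
    current_score = score_fn(current)
    best, best_score = list(current), current_score
    temperature = t0
    for _ in range(steps):
        # Propose a neighbour: grow or shrink one randomly chosen layer.
        candidate = list(current)
        i = rng.randrange(len(candidate))
        change = rng.choice((-step_size, step_size))
        candidate[i] = max(min_ch, candidate[i] + change)
        cand_score = score_fn(candidate)
        delta = cand_score - current_score
        # Always accept improvements; accept a worse candidate with
        # probability exp(delta / T), which shrinks as T cools.
        if delta >= 0 or rng.random() < math.exp(delta / temperature):
            current, current_score = candidate, cand_score
            if current_score > best_score:
                best, best_score = list(current), current_score
        temperature *= cooling
    return best, best_score


def toy_score(channels, target=(32, 64, 128)):
    # Toy objective (assumption): negative scaled squared distance
    # to an arbitrary target configuration.
    return -sum(((c - t) / 8) ** 2 for c, t in zip(channels, target))


if __name__ == "__main__":
    best, score = anneal_channel_sizes([16, 16, 16], toy_score, steps=300)
    print(best, score)
```

In a real setting, `score_fn` would be replaced by a short training trial followed by whatever layer-level metric is in use, which is far more expensive than the toy objective here. The early high temperature lets the search accept some worse configurations and escape local optima before it settles.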
