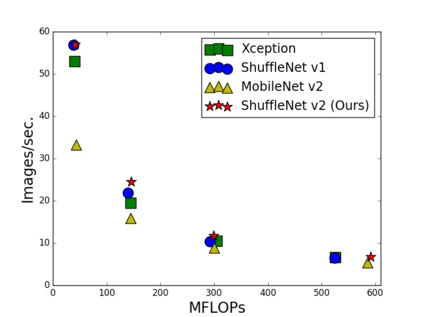

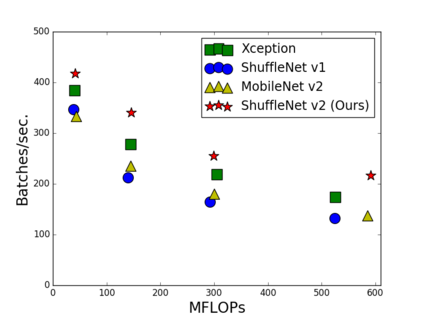

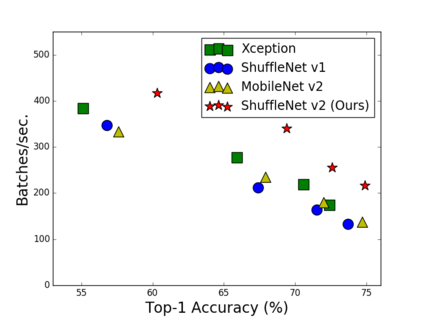

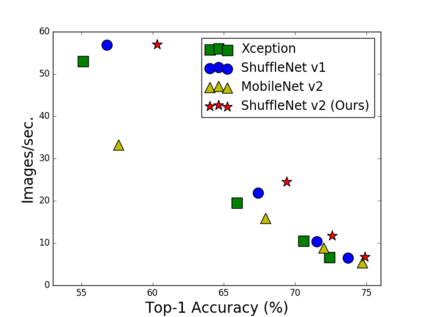

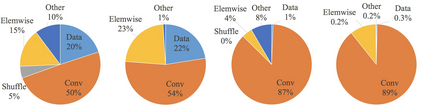

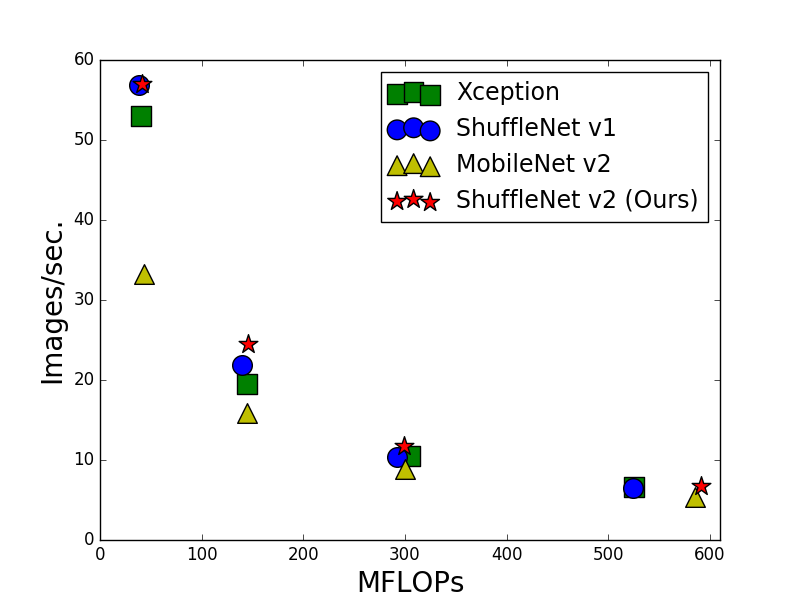

Currently, neural network architecture design is mostly guided by the \emph{indirect} metric of computation complexity, i.e., FLOPs. However, the \emph{direct} metric, e.g., speed, also depends on other factors such as memory access cost and platform characteristics. Thus, this work proposes to evaluate the direct metric on the target platform, rather than considering FLOPs alone. Based on a series of controlled experiments, this work derives several practical \emph{guidelines} for efficient network design. Accordingly, a new architecture is presented, called \emph{ShuffleNet V2}. Comprehensive ablation experiments verify that our model is state-of-the-art in terms of the speed-accuracy tradeoff.

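To make the gap between FLOPs and memory access cost concrete, here is a minimal sketch under a simplified cost model. It assumes a 1x1 convolution on an `h x w` feature map, counts one multiply-add per FLOP, and estimates memory access cost (MAC) as reading the input, writing the output, and reading the weights once each. The function name `conv1x1_costs` and the layer sizes are illustrative assumptions, not taken from the text above, and real hardware behaviour will depend on caching and the platform.

```python
def conv1x1_costs(h, w, c_in, c_out):
    """Return (flops, mac) for a 1x1 convolution under a simplified cost model.

    flops: multiply-adds, h * w * c_in * c_out
    mac:   elements read or written once each:
           input (h*w*c_in) + output (h*w*c_out) + weights (c_in*c_out)
    """
    flops = h * w * c_in * c_out
    mac = h * w * (c_in + c_out) + c_in * c_out
    return flops, mac


# Hypothetical layer shapes chosen so that c_in * c_out is constant,
# i.e. every configuration has exactly the same FLOPs.
h = w = 56
configs = [(128, 128), (64, 256), (32, 512), (16, 1024)]

results = [(c_in, c_out) + conv1x1_costs(h, w, c_in, c_out)
           for c_in, c_out in configs]

for c_in, c_out, flops, mac in results:
    print(f"c_in={c_in:5d} c_out={c_out:5d} flops={flops:,} mac={mac:,}")
```

All four configurations have identical FLOPs, yet the estimated MAC grows as the channel ratio becomes more unbalanced. Under this model, FLOPs alone cannot distinguish layers that may run at quite different speeds, which is why measuring speed on the target platform is the more reliable check.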