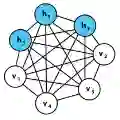

The deep Boltzmann machine (DBM) has been an important development in the quest for powerful "deep" probabilistic models. To date, simultaneous or joint training of all layers of the DBM has been largely unsuccessful with existing training methods. We introduce a simple regularization scheme that encourages the weight vectors associated with each hidden unit to have similar norms. We demonstrate that this regularization can be easily combined with standard stochastic maximum likelihood to yield an effective training strategy for the simultaneous training of all layers of the deep Boltzmann machine.
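The abstract does not give the exact form of the regularizer, so the following is only a minimal sketch of the idea under stated assumptions. It assumes a squared-deviation penalty on the column norms of a visible-to-hidden weight matrix `W` (column `j` is the weight vector of hidden unit `j`), applied separately to each layer's matrix. It also assumes that a stochastic maximum likelihood (SML) gradient estimate `sml_grad` is computed elsewhere. The names `norm_penalty`, `norm_penalty_grad`, `regularized_step` and the coefficient `lam` are hypothetical and do not come from the paper.

```python
import numpy as np


def norm_penalty(W, lam):
    """Hypothetical penalty (lam/2) * sum_j (||w_j|| - mean_k ||w_k||)^2.

    W has shape (n_visible, n_hidden); column j is the weight vector w_j
    of hidden unit j. The penalty is zero when all columns have equal norm.
    """
    norms = np.linalg.norm(W, axis=0)
    dev = norms - norms.mean()
    return 0.5 * lam * np.sum(dev ** 2)


def norm_penalty_grad(W, lam, eps=1e-12):
    """Gradient of norm_penalty with respect to W.

    d(penalty)/d(n_j) = lam * dev_j (the term from the mean cancels because
    the deviations sum to zero), and d(n_j)/d(w_j) = w_j / n_j.
    eps guards against division by a zero-norm column.
    """
    norms = np.linalg.norm(W, axis=0)
    dev = norms - norms.mean()
    return lam * W * (dev / (norms + eps))  # broadcasts over columns


def regularized_step(W, sml_grad, lr, lam):
    """One gradient-ascent step on (log-likelihood estimate - penalty).

    sml_grad is assumed to be a stochastic estimate of d log p / dW,
    e.g. obtained from SML with persistent Markov chains; it is not
    computed here.
    """
    return W + lr * (sml_grad - norm_penalty_grad(W, lam))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Columns deliberately initialised with very different scales.
    W = rng.normal(size=(20, 5)) * np.array([0.1, 0.5, 1.0, 2.0, 4.0])
    # With a zero likelihood gradient, only the penalty acts on W.
    for _ in range(200):
        W = regularized_step(W, np.zeros_like(W), lr=0.1, lam=1.0)
    print(np.linalg.norm(W, axis=0))
```

Because the penalty's gradient with respect to each norm is `lam * (n_j - mean)`, which sums to zero across hidden units, a step driven by the penalty alone shrinks the spread of the norms while leaving their mean unchanged. In this sketch the regularizer therefore constrains only the *relative* norms of the hidden units; the overall weight scale is left to the likelihood term.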