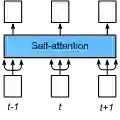

Transformers are a type of neural network that has revolutionised natural language processing and protein science. Their key building block is a mechanism called self-attention, which is trained to predict missing words in sentences. Despite the practical success of transformers in applications, it remains unclear what self-attention learns from data, and how. Here, we give a precise analytical and numerical characterisation of transformers trained on data drawn from a generalised Potts model with interactions between sites and Potts colours. While an off-the-shelf transformer requires several layers to learn this distribution, we show analytically that a single layer of self-attention with a small modification can learn the Potts model exactly in the limit of infinite sampling. We show that this modified self-attention, which we call "factored", has the same functional form as the conditional probability of a Potts spin given the other spins. We also compute its generalisation error using the replica method from statistical physics, and derive an exact mapping to pseudo-likelihood methods for solving the inverse Ising and Potts problems.
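To make the "conditional probability of a Potts spin given the other spins" concrete, here is a minimal sketch in plain Python. It assumes an energy of the form H(s) = -Σ_{i<j} J[i][j] · U[s_i][s_j], with a symmetric site-coupling matrix `J` and a symmetric colour-coupling matrix `U`. This notation, the function name `potts_conditional`, and the toy values are illustrative assumptions and are not taken from the abstract. Under that assumption, the conditional is a softmax over colours of a local field summed over the other sites.

```python
import math

def potts_conditional(spins, i, J, U, beta=1.0):
    """Return p(s_i = a | all other spins) for every colour a.

    Assumed energy (hypothetical notation, not from the source):
        H(s) = -sum_{i<j} J[i][j] * U[s_i][s_j]
    with J symmetric over sites and U symmetric over colours.
    """
    n_colours = len(U)
    # Local field acting on site i for each candidate colour a.
    fields = [
        beta * sum(J[i][j] * U[a][spins[j]]
                   for j in range(len(spins)) if j != i)
        for a in range(n_colours)
    ]
    # Softmax over colours, shifted by the maximum for numerical stability.
    shift = max(fields)
    weights = [math.exp(f - shift) for f in fields]
    z = sum(weights)
    return [w / z for w in weights]

# Toy example (made-up numbers): 3 sites, 2 colours.
J = [[0.0, 1.0, 0.5],
     [1.0, 0.0, 0.2],
     [0.5, 0.2, 0.0]]
U = [[1.0, -1.0],
     [-1.0, 1.0]]
probs = potts_conditional([0, 0, 1], i=0, J=J, U=U)
```

In this sketch the site weights `J[i][j]` do not depend on the colours, and the colour interaction `U` does not depend on the sites. The abstract states that factored self-attention shares the functional form of this conditional; the code illustrates only the conditional itself, not the authors' architecture.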

Title: Optimal inference of a generalised Potts model by single-layer transformers with factored attention
